What if there was a solution to speed up algorithms, parallelise computing, parallelise Pandas and NumPy and integrate with libraries like sklearn and XGBoost? Then it would be called Dask.

There are many solutions available in the market which are parallelisable, but they are not clearly transformable into a big DataFrame computation. Today these companies tend to solve their problems either by writing custom code with low-level systems like MPI, or complex queuing systems or by heavy lifting with MapReduce or Spark.

Dask exposes low-level APIs to its internal task scheduler to execute advanced computations. This enables the building of personalised parallel computing system which uses the same engine that powers Dask’s arrays, DataFrames, and machine learning algorithms.

What Made Dask Tick

Dask emphasizes the following virtues:

- The ability to work in parallel with NumPy array and Pandas DataFrame objects

- integration with other projects.

- Distributed computing

- Faster operation because of its low overhead and minimum serialisation

- Runs resiliently on clusters with thousands of cores

- Real-time feedback and diagnostics

Dask’s 3 parallel collections namely Dataframes, Bags and Arrays, enables it to store data that is larger than RAM. Each of these is able to use data partitioned between RAM and a hard disk as well distributed across multiple nodes in a cluster.

Dask can enable efficient parallel computations on single machines by leveraging their multi-core CPUs and streaming data efficiently from disk. It can run on a distributed cluster.

Dask also allows the user to replace clusters with a single-machine scheduler which would bring down the overhead. These schedulers require no setup and can run entirely within the same process as the user’s session.

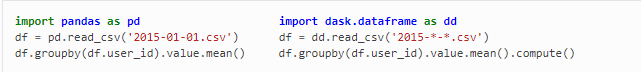

Dask vs Pandas

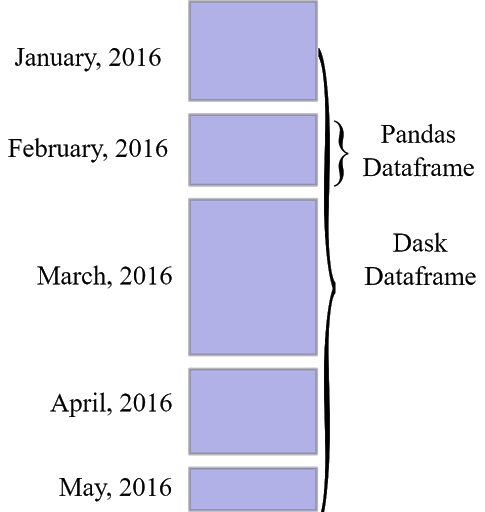

Dask DataFrames coordinate many Pandas DataFrames/Series arranged along the index. A Dask DataFrame is partitioned row-wise, grouping rows by index value for efficiency. These Pandas objects may live on disk or on other machines.

Dask DataFrame has the following limitations:

- It is expensive to set up a new index from an unsorted column.

- The Pandas API is very large. Dask DataFrame does not attempt to implement many Pandas features.

- Wherever Pandas lacked speed, that would carry on to Dask DataFrame as well.

Dask For ML

Any Machine Learning project would suffer from either of the following two factors

- Long training times

- Large Datasets

Dask can address the above problems in the following ways:

- Dask-ML makes it easy to use normal Dask workflows to prepare and set up data, then it deploys XGBoost or Tensorflow alongside Dask, and hands the data over.

- Replacing NumPy arrays with Dask arrays would make scaling algorithms easier.

- In all cases Dask-ML endeavours to provide a single unified interface around the familiar NumPy, Pandas, and Scikit-Learn APIs. Users familiar with Scikit-Learn should feel at home with Dask-ML.

Dask also has methods from sklearn for hyperparameter search such as GridSearchCV, RandomizedSearchCV etc.

from dask_ml.datasets import make_regression

from dask_ml.model_selection import train_test_split, GridSearchCV

Here is an implementation of sklearn with Dask for prediction models:

from sklearn.linear_model import ElasticNet

from dask_ml.wrappers import ParallelPostFit

el = ParallelPostFit(estimator=ElasticNet())

el.fit(Xtrain, ytrain)

preds = el.predict(Xtest)

Implementing joblib to parallelise workload:

import dask_ml.joblib

from sklearn.externals import joblib

Dask lets analysts handle large datasets (100GB+) even on relatively low-power devices without the need for configuration or setup.

Conclusion

Pandas is still the go-to option as long as the dataset fits into the user’s RAM. For functions that don’t work with Dask DataFrame, dask.delayed offers more flexibility can be used.

Dask is very selective in the way it uses the disk. It evaluates computations in a low-memory footprint by pulling in chunks of data from disk, going ahead with the necessary processing shedding off the intermediate values.

Dask’s active participation at the community level has contributed a lot to the way it has evolved from within this ecosystem. This enables the rest of the ecosystem to benefit from parallel and distributed computing with minimal coordination.

As a result, Dask development is pushed forward by developer communities. This shall ensure that the Python ecosystem will continue to evolve with great consistency.

Installing Dask with pip:

pip install “dask[complete]”

Check the Dask cheatsheet here