Deep learning has sparked a renaissance in the AI computing world, fuelled by the industry’s need to rapidly improve ML accuracy and performance.

Despite rapid innovation in machine learning, the lack of consensus on how to benchmark models still poses a major problem for the community. Fair and useful benchmarking of ML software frameworks, ML hardware accelerators and ML platforms requires a systematic benchmark suite that is both representative of real-world use cases and suitable for fair comparisons across different software and hardware platforms.

Last month, a consortium of more than 40 leading companies and university researchers introduced MLPerf Inference v0.5, the first industry-standard machine learning benchmark suite for measuring system performance and power efficiency. It builds on the MLPerf benchmark effort first introduced in 2018.

What Is MLPerf?

MLPerf began as a collaboration between researchers at Baidu, Google, Harvard, and Stanford based on their early experiences. Since then, MLPerf has grown to include many companies, a host of universities worldwide, along with hundreds of individual participants.

MLPerf is a machine learning benchmark standard and suite, driven by the industry and the academic research community at large.

The benchmark suite covers a wide range of applications, from autonomous driving to natural language processing, on platforms including smartphones, PCs, edge servers and cloud computing platforms in the data centre.

The contributors designed MLPerf Inference to reflect real-world applications rather than to be just another benchmark.

The results of the latest round of MLPerf assessments show that Google’s AI-optimised TPU Pods took top honours in state-of-the-art NLP and vision tasks.

How TPU Pods Topped The Charts

Google Cloud Platform (GCP) has set three new performance records in the latest round of the MLPerf benchmark competition.

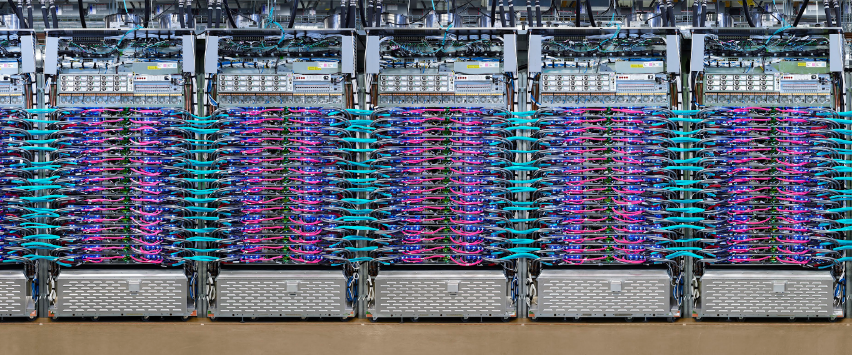

All three record-setting results ran on Cloud TPU v3 Pods, the latest generation of supercomputers that Google has built specifically for machine learning.

Google Cloud is the first public cloud provider to outperform on-premises systems when running large-scale, industry-standard ML training workloads: Transformer, Single Shot Detector (SSD) and ResNet-50.

The wide range of Cloud TPU Pod configurations, called slice sizes, used in the MLPerf benchmarks illustrates how Cloud TPU customers can choose the scale that best fits their needs. A Cloud TPU v3 Pod slice can include 16, 64, 128, 256, 512 or 1024 chips, and Google provides several open-source reference models for Cloud TPUs.
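To make the slice options concrete, here is a minimal Python sketch that picks the smallest listed slice size offering at least a given number of chips. The helper `smallest_slice` is hypothetical and not part of any Google Cloud API; real capacity planning would also weigh cost, availability and how well a given model scales across chips.

```python
# Cloud TPU v3 Pod slice sizes (number of chips) as listed in the article.
SLICE_SIZES = (16, 64, 128, 256, 512, 1024)


def smallest_slice(chips_needed: int) -> int:
    """Return the smallest listed slice with at least `chips_needed` chips.

    Hypothetical helper for illustration only; not a Google Cloud API.
    """
    if chips_needed < 1:
        raise ValueError("chips_needed must be positive")
    # SLICE_SIZES is sorted ascending, so the first fit is the smallest.
    for size in SLICE_SIZES:
        if size >= chips_needed:
            return size
    raise ValueError(
        f"no listed slice has {chips_needed} chips; the largest is {SLICE_SIZES[-1]}"
    )


if __name__ == "__main__":
    for need in (10, 100, 600):
        print(need, "chips needed ->", smallest_slice(need), "chip slice")
```

Rounding up to the next available size reflects the trade-off the article describes: larger slices cost more but finish training sooner.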

In addition, Google offers discounts on one-year and three-year reservations of Cloud TPU Pod slices, giving businesses an even greater performance-per-dollar advantage.

Transformer networks and SSD models are at the core of many modern NLP and object detection tasks. In the Transformer and SSD categories, Cloud TPU v3 Pods trained models over 84% faster than the fastest on-premises systems in the MLPerf Closed Division.
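A figure like "84% faster" can be read in more than one way. Assuming it means the TPU Pods delivered 1.84 times the training throughput of the baseline system, the short Python calculation below shows what that implies for wall-clock training time. The function name `time_fraction` is illustrative only.

```python
def time_fraction(percent_faster: float) -> float:
    """Fraction of the baseline's training time needed by the faster system.

    Assumes "X% faster" means throughput is (1 + X/100) times the baseline's,
    so time scales with the reciprocal of that speedup.
    """
    if percent_faster <= -100:
        raise ValueError("percent_faster must be greater than -100")
    speedup = 1.0 + percent_faster / 100.0
    return 1.0 / speedup


if __name__ == "__main__":
    frac = time_fraction(84)
    print(f"1.84x throughput -> {frac:.1%} of the baseline training time")
```

Under this reading, the TPU Pods would finish in roughly 54% of the baseline's time. If "84% faster" instead meant 84% less training time, the fraction would be 16%, so the exact definition matters when comparing published results.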

A Summary Of MLPerf Goals

- Accelerate progress in ML via fair and useful measurement

- Serve both the commercial and research communities

- Enable a fair comparison of competing systems

- Encourage innovation to improve the state-of-the-art of ML

- Enforce replicability to ensure reliable results

- Use representative workloads, reflecting production use-cases

- Keep benchmarking effort affordable

Since 2012, the amount of compute used in the largest AI training runs has been increasing exponentially. As long as this trend continues, it is worth preparing for the implications of systems far beyond today’s capabilities.
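To see how quickly exponential growth compounds, the sketch below computes the multiplicative increase in training compute over a period, assuming a constant doubling time. The default of 3.4 months is an assumption taken from OpenAI’s 2018 "AI and Compute" analysis rather than from MLPerf, and the function `growth_factor` is hypothetical.

```python
def growth_factor(months: float, doubling_months: float = 3.4) -> float:
    """Multiplicative increase in compute after `months`.

    Assumes a constant doubling time of `doubling_months`; the 3.4-month
    default is an assumed figure, not an MLPerf measurement.
    """
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    # Number of doublings elapsed, then 2 raised to that power.
    return 2.0 ** (months / doubling_months)


if __name__ == "__main__":
    # One year at the assumed doubling time: 2 ** (12 / 3.4)
    print(f"{growth_factor(12):.1f}x per year")
    print(f"{growth_factor(24):.0f}x over two years")
```

At that assumed rate, one year corresponds to roughly an 11.5x increase in compute, and the factor squares with every additional year, which is why benchmarks need to keep pace with rapidly scaling systems.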

The inference benchmarks were created thanks to contributions from companies and institutions such as Arm, Cadence, Centaur Technology, Dividiti, Facebook, General Motors, Google, Habana Labs, Harvard University, Intel, MediaTek, Microsoft, Myrtle, Nvidia, Real World Insights, University of Illinois at Urbana-Champaign, University of Toronto and Xilinx.

Learn more about MLPerf on the official MLPerf website.