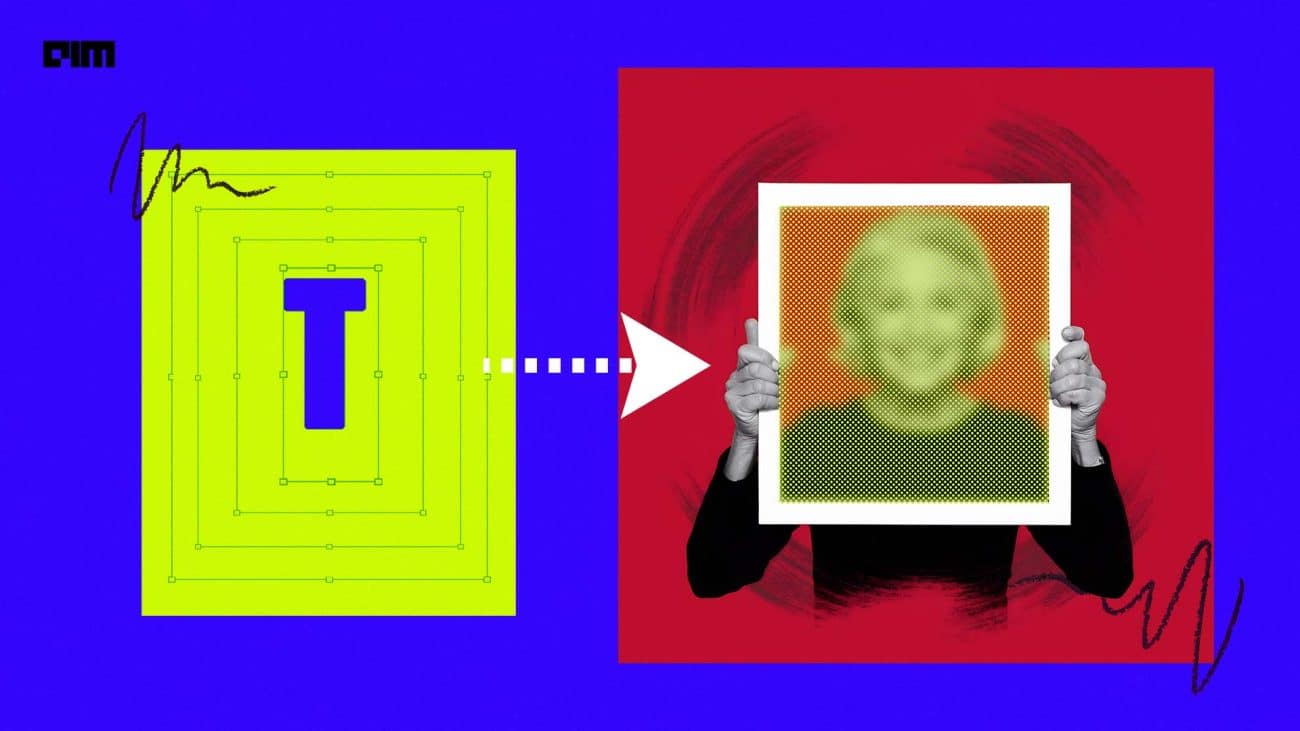

While you are busy zooming in and out to examine a picture on your smartphone, digital zoom tries to maintain resolution by running algorithms in the background that fill in the missing pieces (pixels) of the image. Most of these image upscaling techniques use machine learning: models learn a mapping (for example, by selecting filters) by training on corresponding pairs of low- and high-resolution images. In the learning stage, these pairs of image patches are fabricated from the original images, with the low-resolution patch generated by downscaling the high-resolution one. For a 2x zoom, for instance, a typical high-resolution patch is 6×6 and the corresponding synthetically downscaled low-resolution patch is 3×3.
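To make the patch-pair idea concrete, here is a minimal Python sketch that fabricates a 3×3 low-resolution training patch from a 6×6 high-resolution one. It assumes a simple 2×2 box-average downscale. Real pipelines often use bicubic downsampling instead. The helper names `downscale_2x` and `make_training_pair` are hypothetical.

```python
def downscale_2x(patch):
    """Average each non-overlapping 2x2 block (a simple box-filter downscale)."""
    h, w = len(patch), len(patch[0])
    if h % 2 or w % 2:
        raise ValueError("patch sides must be even")
    return [
        [
            (patch[r][c] + patch[r][c + 1] + patch[r + 1][c] + patch[r + 1][c + 1]) / 4
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]


def make_training_pair(hr_patch):
    """Fabricate a (low-res input, high-res target) pair from one HR patch."""
    return downscale_2x(hr_patch), hr_patch


# A 6x6 high-resolution patch with distinct pixel values.
hr = [[r * 6 + c for c in range(6)] for r in range(6)]
lr, target = make_training_pair(hr)
print(lr[0])  # [3.5, 5.5, 7.5]
```

A model trained on many such pairs learns to map the 3×3 input back to the 6×6 target.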

This filling in and reconstruction is done with image processing techniques such as demosaicing, denoising and super-resolution (SR).

In general, demosaicing algorithms perform well in flat regions of an image but produce conspicuous artifacts in high-frequency texture regions and along strong edges. This problem stems from the limited resolution of the input Bayer image.

Most denoising algorithms, meanwhile, not only remove noise but also smooth away high-frequency detail and texture. If the denoised image is processed further, for example by SR, this blur is magnified and degrades image quality. As a result, applying SR as a separate step after demosaicing or denoising can give unsatisfactory results.
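A 3-tap moving average is about the simplest denoiser there is, and it shows the trade-off described above. Applied to a fine alternating texture, it cuts the texture's contrast to a third. This is an illustrative sketch only. The functions `box_denoise` and `contrast` and the signal values are hypothetical, and practical denoisers are far more selective about what they smooth.

```python
def box_denoise(signal):
    """3-tap moving average; the border samples are repeated at each end."""
    padded = [signal[0]] + list(signal) + [signal[-1]]
    return [(padded[i - 1] + padded[i] + padded[i + 1]) / 3 for i in range(1, len(padded) - 1)]


def contrast(signal):
    """Peak-to-peak range, a crude measure of how much detail survives."""
    return max(signal) - min(signal)


texture = [0, 90, 0, 90, 0, 90, 0, 90]  # fine, alternating detail
smoothed = box_denoise(texture)
print(contrast(texture), contrast(smoothed))  # 90 30.0
```

Any later SR step only sees the flattened signal, so the lost detail cannot be recovered.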

Problems With Current Image Processing

Photographers spend much of their time adjusting settings, for example the ISO sensitivity on a DSLR, to get a cleaner image. A typical phone user, by contrast, wants high-quality photos with a single tap.

Localized misalignment requires the algorithm to be smart enough to accurately estimate the motion caused by unsteady hands, moving people and other factors.
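Local motion is commonly estimated by block matching. For a small patch from one frame, the algorithm searches nearby positions in the next frame for the window that differs least. The sketch below uses the sum of absolute differences (SAD) as the cost and an integer search radius. These choices, the helper names `sad` and `match_block`, and the toy ramp image are assumptions for illustration, not the method used in any particular phone.

```python
def sad(ref, frame, top, left):
    """Sum of absolute differences between ref and the same-sized window of frame at (top, left)."""
    return sum(
        abs(ref[r][c] - frame[top + r][left + c])
        for r in range(len(ref))
        for c in range(len(ref[0]))
    )


def match_block(ref, frame, top, left, radius):
    """Return the integer shift (dy, dx) within +/-radius that minimizes the SAD."""
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            y, x = top + dy, left + dx
            if y < 0 or x < 0 or y + len(ref) > len(frame) or x + len(ref[0]) > len(frame[0]):
                continue  # candidate window would fall outside the frame
            cost = sad(ref, frame, y, x)
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    return best[1], best[2]


# Toy 8x8 "next frame": a ramp with a unique value at every pixel.
frame = [[8 * r + c for c in range(8)] for r in range(8)]
# A 2x2 patch that sat at (3, 2) in the previous frame and has moved to (4, 3).
ref = [row[3:5] for row in frame[4:6]]
print(match_block(ref, frame, top=3, left=2, radius=2))  # (1, 1)
```

Real handheld footage adds noise, sub-pixel motion and occlusions, which is why a plain exhaustive search like this is only a starting point.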

Interpolation is also complicated because the captured samples are irregularly distributed, and they are irregular because the movements that produce them are random. The resulting data is dense in some regions and sparse in others, and this irregular spread over the pixel grid makes super-resolution harder to achieve.
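One simple way to interpolate irregularly placed samples is kernel regression. Each output pixel is estimated as a weighted average of nearby samples, with weights that fall off with distance. The sketch below uses an isotropic Gaussian kernel. This is a swapped-in, deliberately basic technique, and `kernel_interpolate`, its `sigma` parameter and the sample values are hypothetical. Where samples are dense the estimate is well supported. In sparse regions, a single nearby sample can dominate the estimate, which is the difficulty described above.

```python
import math


def kernel_interpolate(samples, x, y, sigma=0.5):
    """Estimate the value at (x, y) from scattered (sx, sy, value) samples,
    weighting each sample with an isotropic Gaussian of its distance."""
    num = den = 0.0
    for sx, sy, value in samples:
        w = math.exp(-((sx - x) ** 2 + (sy - y) ** 2) / (2 * sigma ** 2))
        num += w * value
        den += w
    return num / den if den > 0 else float("nan")  # nan: no usable samples nearby


# Irregular sample positions, e.g. gathered from several shifted frames (toy values).
samples = [(0.0, 0.0, 10.0), (0.3, 0.1, 12.0), (1.2, 0.9, 30.0), (2.0, 2.0, 50.0)]
estimate = kernel_interpolate(samples, 1.0, 1.0)  # value on the regular grid at (1, 1)
```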

Upscaling usually relies on methods such as nearest-neighbour, bilinear or bicubic interpolation. These fixed, linear interpolation filters struggle to reconstruct complex image content and tend to over-smooth edges.
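The over-smoothing is easy to see in one dimension. The sketch below applies linear interpolation, the one-dimensional building block of bilinear interpolation, to upscale a sharp step edge by 2x. The helper name `upscale_linear_1d` and the corner-aligned sampling grid are assumptions.

```python
def upscale_linear_1d(row, factor=2):
    """Upscale a 1-D row of pixels by linear interpolation.

    Output sample i sits at input coordinate i / factor, so the first and last
    samples line up with the input's end points (corner-aligned)."""
    n = len(row)
    out = []
    for i in range((n - 1) * factor + 1):
        pos = i / factor
        left = int(pos)
        right = min(left + 1, n - 1)
        frac = pos - left
        out.append(row[left] * (1 - frac) + row[right] * frac)
    return out


edge = [0, 0, 0, 100, 100, 100]  # a sharp step edge
print(upscale_linear_1d(edge))
# [0.0, 0.0, 0.0, 0.0, 0.0, 50.0, 100.0, 100.0, 100.0, 100.0, 100.0]
```

The output contains an intermediate value of 50 between the 0 and 100 plateaus. The sharp edge has become a ramp, and that is the blurring learned SR methods try to avoid.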

Digital camera sensors are covered with a color filter array (commonly a Bayer pattern), so each pixel records only one color channel. This incomplete color sampling means the missing colors must be interpolated, which costs resolution. The original image also contains noise, which worsens as pixel density increases.
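The sketch below shows what a color filter array does to a full-color image, assuming the common RGGB Bayer layout. The helper `bayer_mosaic` is hypothetical. Each pixel keeps only one of its three channel values, so a 2×2 RGB image holding 12 values becomes a mosaic holding only 4.

```python
# Channel kept at each position of the repeating 2x2 RGGB tile: 0 = R, 1 = G, 2 = B.
RGGB = [[0, 1],
        [1, 2]]


def bayer_mosaic(rgb):
    """Sample a full-color image through an RGGB color filter array.

    rgb is a list of rows, each row a list of (r, g, b) tuples; the result
    has one value per pixel."""
    return [
        [pixel[RGGB[r % 2][c % 2]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb)
    ]


image = [[(10, 20, 30), (11, 21, 31)],
         [(12, 22, 32), (13, 23, 33)]]
print(bayer_mosaic(image))  # [[10, 21], [22, 33]]
```

Demosaicing is the task of estimating the two discarded values at every pixel.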

In existing pipelines, the image is first demosaiced into a full-color image, and SR is then performed to enhance its resolution. However, demosaicing introduces artifacts when resolution is limited (such as color aliasing, zippering and moiré), and the SR step magnifies these artifacts.

So a group of Chinese researchers came up with a new method that aims to address the challenges that arise during the pre- and post-processing of images.

In this method, denoising and super-resolution are first performed jointly as a single mapping, and the resulting SR mosaic image is then converted into a full-color image. The network can be trained for this joint mapping, and the spatial information of the colors is extracted using convolution operations.

In other words, the input mosaic is first mapped to a noise-free, high-resolution mosaic, which is then mapped to a three-channel, full-color SR image. ESRGAN (Enhanced Super-Resolution Generative Adversarial Network), a deep neural network, is employed to implement these mappings.
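The ordering described above (joint denoising and SR on the mosaic first, demosaicing last) can be sketched as two composed stages. This is a toy illustration of the data flow, not TENet. All functions here are hypothetical, and an RGGB layout is assumed. The "denoise + SR" stage does no denoising and simply upscales each color plane 2x by nearest-neighbour. The demosaicing stage is a crude per-tile fill. The point is the shape of the data: the first stage stays in the single-channel mosaic domain while doubling its size, and only the second stage produces three channels.

```python
RGGB_OFFSETS = ((0, 0), (0, 1), (1, 0), (1, 1))  # positions of R, G1, G2, B in a 2x2 tile


def split_rggb(mosaic):
    """Split an RGGB mosaic into its four sub-planes R, G1, G2, B."""
    return [[row[c0::2] for row in mosaic[r0::2]] for r0, c0 in RGGB_OFFSETS]


def merge_rggb(planes):
    """Inverse of split_rggb: interleave four sub-planes back into one mosaic."""
    h, w = len(planes[0]), len(planes[0][0])
    out = [[0] * (2 * w) for _ in range(2 * h)]
    for plane, (r0, c0) in zip(planes, RGGB_OFFSETS):
        for r in range(h):
            for c in range(w):
                out[2 * r + r0][2 * c + c0] = plane[r][c]
    return out


def upscale_nearest(plane, factor=2):
    """Repeat every value factor times horizontally and vertically."""
    return [[v for v in row for _ in range(factor)] for row in plane for _ in range(factor)]


def stage1_denoise_sr(noisy_lr_mosaic):
    """Hypothetical stand-in for joint DN+SR: LR mosaic -> 2x larger mosaic with
    the same RGGB layout. A trained network would also remove noise here."""
    return merge_rggb([upscale_nearest(p) for p in split_rggb(noisy_lr_mosaic)])


def stage2_demosaic(hr_mosaic):
    """Hypothetical stand-in for demosaicing: every pixel of a 2x2 tile gets the
    tile's (R, mean of the two Gs, B)."""
    out = []
    for r in range(0, len(hr_mosaic), 2):
        top, bottom = hr_mosaic[r], hr_mosaic[r + 1]
        rows = ([], [])
        for c in range(0, len(top), 2):
            rgb = (top[c], (top[c + 1] + bottom[c]) / 2, bottom[c + 1])
            for row in rows:
                row.extend([rgb, rgb])
        out.extend(rows)
    return out


lr_mosaic = [[10, 20],
             [30, 40]]                    # one RGGB tile: R=10, G=20 and 30, B=40
hr_mosaic = stage1_denoise_sr(lr_mosaic)  # still one channel, now 4x4
full_color = stage2_demosaic(hr_mosaic)   # 4x4 pixels, three channels each
```

Keeping the first stage in the mosaic domain means demosaicing runs once, on the already-enlarged mosaic. Its artifacts are therefore not passed through an SR step afterwards.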

The researchers also introduced PixelShift200, the dataset used for training, to address the shortcomings of existing datasets. Pixel Shift technology captures four samples of the same image, moving the camera sensor by one pixel horizontally or vertically between shots, so that all the color information is recorded at every pixel. This ensures that the sampled images follow the distribution of natural images as sampled by the camera.
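Why four one-pixel shifts suffice can be checked directly. The check assumes an RGGB Bayer layout and the hypothetical shift sequence below; the exact order a camera uses may differ. Across the four exposures, every scene position is covered once by a red filter, twice by green and once by blue, so no color has to be interpolated.

```python
# Color filter over each position of the repeating 2x2 RGGB tile.
RGGB = [["R", "G"],
        ["G", "B"]]

# Hypothetical sensor offsets (dy, dx) for the four exposures; each differs from
# the previous one by a one-pixel horizontal or vertical move.
SHIFTS = [(0, 0), (0, 1), (1, 1), (1, 0)]


def colors_seen(row, col):
    """Filters that cover scene position (row, col) across the four exposures."""
    return sorted(RGGB[(row + dy) % 2][(col + dx) % 2] for dy, dx in SHIFTS)


print(colors_seen(5, 2))  # ['B', 'G', 'G', 'R']
```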

Camera used for data collection: Sony A7R3

Dataset used for comparison: DIV2K

Network Optimization: Adam with β₁ = 0.9, β₂ = 0.999 and a learning rate of 0.0001

Framework: PyTorch

Trained on: NVIDIA Titan Xp GPUs.
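In PyTorch, the optimizer setting listed above corresponds to `torch.optim.Adam(model.parameters(), lr=1e-4, betas=(0.9, 0.999))`, where `model` stands for the network. The minimal pure-Python Adam update below shows what those parameters control for a single scalar parameter. The function `adam_minimize` is hypothetical. The `eps` value is the common default of 1e-8 rather than something the source specifies.

```python
import math


def adam_minimize(grad, x0, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a function of one scalar parameter with Adam.

    grad(x) must return the derivative at x. Defaults mirror the settings above;
    eps = 1e-8 is an assumed (common) default, not taken from the source."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero init
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy problem: minimize (x - 3)^2, whose gradient is 2 * (x - 3).
# lr=1e-4 suits a deep network but is slow here, so a larger step is used.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0, lr=0.1, steps=2000)
```

β₁ sets how quickly the running mean of gradients forgets old values, and β₂ does the same for the running mean of squared gradients. The learning rate scales every step. On the toy quadratic, `x_min` should end up very close to 3.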

Need For A Joint Strategy

Earlier approaches included Anchored Neighborhood Regression (ANR), which computes a compact representation of each patch over learned dictionaries using a set of pre-computed projection matrices (filters). SRCNN came next. It is built on a deep convolutional neural network (CNN) and learns the mapping through hidden convolutional layers.
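ANR's per-patch work at test time is light because the projection matrices are pre-computed. The sketch below represents each low-resolution patch directly by a feature vector, which is an assumption. It picks the dictionary anchor with the highest correlation (here, the largest absolute dot product, also an assumption) and multiplies the feature by that anchor's projection matrix. The helper names and the tiny hand-written anchors and matrices are hypothetical. In ANR the matrices come from training data rather than being written by hand.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def mat_vec(matrix, vector):
    return [dot(row, vector) for row in matrix]


def anr_upscale_patch(lr_feature, anchors, projections):
    """Find the anchor most correlated with the LR feature, then apply that
    anchor's pre-computed projection matrix to estimate the HR patch."""
    k = max(range(len(anchors)), key=lambda i: abs(dot(anchors[i], lr_feature)))
    return mat_vec(projections[k], lr_feature)


# Toy, hand-written data: two unit-norm anchors for 2-value LR features, and one
# 4x2 projection matrix per anchor (mapping a 2-value LR feature to a 4-pixel HR patch).
anchors = [[1.0, 0.0], [0.0, 1.0]]
projections = [
    [[1.0, 0.0], [1.0, 0.0], [0.5, 0.0], [0.5, 0.0]],
    [[0.0, 0.5], [0.0, 0.5], [0.0, 1.0], [0.0, 1.0]],
]
print(anr_upscale_patch([2.0, 0.5], anchors, projections))  # [2.0, 2.0, 1.0, 1.0]
```

SRCNN replaces this lookup-and-multiply step with convolutional layers whose filters are learned end to end.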

TENet, the researchers' network, aims to remove the roadblocks that arise during this processing. Advances in machine vision are widely welcomed, since their applications go beyond better selfies: they could also give self-driving vehicles much-needed accuracy and precision.

Because this new approach claims to handle three of the most important tasks (demosaicing, denoising and super-resolution) in one go, it may offer further insight into how such enhancement tasks can themselves be improved.
