A human eye has between six and seven million cone cells, each containing one of three colour-sensitive proteins known as opsins. When photons of light hit these opsins, the proteins change shape, triggering a cascade that produces electrical signals, which in turn carry the message to the brain for interpretation.

This process is highly complex, and building a machine that interprets visual input at a human level has always been a challenge. The motivation behind modern machine vision systems is to emulate human vision: recognising patterns and faces, and reconstructing the 3D world from 2D imagery.

Image processing and computer vision overlap considerably at the conceptual level, and the two terms are often misunderstood and used interchangeably. Here we give a brief overview of both and explain how they differ at a fundamental level.

Image Processing

Digital image processing was pioneered at NASA’s Jet Propulsion Laboratory in the 1960s, to convert analogue signals from the Ranger spacecraft into digital images with computer enhancement. Today, digital imaging has a wide range of applications, with particular emphasis on medicine. Well-known uses include computed axial tomography (CAT) scanning and ultrasound.

Image processing is mostly concerned with applying mathematical functions and transformations to images, without any intelligent inference being drawn from the image itself. An algorithm simply transforms the image, for example by smoothing, sharpening, or contrast stretching.
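As a concrete case of such a transformation, here is a minimal sketch of linear contrast stretching. It assumes a greyscale image stored as a plain list of rows; the function name `stretch_contrast` and the sample pixel values are made up for illustration. In practice a library such as NumPy or OpenCV would normally do this work.

```python
def stretch_contrast(image, out_min=0, out_max=255):
    """Linearly rescale pixel values so they span [out_min, out_max]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A perfectly flat image has no contrast to stretch; return a copy.
        return [row[:] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (p - lo) * scale) for p in row] for row in image]


# Hypothetical low-contrast image: values only cover 100..150.
image = [
    [100, 110, 120],
    [110, 120, 130],
    [120, 130, 150],
]
stretched = stretch_contrast(image)
for row in stretched:
    print(row)
```

The darkest input pixel maps to 0 and the brightest to 255, with everything in between scaled proportionally. No decision about the image content is made, which is what keeps this on the image processing side of the line.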

For a computer, an image is a two-dimensional signal made up of rows and columns of pixels. An input of one form can sometimes be transformed into another. For instance, magnetic resonance imaging (MRI) records the radio-frequency signal emitted by excited hydrogen nuclei and transforms it into a visual image.

Here’s an example of smoothing images with Python:

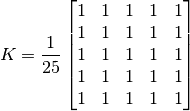

As with one-dimensional signals, images can be filtered with various low-pass filters (LPF), high-pass filters (HPF), and so on. An LPF helps in removing noise or blurring the image. An HPF helps in finding edges in an image.

These kinds of matrix transformations are prevalent in machine learning algorithms such as convolutional neural networks, where a filter is convolved over an image (another matrix of pixel values) to detect edges or colour intensities.
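The low-pass and high-pass filtering described above can be sketched by sliding a small kernel over the pixel matrix. This is an illustrative pure-Python version, not the code from the article's screenshot. The helper `apply_kernel`, the two kernels (a 3x3 box blur and a Laplacian), and the test image are assumptions chosen for clarity.

```python
def apply_kernel(image, kernel):
    """Slide a 3x3 kernel over the image (interior pixels only, no padding).

    Strictly this is cross-correlation; for the symmetric kernels used
    here it gives the same result as convolution.
    """
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(1, rows - 1):
        out_row = []
        for c in range(1, cols - 1):
            acc = 0.0
            for i in range(3):
                for j in range(3):
                    acc += kernel[i][j] * image[r + i - 1][c + j - 1]
            out_row.append(acc)
        out.append(out_row)
    return out


# Low-pass: a box blur that averages each pixel with its 8 neighbours.
BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]

# High-pass: a Laplacian kernel that responds where intensity changes.
LAPLACIAN = [
    [0, -1, 0],
    [-1, 4, -1],
    [0, -1, 0],
]

# Hypothetical 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 90, 90, 90] for _ in range(5)]

blurred = apply_kernel(image, BOX_BLUR)
edges = apply_kernel(image, LAPLACIAN)
print(blurred[1])
print(edges[1])
```

The blurred row ramps gradually from dark to bright, while the Laplacian output is non-zero only next to the edge and zero in the flat region. A convolutional neural network uses the same sliding operation, except that the kernel values are learned rather than chosen by hand.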

Some techniques which are used in digital image processing include:

- Hidden Markov models

- Image editing and restoration

- Linear filtering and bilateral filtering

- Neural networks

Computer Vision

Computer vision builds on image processing by adding the techniques of machine learning, which it applies to recognise patterns and interpret images. Much like visual reasoning in human vision, it can distinguish between objects, classify them, sort them according to their size, and so forth. Computer vision, like image processing, takes images as input, but its output is information about the scene, such as object size or colour intensity.
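To make the difference in output concrete, the sketch below takes an image and returns information about it (how many bright objects there are and how big each one is) rather than another image. It is a deliberately simple, rule-based stand-in: it uses thresholding and flood fill, not machine learning. The function `object_sizes`, the threshold of 128, and the "large"/"small" cut-off are all assumptions made for illustration.

```python
def object_sizes(image, threshold=128):
    """Return the pixel count of each 4-connected bright region."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    sizes = []
    for r in range(rows):
        for c in range(cols):
            if image[r][c] < threshold or seen[r][c]:
                continue
            # Flood-fill one region using an explicit stack.
            stack = [(r, c)]
            seen[r][c] = True
            size = 0
            while stack:
                y, x = stack.pop()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            sizes.append(size)
    return sizes


# Hypothetical image containing a 2x2 blob and a 3-pixel vertical bar.
image = [
    [200, 200, 0, 0, 0],
    [200, 200, 0, 0, 255],
    [0, 0, 0, 0, 255],
    [0, 0, 0, 0, 255],
]
sizes = object_sizes(image)
# A hand-written rule standing in for a learned classifier.
labels = ["large" if s >= 4 else "small" for s in sizes]
print(sizes, labels)
```

In a real computer vision system, the hand-written rule on the last lines would typically be replaced by a trained model, but the shape of the pipeline is the same: pixels in, a description of the scene out.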

Below are the components of a standard machine vision system:

- Camera

- Lighting devices

- Lens

- Frame grabber

- Image processing software

- Machine learning algorithms for pattern recognition

- Display screen or a robotic arm to carry out an instruction obtained from image interpretation

For instance, a video camera mounted on a driverless car has to detect people in front of it and distinguish them from vehicles and other distinctive features. Or, we may want to measure the distance covered by a tennis player in a game.

In both cases the scene changes from frame to frame, so temporal information plays a major role in computer vision, much as it does in our own way of understanding the world.
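The tennis example shows why the sequence of frames matters: the distance covered can only be computed from positions over time. Below is a minimal sketch that assumes a tracker (not shown) has already produced one court position per frame, in metres. The function `distance_covered` and the sample track are hypothetical.

```python
import math


def distance_covered(positions):
    """Sum the straight-line distance between consecutive positions."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


# Hypothetical (x, y) court positions in metres, one per sampled frame.
track = [(0.0, 0.0), (3.0, 4.0), (3.0, 10.0), (0.0, 14.0)]
total = distance_covered(track)
print(f"Distance covered: {total:.1f} m")
```

A single frame would yield only one position and therefore no distance at all. The hard part in a real system is producing the track reliably, which is where detection and tracking models come in.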

The ultimate goal here is to use computers to emulate human vision, including learning and being able to make inferences and take actions based on visual inputs.

Conclusion

Image processing is a subset of computer vision. A computer vision system uses image processing algorithms to try to emulate vision at a human level. For example, if the goal is to enhance an image for later use, this may be called image processing. If the goal is to recognise objects, for example to detect defects or to support automatic driving, it can be called computer vision.