Data cleaning is one of the most hectic and time-consuming tasks in Data Science. There is no easy template that facilitates the cleaning of data as each data set is unique in its own way consisting of noises that need to be carefully filtered out.

This article will walk you through this laborious process in the simplest way possible explaining how to perform Exploratory Data Analysis and clean the data.

MachineHack is back again with an exciting hackathon for all data science enthusiasts. This new hackathon, in partnership with Imarticus Learning, challenges the data science community to predict the resale value of a car from various features. Predicting The Costs Of Used Cars Hackathon consists of data collected from various sources across India.

No surprises here, we will use the dataset from the above-mentioned hackathon to study the process of exploring and cleaning data.

Head to MachineHack, sign up and start the hackathon to get the dataset. Once you have the dataset follow along with the article.

Exploratory Data Analysis

Exploratory Data Analysis or EDA is the first and foremost of all tasks that a dataset goes through. EDA lets us understand the data and thus helping us to prepare it for the upcoming tasks.

Some of the key steps in EDA are identifying the features, a number of observations, checking for null values or empty cells etc.

Importing the dataset

import pandas as pd

training_set = pd.read_excel("Data_Train.xlsx")

test_set = pd.read_excel("Data_Test.xlsx")

We now have two data frames, one consisting of the data to be trained and the other for predicting the target value which in this case is the price of the car.

Identifying the number of features or columns

#checking the number of features in the Datasets

print("\n\nNumber of features in the datasets :\n",'#' * 40)

print("\nTraining Set : \n",'-' * 20, len(training_set.columns))

print("\nTest Set : \n",'-' * 20,len(test_set.columns))

Identifying the features or columns

#checking the features in the Datasets

print("\n\nFeatures in the datasets :\n",'#' * 40)

print("\nTraining Set : \n",'-' * 20, list(training_set.columns))

print("\nTest Set : \n",'-' * 20,list(test_set.columns))

Identifying the data types of features

#checking the data types of features

print("\n\nDatatypes of features in the datasets :\n",'#' * 40)

print("\nTraining Set : \n",'-' * 20,"\n", training_set.dtypes)

print("\nTest Set : \n",'-' * 20,"\n",test_set.dtypes)

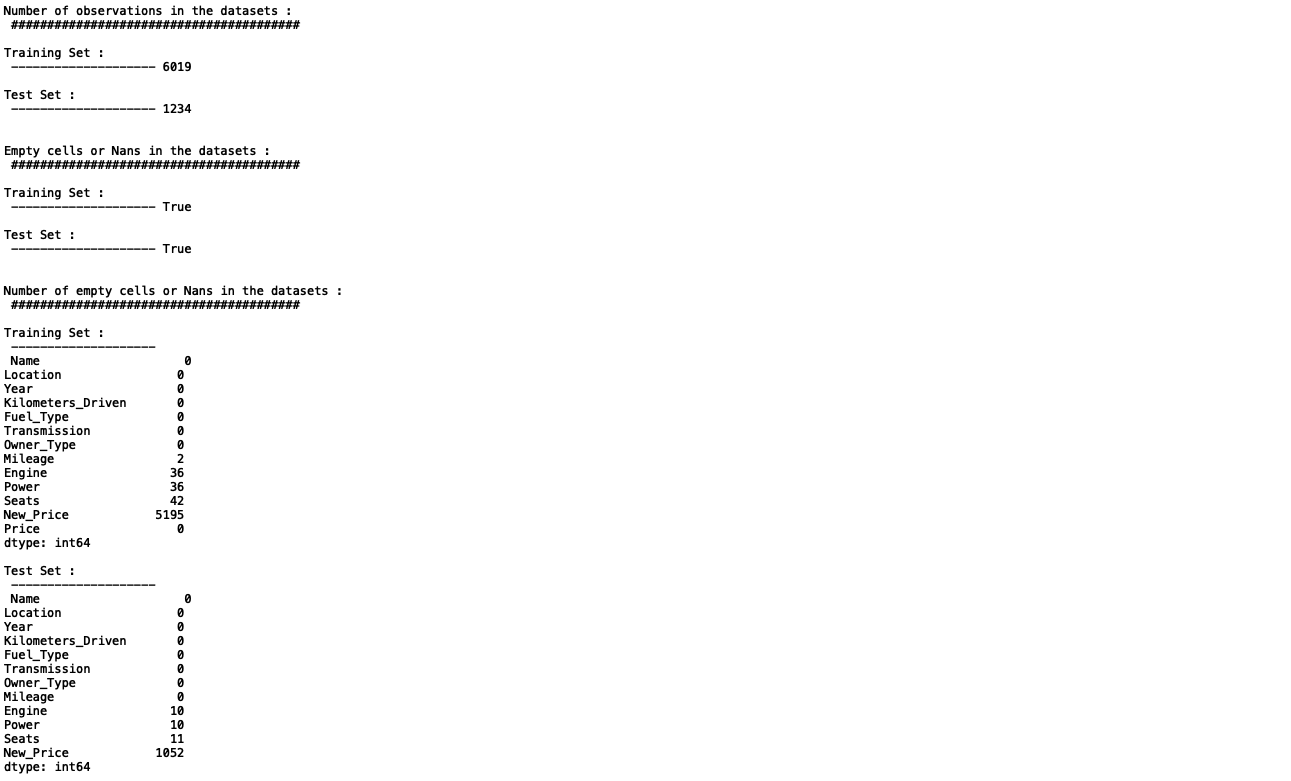

Identifying the number of observations

#checking the number of rows

print("\n\nNumber of observations in the datasets :\n",'#' * 40)

print("\nTraining Set : \n",'-' * 20,len(training_set))

print("\nTest Set : \n",'-' * 20,len(test_set))

Checking if the dataset has empty cells or samples

#checking for NaNs or empty cells

print("\n\nEmpty cells or Nans in the datasets :\n",'#' * 40)

print("\nTraining Set : \n",'-' * 20,training_set.isnull().values.any())

print("\nTest Set : \n",'-' * 20,test_set.isnull().values.any())

Identifying the number of empty cells by features or columns

#checking for NaNs or empty cells by features

print("\n\nNumber of empty cells or Nans in the datasets :\n",'#' * 40)

print("\nTraining Set : \n",'-' * 20,"\n", training_set.isnull().sum())

print("\nTest Set : \n",'-' * 20,"\n",test_set.isnull().sum())

All the above code blocks can be used as templates for any datasets.

Exploring Categorical features

The below code block explores the categorical features, identifying the unique categories in both the test and training_sets combined.

#combining training set and test set data

all_brands = list(training_set.Name) + list(test_set.Name)

all_locations = list(training_set.Location) + list(test_set.Location)

all_fuel_types = list(training_set.Fuel_Type) + list(test_set.Fuel_Type)

all_transmissions = list(training_set.Transmission) + list(test_set.Transmission)

all_owner_types = list(training_set.Owner_Type) + list(test_set.Owner_Type)

print("\nNumber Of Unique Values In Name : \n ", len(set(all_brands)))

#print("\nThe Unique Values In Name : \n ", set(all_brands))

print("\nNumber Of Unique Values In Location : \n ", len(set(all_locations)))

print("\nThe Unique Values In Location : \n ", set(all_locations) )

print("\nNumber Of Unique Values In Fuel_Type : \n ", len(set(all_fuel_types)))

print("\nThe Unique Values In Fuel_Type : \n ", set(all_fuel_types) )

print("\nNumber Of Unique Values In Transmission : \n ", len(set(all_transmissions)))

print("\nThe Unique Values In Transmission : \n ", set(all_transmissions) )

print("\nNumber Of Unique Values In Owner_Type : \n ", len(set(all_owner_types)))

print("\nThe Unique Values In Owner_Type : \n '' ,set(all_owner_types) )

On running all the above code blocks at once you will get this long informatory output in your console just like the ones shown below :

We now know a little about the dataset we have in hand, we will now proceed to clean the data.

Data Cleaning

In this stage, we will remove unwanted data or noises from the data set to prepare it for the data preprocessing stage. The goal is to clean the data in such a way that all data can be successfully converted into a numerical type in the preprocessing stage.

By performing Exploratory data analysis, we found out that the majority of the features in the data set are objects. These features contain multiple strings of data in which most of them are useless or insignificant for a predictive model. We will traverse through each of those features cleaning one by one for both the training set and the test_set given.

Feature/Column : Name

We will start with the column “Name”. By going through the data, we can see that each cell in the column consists of multiple words that provide insights about both the brand and the model of the car. We can thus simplify the dataset by splitting this feature into two different features Brand and Model. We will then replace the “Name ” feature with the 2 derived features.

Let’s have a look at the code :

#"""Splitting name into 2 features, brand and model"""

#Training Set

names = list(training_set.Name)

brand = []

model = []

for i in range(len(names)):

try:

brand.append(names[i].split(" ")[0].strip())

try:

model.append(" ".join(names[i].split(" ")[1:]).strip())

except:

pass

except:

print("ERR ! - ", names[i], "@" , i)

training_set["Brand"] = brand

training_set["Model"] = model

training_set.drop(labels = ['Name'], axis = 1, inplace = True)

#Test Set

names = list(test_set.Name)

brand = []

model = []

for i in range(len(names)):

try:

brand.append(names[i].split(" ")[0].strip())

try:

model.append(" ".join(names[i].split(" ")[1:]).strip())

except:

pass

except:

print("ERR ! - ", names[i], "@" , i)

test_set["Brand"] = brand

test_set["Model"] = model

test_set.drop(labels = ['Name'], axis = 1, inplace = True)

Feature/Column : Mileage

In the given dataset, you will find that each of the values in the column ‘Mileage’ , the unit is also appended to the value. The unit makes no sense to the machines or the model. So we will remove it and convert the feature to float type with the following code.

#""" Removing the texts and converting to integer''"""

# Training Set

mileage = list(training_set.Mileage)

for i in range(len(mileage)):

try :

mileage[i] = float(mileage[i].split(" ")[0].strip())

except:

mileage[i] = np.nan

training_set['Mileage'] = mileage

Repeat the above code block for the test set by replacing all training_set with test_set.

Feature/Column : Engine

Similar to the column Mileage, Engine column also has the units in its values. We will remove the units and will convert the feature to int type as we can see all the values are integers.

#""" Removing the texts and converting to integer''"""

# Training Set

engine = list(training_set.Engine)

for i in range(len(engine)):

try :

engine[i] = int(engine[i].split(" ")[0].strip())

except:

engine[i] = np.nan

training_set['Engine'] = engine

Repeat the above code block for the test set by replacing all training_set with test_set.

Feature/Column : Power

We will do the same for the feature Power.

#""" Removing the texts and converting to integer"""

# Training Set

power = list(training_set.Power)

for i in range(len(power)):

try :

power[i] = float(power[i].split(" ")[0].strip())

except:

power[i] = np.nan

training_set['Power'] = power

Repeat the above code block for the test set by replacing all training_set with test_set.

Feature/Column : New_Price

Since this feature has a huge number of null values compared to the entire dataset, we will choose to remove the feature itself from the datasets. (It is also possible to fill the nulls with zeros or unit values to check its significance in predictions.)

training_set.drop(labels = ['New_Price'], axis = 1, inplace = True)

test_set.drop(labels = ['New_Price'], axis = 1, inplace = True)

Reordering The Dataset

We have now cleaned the dataset, Lets reorder the columns and have a look at the new and cleaner dataset.

#Re-ordering the columns

training_set = training_set[['Brand', 'Model', 'Location', 'Year', 'Kilometers_Driven', 'Fuel_Type', 'Transmission',

'Owner_Type', 'Mileage', 'Engine', 'Power', 'Seats', 'Price']]

test_set = test_set[['Brand', 'Model', 'Location', 'Year', 'Kilometers_Driven', 'Fuel_Type', 'Transmission',

'Owner_Type', 'Mileage', 'Engine', 'Power', 'Seats']]

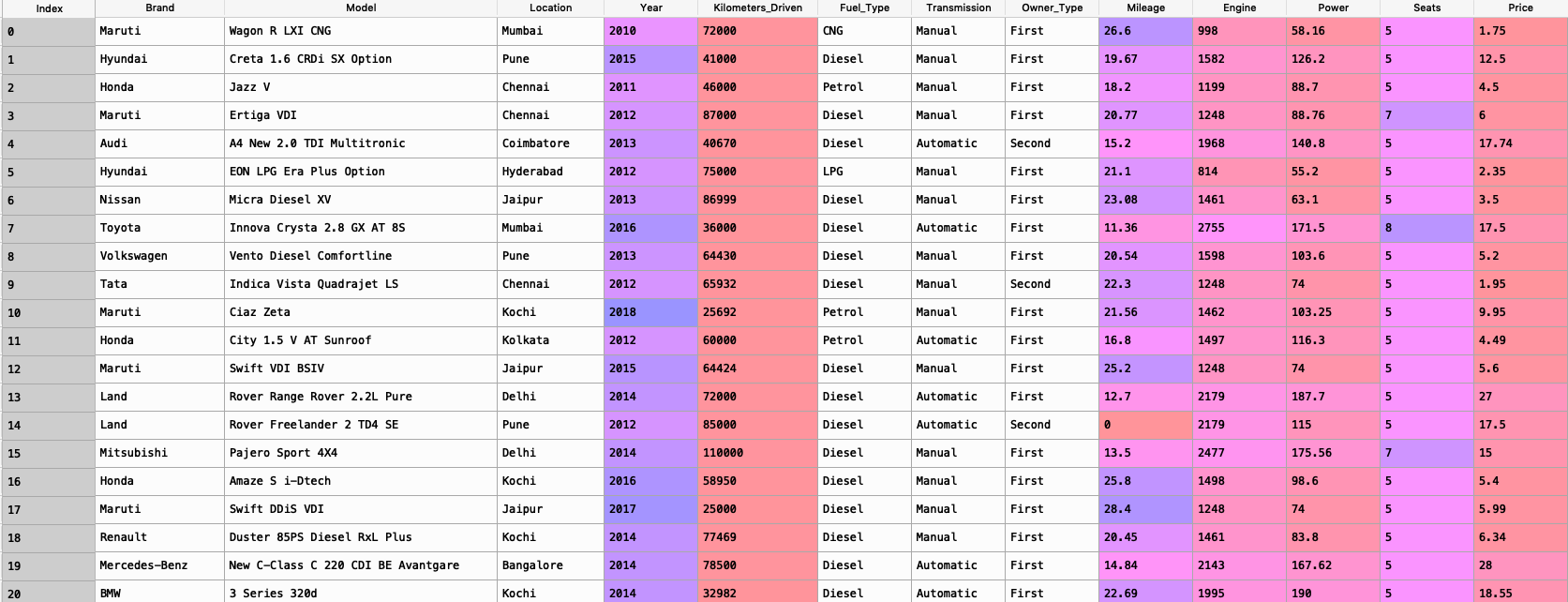

Original Training Set :

Cleaned Training Set :

The entire cleaning process discussed above can be done by calling the below method:

def restructure(data):

names = list(data.Name)

brand = []

model = []

for i in range(len(names)):

try:

brand.append(names[i].split(" ")[0])

try:

model.append(" ".join(names[i].split(" ")[1:]).strip())

except:

pass

except:

print("ERR ! - ", names[i], "@" , i)

mileage = list(data.Mileage)

for i in range(len(mileage)):

try :

mileage[i] = float(mileage[i].split(" ")[0].strip())

except:

mileage[i] = np.nan

engine = list(data.Engine)

for i in range(len(engine)):

try :

engine[i] = int(engine[i].split(" ")[0].strip())

except:

engine[i] = np.nan

power = list(data.Power)

for i in range(len(power)):

try :

power[i] = float(power[i].split(" ")[0].strip())

except:

power[i] = np.nan

data['New_Price'].fillna(0, inplace = True)

newp = list(data['New_Price'])

for i in range(len(newp)):

if newp[i] == 0:

newp[i] = float(newp[i])

continue

elif 'Cr' in newp[i]:

newp[i] = float(newp[i].split()[0].strip()) * 100

elif 'Lakh' in newp[i]:

newp[i] = float(newp[i].split()[0].strip())

#Re-ordering the columns

restructured = pd.DataFrame({'Brand': brand,

'Model':model,

'Location': data['Location'],

'Year':data['Year'] ,

'Kilometers_Driven':data['Kilometers_Driven'],

'Fuel_Type':data['Fuel_Type'],

'Transmission':data['Transmission'],

'Owner_Type':data['Owner_Type'],

'Mileage':mileage,

'Engine':engine,

'Power':power,

'Seats':data['Seats'],

'New_Price':newp

})

if 'Price' in data.columns:

restructured['Price'] = data['Price']

return restructured

else:

return restructured

We have now successfully cleaned our dataset of noises. The dataset still consists of empty data points and categories that need to be encoded which we will cover in the Data Preprocessing and Modelling part.