AI accelerators have been at the forefront of the AI race. Two factors stand out behind the rapid progress of AI over the last few years. First, the large amount of data now stored digitally makes it possible to train neural networks in ways that were not feasible a decade ago.

Second, training neural networks requires a great deal of processing power, more than traditional processors can deliver. Even a simple task such as training an image recognizer for cats can take days or weeks on traditional CPUs.

For AI to move beyond where it is now, developers need the freedom to train neural nets quickly, make mistakes, learn from those mistakes and polish their algorithms. For that, processing power has to increase. That is exactly what most tech giants are after, not just traditional chip makers.

Here we list seven chips (in alphabetical order) designed especially for AI that made headlines this year.

AMD Radeon Instinct

Radeon Instinct is AMD’s brand of deep-learning-oriented GPUs. It replaced AMD’s FirePro S brand in 2016. Unlike the Radeon brand of mainstream consumer and gaming products, Radeon Instinct products are intended to accelerate deep learning, artificial neural network, and high-performance computing/GPGPU applications.

Apple A11 Bionic Neural Engine

The Apple A11 Bionic is a 64-bit ARM-based system on a chip (SoC), designed by Apple Inc. and manufactured by TSMC. It first appeared in the iPhone 8, iPhone 8 Plus, and iPhone X. The A11 includes dedicated neural network hardware that Apple calls a “Neural Engine”. This hardware can perform up to 600 billion operations per second and is used for Face ID, Animoji and other machine learning tasks. The Neural Engine lets Apple run neural network and machine learning workloads in a more energy-efficient manner than on either the main CPU or the GPU.

Google Tensor Processing Unit

A tensor processing unit (TPU) is an application-specific integrated circuit (ASIC) developed by Google specifically for machine learning. Compared to a graphics processing unit, it is designed for a high volume of low-precision computation (e.g. as little as 8-bit precision) with higher IOPS per watt, and it lacks hardware for rasterisation and texture mapping. The chip has been designed specifically for Google’s TensorFlow framework. However, Google still uses CPUs and GPUs for other types of machine learning. AI accelerator designs are also appearing from other vendors, aimed at the embedded and robotics markets.
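
To make the idea of low-precision computation concrete, here is a minimal sketch of 8-bit arithmetic in plain Python. It uses a simple symmetric quantization scheme chosen for illustration; it is not a description of how the TPU itself quantizes values, and the function names and sample numbers are hypothetical.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers using one shared scale.

    Symmetric scheme picked for illustration only (an assumption,
    not the TPU's documented method).
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def int8_dot(a, b):
    """Dot product where the multiply-accumulate runs on integers only."""
    qa, scale_a = quantize_int8(a)
    qb, scale_b = quantize_int8(b)
    accumulator = sum(x * y for x, y in zip(qa, qb))  # integer arithmetic
    return accumulator * scale_a * scale_b  # rescale back to float once


# Hypothetical sample data.
weights = [0.12, -0.5, 0.33, 0.9]
inputs = [1.0, 0.25, -0.75, 0.4]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = int8_dot(weights, inputs)
print(f"float result: {exact:.4f}, int8 result: {approx:.4f}")
```

The 8-bit result is close to the floating-point one but not identical. That small loss of accuracy is what a low-precision design trades for cheaper arithmetic.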

Huawei Kirin 970

Kirin 970 is powered by an 8-core CPU and a new-generation 12-core GPU. Built on an advanced 10nm process, the chipset packs 5.5 billion transistors into an area of only one cm². The flagship Kirin 970 is Huawei’s first mobile AI computing platform featuring a dedicated Neural Processing Unit (NPU). According to Huawei, compared to a quad-core Cortex-A73 CPU cluster, the Kirin 970’s heterogeneous computing architecture delivers up to 25x the performance with 50x greater efficiency.
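
The two quoted ratios can be combined with a little arithmetic. The sketch below assumes that “efficiency” means performance per watt, which the text does not state; under that assumption it works out the implied relative power draw and energy per task. The variable names are illustrative.

```python
# Vendor-quoted ratios from the text, relative to a quad-core Cortex-A73 cluster.
PERFORMANCE_RATIO = 25.0
EFFICIENCY_RATIO = 50.0  # assumed here to mean performance per watt

# power = performance / (performance per watt)
relative_power = PERFORMANCE_RATIO / EFFICIENCY_RATIO

# energy per task = power * time, and time scales as 1 / performance
relative_energy_per_task = relative_power / PERFORMANCE_RATIO

print(f"relative power draw: {relative_power}x")
print(f"relative energy per task: {relative_energy_per_task}x")
```

Read this way, the figures suggest the same AI task would finish much faster while drawing about half the power, but that interpretation rests entirely on the performance-per-watt assumption.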

IBM Power9

Recently launched by IBM, Power9 is a chip with a new systems architecture that is optimized for the accelerators used in machine learning. Intel makes Xeon CPUs and Nervana accelerators, and NVIDIA makes Tesla accelerators. IBM’s Power9 is pitched as the Swiss Army knife of ML acceleration, supporting a very large amount of I/O and bandwidth, reportedly 10x that of anything else available today.

Intel Nervana

The Nervana ‘Neural Network Processor’ uses a parallel, clustered computing approach and is built much like a conventional GPU. It has 32 GB of HBM2 memory arranged in four 8 GB stacks, all connected to 12 processing clusters that each contain further cores (the exact count is unknown at this point). Combined memory bandwidth reaches 8 terabits per second.
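
As a quick sanity check on those memory figures, the following sketch converts them into more familiar units. It assumes decimal units (1 terabit = 1000 gigabits) and that the quoted bandwidth is the aggregate across all stacks; neither is confirmed by the text.

```python
# Figures quoted in the text.
STACKS = 4
GB_PER_STACK = 8
BANDWIDTH_TBIT_PER_S = 8

# Assumption: decimal units, 1 terabit = 1000 gigabits, 8 bits per byte.
total_memory_gb = STACKS * GB_PER_STACK
bandwidth_gbyte_per_s = BANDWIDTH_TBIT_PER_S * 1000 / 8

# Time to stream the entire memory once at the quoted peak rate.
full_sweep_ms = total_memory_gb * 1000 / bandwidth_gbyte_per_s

print(f"total memory: {total_memory_gb} GB")
print(f"bandwidth: {bandwidth_gbyte_per_s:.0f} GB/s")
print(f"one full pass over memory: {full_sweep_ms:.0f} ms")
```

So 8 terabits per second is roughly 1 terabyte per second, enough in principle to read all 32 GB in a few tens of milliseconds at peak rate.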

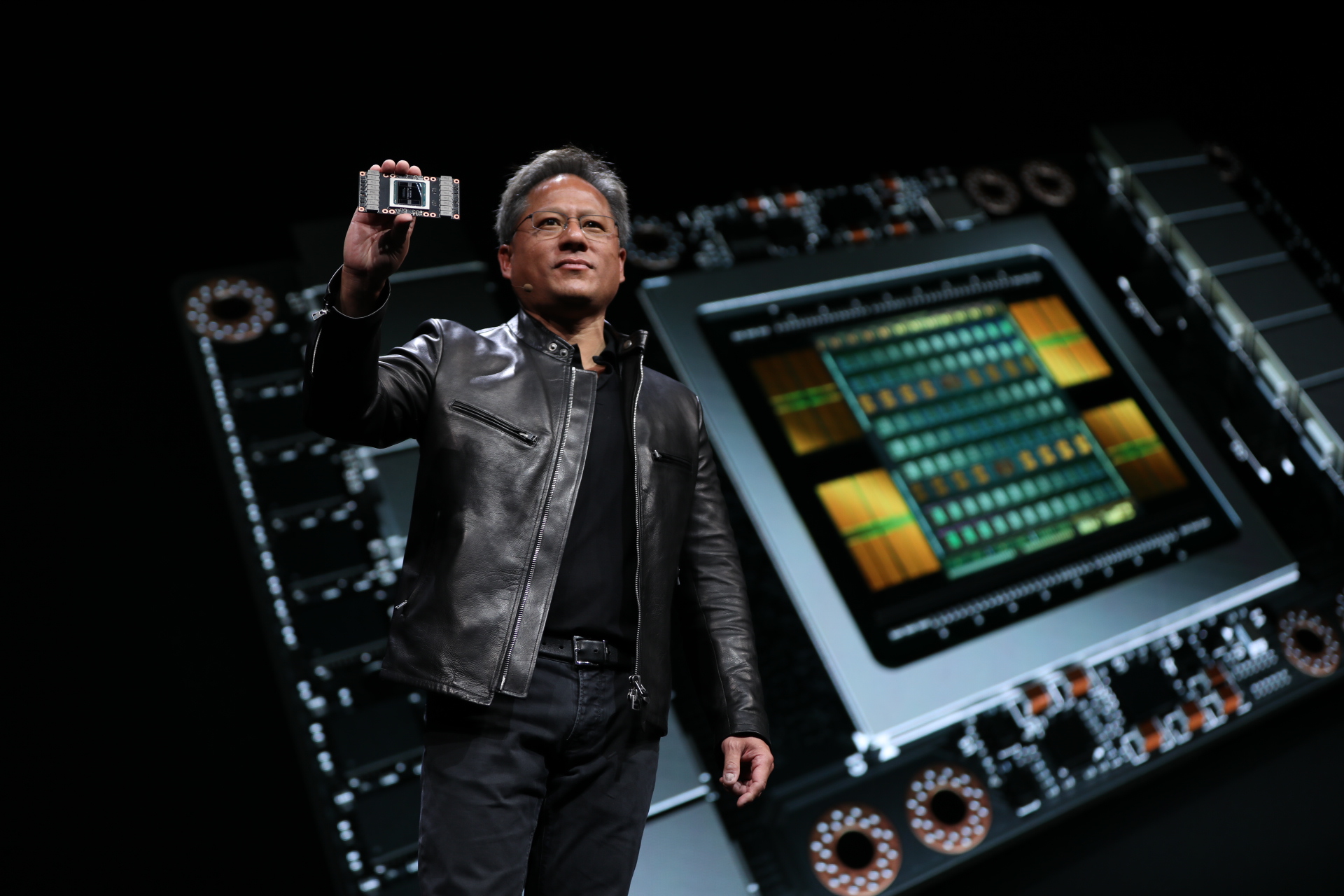

Nvidia Tesla V100

NVIDIA describes the Tesla V100 as the most advanced data center GPU ever built to accelerate AI, HPC, and graphics. Powered by NVIDIA Volta, the company’s latest GPU architecture, the Tesla V100 is claimed to offer the performance of 100 CPUs in a single GPU, enabling data scientists, researchers, and engineers to tackle challenges that were once impossible.
