If the future of deep learning depends on finding good data, then these popular, high-quality datasets will definitely come in handy for accelerating AI research. While we have seen a lot of breakthroughs in the field of AI, publicly available datasets, especially for video, text, sentiment analysis and natural language understanding, have so far been few.

To fully exploit the possibilities of deep learning, quality data is a must, emphasizes a report from Capgemini. When data is used for Machine Learning and Artificial Intelligence, one has to go beyond the standard criteria of data quality. Stressing the importance of quality data for AI and ML, John Paul Mueller and Luca Massaron from Capgemini pointed out that with traditional data analysis, when bad data is discovered in a dataset, one can simply exclude it and start over. With AI, this is more problematic: when bad data is detected, one has to restart the whole learning process from the beginning, which is time- and cost-intensive.

Even though open-source tools such as TensorFlow, Spark and Gluon, along with massive computational power, are triggering an AI revolution, quality datasets are hard to come by. Because data collection and labeling are the hardest part of AI research, a ready-made quality dataset is valuable: it can be used for validation and even as the basis for building a more tailored solution.

Analytics India Magazine lists the top 10 quality datasets that can be used for benchmarking deep learning algorithms:

MNIST: Let’s start with MNIST, one of the most popular datasets among deep learning enthusiasts, put together by Yann LeCun together with Corinna Cortes of Google Labs and Christopher Burges of Microsoft Research. The MNIST database of handwritten digits has a training set of 60,000 examples and a test set of 10,000 examples. It was culled from a larger set available from NIST Special Database 19, which contains digits as well as uppercase and lowercase handwritten letters. It is usually pegged as a good database to start with for those who want to try learning techniques and pattern recognition methods on real-world data while spending minimal effort on preprocessing and formatting. According to this paper, the MNIST dataset has become a standard benchmark for learning, classification and computer vision systems. Four files are available on the site: training set images, training set labels, test set images and test set labels.
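The four MNIST files use a simple binary layout known as IDX: a big-endian header made of two zero bytes, a data-type code (0x08 for unsigned bytes) and the number of dimensions, followed by one 32-bit size per dimension and then the raw pixel or label bytes. Below is a minimal standard-library sketch of a reader; the function names `read_idx` and `image_rows` are our own, not part of any official MNIST tooling.

```python
import gzip
import struct


def read_idx(path):
    """Read an unsigned-byte IDX file such as the MNIST image and label files.

    Accepts both gzip-compressed (.gz) and uncompressed files and returns
    (dims, data): the list of dimension sizes and the raw payload bytes.
    """
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rb") as f:
        # Header: two zero bytes, a type code (0x08 = unsigned byte), and
        # the number of dimensions (3 for images, 1 for labels).
        zero, type_code, ndim = struct.unpack(">HBB", f.read(4))
        if zero != 0 or type_code != 0x08:
            raise ValueError("not an unsigned-byte IDX file")
        # One big-endian 32-bit size per dimension.
        dims = list(struct.unpack(">" + "I" * ndim, f.read(4 * ndim)))
        data = f.read()
    expected = 1
    for size in dims:
        expected *= size
    if len(data) != expected:
        raise ValueError("payload size does not match the header")
    return dims, data


def image_rows(dims, data, index):
    """Return image `index` from an image file as a list of pixel rows (0-255)."""
    _, rows, cols = dims
    start = index * rows * cols
    return [list(data[start + r * cols:start + (r + 1) * cols]) for r in range(rows)]
```

For example, `read_idx("train-images-idx3-ubyte.gz")` should report dimensions `[60000, 28, 28]`, while the matching label file `train-labels-idx1-ubyte.gz` gives `[60000]`, with one byte (0 to 9) per image.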

EMNIST: An extension of MNIST to handwritten letters. MNIST has become the go-to dataset for people starting out in deep learning and machine learning research, and Extended MNIST (EMNIST) is a variant derived from the same NIST data that extends it to handwritten letters. EMNIST images take the “Resized and Resampled” format, as in (e) of the figure. According to Microsoft Student Partner Chih Han Chen, this dataset is designed as a more advanced replacement for MNIST, posing a harder challenge for existing neural networks and systems. The dataset is divided into several splits: some cover digits only, some letters only, and some digits together with uppercase and lowercase letters. Check out the download link.
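EMNIST images share MNIST's 28x28 layout, so converting a larger character scan into that format broadly means cropping the character, centering it in a square frame, adding a small border and downsampling. The sketch below illustrates those steps on a grayscale image stored as a list of rows. It is a simplified illustration under our own assumptions (a 2-pixel border and nearest-neighbour resampling), not the EMNIST authors' conversion code, which differs in details such as filtering and interpolation; `to_mnist_format` and its helpers are hypothetical names.

```python
def bounding_box(img):
    """Return (top, bottom, left, right) of the non-zero pixels, inclusive."""
    rows = [r for r, row in enumerate(img) if any(row)]
    if not rows:
        raise ValueError("blank image")
    cols = [c for c in range(len(img[0])) if any(row[c] for row in img)]
    return rows[0], rows[-1], cols[0], cols[-1]


def resize_nearest(img, size):
    """Resample a square image to size x size by nearest-neighbour lookup."""
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)] for r in range(size)]


def to_mnist_format(img, out_size=28, pad=2):
    # 1. Crop the region of interest around the character.
    top, bottom, left, right = bounding_box(img)
    roi = [row[left:right + 1] for row in img[top:bottom + 1]]
    h, w = len(roi), len(roi[0])
    # 2. Center it in a square frame so the aspect ratio is preserved.
    side = max(h, w)
    off_r, off_c = (side - h) // 2, (side - w) // 2
    square = [[0] * side for _ in range(side)]
    for r in range(h):
        for c in range(w):
            square[off_r + r][off_c + c] = roi[r][c]
    # 3. Add a blank border of `pad` pixels on every side.
    wide = side + 2 * pad
    padded = (
        [[0] * wide for _ in range(pad)]
        + [[0] * pad + row + [0] * pad for row in square]
        + [[0] * wide for _ in range(pad)]
    )
    # 4. Downsample to the target resolution.
    return resize_nearest(padded, out_size)
```

Centering in a square frame before resizing keeps tall, narrow characters such as "l" or "1" from being stretched sideways.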

CLEVR: The Diagnostic Dataset for Compositional Language and Elementary Visual Reasoning tests a range of visual reasoning abilities. Led by Stanford’s Fei-Fei Li and developed during a summer internship at Facebook AI Research, it was built specifically to enable detailed analysis of how well Visual Question Answering (VQA) systems perform visual reasoning, and more broadly to support research on machines that can see and reason about their surroundings. The dataset contains minimal biases and has detailed annotations describing the kind of reasoning each question requires. CLEVR contains 100k rendered images and about one million automatically generated questions, of which 853k are unique: a training set of 70,000 images and 699,989 questions, a validation set of 15,000 images and 149,991 questions, and a test set of 15,000 images and 149,988 questions. Check out the download link.

JFLEG: This new corpus, introduced in the paper “JFLEG: A Fluency Corpus and Benchmark for Grammatical Error Correction”, was developed for evaluating grammatical error correction (GEC). Unlike other corpora, it represents a broad range of language proficiency levels and uses holistic fluency edits that not only correct grammatical errors but also make the original text sound more native. The paper describes the types of corrections made and benchmarks four leading GEC systems, identifying where they do well and how they can improve. The dataset is pegged as the new gold standard for properly assessing the current state of GEC and for building machines that can automatically correct grammar. While GEC systems still have a long way to go before matching the proficiency of a human proofreader, neural methods can help improve them and set a benchmark with better edits. Check out the download link.

Google’s Open Images: Last year, the Mountain View search giant unveiled its Open Images dataset, which contains a whopping “9 million URLs to images that have been annotated with labels spanning over 6,000 categories”. This is probably the largest dataset of its kind available for training, and, as the blog notes, it was designed to be as practical as possible: the labels cover more real-life entities than the 1,000 ImageNet classes, there are enough images to train a deep neural network from scratch, and the images are listed as having a Creative Commons Attribution license. Released as a collaboration between Google, CMU and Cornell University, the dataset is an extremely useful tool for the AI community. Check out the download link.

STL-10 dataset: This is an image recognition dataset inspired by CIFAR-10, with some improvements. With 100,000 unlabeled images and only 500 labeled training images per class (across 10 classes), it is well suited to developing unsupervised feature learning, deep learning and self-taught learning algorithms. Unlike CIFAR-10, whose images are 32x32 pixels, STL-10 images are 96x96 pixels, and this higher resolution makes it a challenging benchmark for developing more scalable unsupervised learning methods. Check out the download link here.
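STL-10 ships its images as raw unsigned-byte binary files. As we understand the dataset's README, each image occupies 3 x 96 x 96 bytes, stored one colour channel at a time (red, then green, then blue) in column-major order, and each label file holds one byte per image with values from 1 to 10. The sketch below decodes that layout with the standard library only; `decode_stl10_images` and `decode_stl10_labels` are hypothetical names, and file names such as `train_X.bin` and `train_y.bin` are assumed from the official archive.

```python
SIDE = 96                       # STL-10 images are 96x96 pixels
CHANNELS = 3                    # stored as separate R, G, B planes
PLANE = SIDE * SIDE             # bytes per colour plane
IMAGE_BYTES = CHANNELS * PLANE  # 27,648 bytes per image


def decode_stl10_images(raw):
    """Turn raw image bytes into images indexed as img[row][col] -> (r, g, b)."""
    if len(raw) % IMAGE_BYTES:
        raise ValueError("byte count is not a whole number of images")
    images = []
    for base in range(0, len(raw), IMAGE_BYTES):
        # Column-major storage: within a plane, offset = col * SIDE + row.
        img = [
            [
                tuple(raw[base + ch * PLANE + col * SIDE + row] for ch in range(CHANNELS))
                for col in range(SIDE)
            ]
            for row in range(SIDE)
        ]
        images.append(img)
    return images


def decode_stl10_labels(raw):
    """Convert label bytes in the range 1..10 to 0-based class indices."""
    labels = list(raw)
    if any(not 1 <= y <= 10 for y in labels):
        raise ValueError("STL-10 labels are expected to lie in 1..10")
    return [y - 1 for y in labels]
```

Usage would look like `decode_stl10_images(open("train_X.bin", "rb").read())`. Pure Python is fine for the 5,000 labeled training images but slow for the 100,000-image unlabeled split, where a vectorized NumPy equivalent such as `np.fromfile(path, dtype=np.uint8).reshape(-1, 3, 96, 96).transpose(0, 3, 2, 1)` is the more practical choice.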

Uber 2B Trip Dataset: This repository contains data on over 4.5 million Uber pickups in NYC from April to September 2014, and 14.3 million more Uber pickups from January to June 2015. It also includes trip-level data on 10 other for-hire vehicle (FHV) companies, as well as aggregated data for 329 FHV companies. Interestingly, this data has already been used by Nate Silver’s FiveThirtyEight to uncover NYC traffic woes triggered by Uber and to map the areas the ride-hailing company serves most. Check out the download link here.

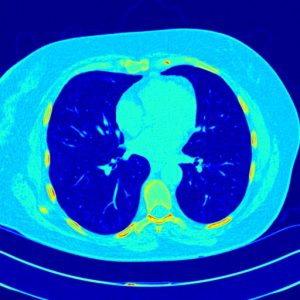
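As an illustration of the kind of analysis this data supports, the sketch below counts pickups by hour of day. It assumes the 2014 pickup files are CSVs with a `Date/Time` column formatted like `4/1/2014 0:11:00` alongside `Lat`, `Lon` and `Base` columns, and a file name such as `uber-raw-data-apr14.csv`; these details should be checked against the actual download, and `pickups_by_hour` is a hypothetical helper.

```python
import csv
from collections import Counter
from datetime import datetime

# Assumed timestamp format of the 2014 pickup files, e.g. "4/1/2014 0:11:00".
TIMESTAMP_FORMAT = "%m/%d/%Y %H:%M:%S"


def pickups_by_hour(csv_lines):
    """Count pickups per hour of day (0-23) from an iterable of CSV lines."""
    counts = Counter()
    for row in csv.DictReader(csv_lines):
        ts = datetime.strptime(row["Date/Time"], TIMESTAMP_FORMAT)
        counts[ts.hour] += 1
    return counts
```

Passing an open file works directly, e.g. `pickups_by_hour(open("uber-raw-data-apr14.csv", newline=""))`, and the same pattern extends to grouping by `Base` or by rounded `Lat`/`Lon` cells to map where pickups concentrate.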

Data Science Bowl 2017: Even if you didn’t participate in the recent Data Science Bowl, its data is worth a look. This year’s competition, with a $1 million prize, was built around high-resolution lung scans provided by the National Cancer Institute: over a thousand low-dose CT images from high-risk patients in DICOM format, where each image contains a series with multiple axial slices of the chest cavity. Here is the download link.
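Because each scan arrives as a series of separate axial slices, a common first step is to order the slices along the body axis and convert the stored pixel values to Hounsfield units with the standard DICOM rescale, HU = stored value x RescaleSlope + RescaleIntercept. The sketch below assumes the per-slice metadata has already been read (for example with pydicom) into a hypothetical `Slice` record holding the slice's z position (taken from ImagePositionPatient), its pixel rows, and its rescale slope and intercept.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Slice:
    """Hypothetical per-slice record extracted from one DICOM file."""
    z: float                 # position along the body axis (ImagePositionPatient[2])
    pixels: List[List[int]]  # stored pixel values, one list per image row
    slope: float             # RescaleSlope
    intercept: float         # RescaleIntercept


def build_volume(slices: List[Slice]) -> List[List[List[float]]]:
    """Sort slices by z and rescale them to Hounsfield units: volume[slice][row][col]."""
    ordered = sorted(slices, key=lambda s: s.z)
    return [
        [[value * s.slope + s.intercept for value in row] for row in s.pixels]
        for s in ordered
    ]
```

Sorting on the recorded position rather than on file names avoids silently scrambling the chest volume when files are not stored in anatomical order.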

Maluuba NewsQA Dataset: Amid the explosion of news content, Maluuba, the Montreal-headquartered AI research firm owned by Microsoft, put together a crowd-sourced machine reading dataset for developing algorithms capable of answering questions that require human-level comprehension and reasoning skills. The dataset has over 100,000 question-answer pairs based on CNN news articles, with the questions composed by human users in natural language. Released last year, it is pegged as more challenging than existing machine comprehension datasets and is worth a look for anyone researching natural language understanding. Check out the download link.

YouTube-8M Dataset: When it comes to video analysis, this is the biggest dataset available for training, with a whopping 8 million YouTube videos labeled with the entities that appear in them. Released by Google, YouTube-8M is a large-scale labeled video dataset developed to push research on video understanding, noisy data modeling, transfer learning and domain adaptation approaches for video. The 2017 challenge, run as a Kaggle competition, had a $100,000 prize pool. Check out the download link here.