For anyone with an interest in Data Science, Regression is probably one of the first Predictive Models they will begin with. Regression is easy to understand and even easier to implement, thanks to the many ready-made packages and libraries that perform complex mathematical computations effortlessly, without leaving your brain squashed.

Having said that, there are lots of regression models or algorithms that one can use while building Machine Learning applications, and one of the most challenging tasks is finding a model with the least variance in its predictions. Even after a rigorous model selection process produces a best-fitting model, you may still see high variance in its output when the same model is run against the same data multiple times.

The StackingCVRegressor

Ensemble learning is a technique that combines the strengths of different algorithms to make predictions for a given sample of data. By combining the best of multiple algorithms, an ensemble can give more stable predictions, with lower variance than we get from a single regressor. The StackingCVRegressor is one such algorithm: it lets us use multiple regressors together to make a prediction.
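A quick way to see why combining models can lower variance is a toy simulation. The sketch below uses plain averaging rather than stacking, and assumes three hypothetical models whose errors are independent Gaussian noise around a true value of 10. Real models trained on the same data usually make correlated errors, which shrinks the benefit.

```python
import random
import statistics

# Toy setup (an assumption for illustration): the true target is 10.0 and each
# of three hypothetical "models" predicts it with independent Gaussian noise.
TRUE_VALUE = 10.0
N_TRIALS = 2000
N_MODELS = 3

rng = random.Random(42)  # fixed seed so the sketch is reproducible

single_model_preds = []  # predictions from model 0 alone
averaged_preds = []      # mean of all three models' predictions

for _ in range(N_TRIALS):
    preds = [TRUE_VALUE + rng.gauss(0.0, 1.0) for _ in range(N_MODELS)]
    single_model_preds.append(preds[0])
    averaged_preds.append(sum(preds) / N_MODELS)

var_single = statistics.pvariance(single_model_preds)
var_avg = statistics.pvariance(averaged_preds)

print(f"variance of a single model: {var_single:.3f}")
print(f"variance of the average:    {var_avg:.3f}")
```

With independent errors, the variance of the average comes out at roughly a third of a single model's variance.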

The StackingCVRegressor is provided by the mlxtend library for Python, in its mlxtend.regressor module.

How StackingCVRegressor Predicts

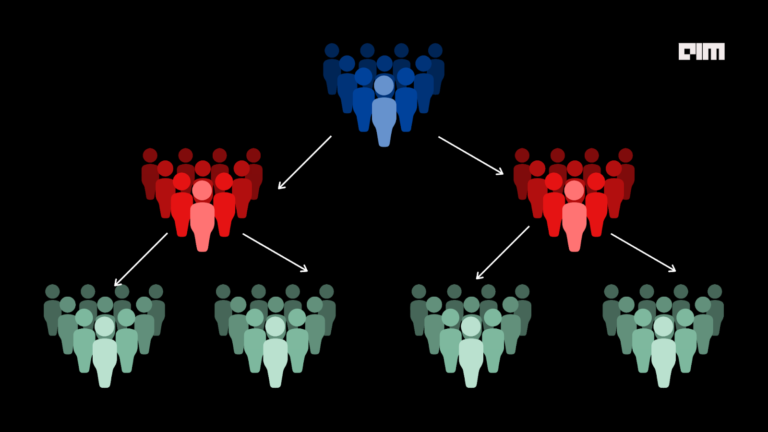

The StackingCVRegressor has two levels of regressors, the level one regressors and a level two regressor (the meta-regressor), and it relies on a concept called out-of-fold predictions.

It starts by splitting the dataset into k folds. Over k successive rounds, k-1 folds are used to fit the level one regressors, and the remaining fold is held out for them to predict. Since every sample ends up being predicted exactly once, by regressors that were not fitted on it, these out-of-fold predictions flow into the level two regressor as its training input. Once this training is finished, the level one regressors are fitted again on the entire dataset. See the official mlxtend GitHub repository for the full StackingCVRegressor documentation.
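The out-of-fold procedure can be sketched from scratch in plain Python. This is not mlxtend's implementation: it is a toy version that assumes made-up data, uses one simple least-squares line per feature as the level one regressors (the hypothetical helper `fit_line`), a multiple linear regression as the level two regressor (`fit_linear`), and assigns folds round-robin by index without shuffling.

```python
import random


def fit_line(xs, ys):
    """Least-squares fit of y = a + b * x on a single feature; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def solve(matrix, rhs):
    """Solve matrix @ w = rhs with Gauss-Jordan elimination and partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def fit_linear(rows, ys):
    """Multiple linear regression with an intercept, via the normal equations."""
    design = [[1.0] + list(r) for r in rows]
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(p)]
    return solve(xtx, xty)  # [intercept, w1, w2, ...]


def stacking_cv_fit(X, y, k=5):
    """Out-of-fold stacking: one line regressor per feature, linear meta-regressor."""
    n, n_base = len(X), len(X[0])
    folds = [list(range(start, n, k)) for start in range(k)]  # round-robin, no shuffling
    oof = [[0.0] * n_base for _ in range(n)]  # out-of-fold predictions (meta-features)
    for held_out in folds:
        held = set(held_out)
        train = [i for i in range(n) if i not in held]
        for j in range(n_base):
            # Fit on the k-1 training folds, then predict the held-out fold
            a, b = fit_line([X[i][j] for i in train], [y[i] for i in train])
            for i in held_out:
                oof[i][j] = a + b * X[i][j]
    meta = fit_linear(oof, y)  # level two learns only from out-of-fold predictions
    # Finally, refit every level one regressor on the entire dataset
    base = [fit_line([row[j] for row in X], y) for j in range(n_base)]
    return base, meta


def stacking_predict(model, x):
    """Feed the level one predictions for one sample into the level two regressor."""
    base, meta = model
    level_one = [a + b * x[j] for j, (a, b) in enumerate(base)]
    return meta[0] + sum(w * p for w, p in zip(meta[1:], level_one))


# Toy data (an assumption for illustration): y = 2*x0 + 3*x1 + noise
rng = random.Random(0)
X = [[rng.uniform(0, 10), rng.uniform(0, 10)] for _ in range(100)]
y = [2.0 * x0 + 3.0 * x1 + rng.gauss(0, 0.5) for x0, x1 in X]

model = stacking_cv_fit(X, y, k=5)
print(stacking_predict(model, [5.0, 5.0]))  # the noise-free value here is 25
```

The level two regressor only ever sees predictions made on folds the level one regressors were not fitted on, so it learns how much to trust each model from honest, unseen-data predictions rather than from overfitted in-sample ones.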

Using The StackingCVRegressor In Python

Let us get our hands dirty by writing Python code that builds a regression model using the StackingCVRegressor.

We will be using the data from one of the hottest hackathons on the Internet, the Predicting Restaurant Food Cost Hackathon by MachineHack.

Getting the Datasets

Head to MachineHack's Predicting Restaurant Food Cost Hackathon, sign up and start the hackathon. You will find the data set as PARTICIPANTS_DATA_FINAL in the Attachments section.


Let's Code!

Since this tutorial focuses on the StackingCVRegressor, I will skip all the stages that come before modelling. You can use the following resources to help you get to that stage.

- A Complete Guide to Cracking The Predicting Restaurant Food Cost Hackathon By MachineHack

- Hands-on Tutorial On Data Pre-processing In Python

After working through all the stages up to modelling, you are ready to build the StackingCVRegressor for the dataset in hand.

After preprocessing, the data sets will look like the ones shown below:

Creating a New Training Set and Validation Set

For validation, we will split new_data_train into two data sets, data_train and data_val. The following code block then generates the dependent and independent variable sets from the newly formed data_train and data_val datasets.

```python
from sklearn.model_selection import train_test_split

# Hold out 20% of the preprocessed training data for validation
data_train, data_val = train_test_split(new_data_train, test_size=0.2, random_state=2)

# Classifying Independent and Dependent Features
# _______________________________________________

# Dependent Variable (the last column, COST)
Y_train = data_train.iloc[:, -1].values

# Independent Variables
X_train = data_train.iloc[:, 0:-1].values

# Independent Variables for the validation set (used as the test set below)
X_test = data_val.iloc[:, 0:-1].values
```

Applying Feature Scaling

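```python
# Feature Scaling
# ________________

from sklearn.preprocessing import StandardScaler

# Scale the features; sc_X holds the feature means and standard deviations
sc_X = StandardScaler()
X_train = sc_X.fit_transform(X_train)
X_test = sc_X.transform(X_test)

# Scale the target with its own scaler; sc is used later to map predictions back to COST
sc = StandardScaler()
Y_train = Y_train.reshape((len(Y_train), 1))  # reshaping to a column to fit the scaler
Y_train = sc.fit_transform(Y_train)
Y_train = Y_train.ravel()
```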
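Because the target is standardized too, every prediction has to be mapped back to the cost scale using the mean and standard deviation learned from the target. The sketch below uses a hypothetical `SimpleScaler` class that mimics standardization on a single column (it is not scikit-learn's code) to show the round trip, and what happens when the wrong statistics are used. The toy values are made up.

```python
import statistics


class SimpleScaler:
    """Hypothetical stand-in for StandardScaler, for a single column of values."""

    def fit(self, values):
        self.mean = statistics.fmean(values)
        # Population standard deviation (ddof=0), the convention StandardScaler uses
        self.std = statistics.pstdev(values)
        return self

    def transform(self, values):
        return [(v - self.mean) / self.std for v in values]

    def inverse_transform(self, values):
        return [v * self.std + self.mean for v in values]


costs = [200.0, 400.0, 600.0, 800.0]  # toy target values
features = [1.0, 2.0, 3.0, 4.0]       # toy feature values on a different scale

y_scaler = SimpleScaler().fit(costs)
x_scaler = SimpleScaler().fit(features)

scaled_costs = y_scaler.transform(costs)
print(y_scaler.inverse_transform(scaled_costs))  # back on the cost scale
print(x_scaler.inverse_transform(scaled_costs))  # wrong statistics: lands on the 1-4 feature scale
```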

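RMSLE For Model Evaluation

The score used here is 1 minus the root mean squared logarithmic error (RMSLE) between the predicted and actual costs, so higher is better and 1 is a perfect score.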

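```python
import numpy as np

def score(y_pred, y_true):
    # Root mean squared logarithmic error (base-10 logs), turned into a score where 1 is perfect
    error = np.square(np.log10(y_pred + 1) - np.log10(y_true + 1)).mean() ** 0.5
    return 1 - error

# Actual costs of the validation set
actual_cost = np.asarray(data_val['COST'])
```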
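For a quick sanity check of the metric, here is the same score written in plain Python with two hand-checkable cases. Like the function above, it uses base-10 logarithms; RMSLE is often defined with the natural logarithm instead, which multiplies the error by ln(10) ≈ 2.303, so scores computed the two ways are not directly comparable.

```python
import math


def score(y_pred, y_true):
    """1 - RMSLE, using base-10 logarithms as in the numpy version."""
    squared = [
        (math.log10(p + 1) - math.log10(t + 1)) ** 2
        for p, t in zip(y_pred, y_true)
    ]
    error = math.sqrt(sum(squared) / len(squared))
    return 1 - error


print(score([99, 999], [99, 999]))  # perfect predictions → 1.0
print(score([9, 99], [99, 999]))    # each log10 gap is 1, so RMSLE = 1 and the score is 0
```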

We will first evaluate the performances of individual algorithms on our data set.

eXtreme Gradient Boosting

```python
######################################################################
# eXtreme Gradient Boosting
######################################################################

# Importing and Initializing the Regressor
from xgboost import XGBRegressor

xgbr = XGBRegressor()

# Fitting the data to the regressor
xgbr.fit(X_train, Y_train)

# Predicting the Test set results and undoing the target scaling
# (inverse_transform expects a 2-D column, so reshape and flatten back)
y_pred_xgbr = sc.inverse_transform(xgbr.predict(X_test).reshape(-1, 1)).ravel()

# Evaluating
print("\n\nXGBoost SCORE : ", score(y_pred_xgbr, actual_cost))
```

Output:

XGBoost SCORE : 0.8132790047309137

Random Forest Regression

```python
######################################################################
# Random Forest Regression
######################################################################

# Importing and Initializing the Regressor
from sklearn.ensemble import RandomForestRegressor

rf = RandomForestRegressor(n_estimators=100, random_state=1)

# Fitting the data to the regressor
rf.fit(X_train, Y_train)

# Predicting the Test set results and undoing the target scaling
y_pred_rf = sc.inverse_transform(rf.predict(X_test).reshape(-1, 1)).ravel()

# Evaluating
print("\n\nRandom Forest SCORE : ", score(y_pred_rf, actual_cost))
```

Output:

Random Forest SCORE : 0.8241776753311784

Linear Regression

```python
######################################################################
# Linear Regression
######################################################################

# Importing and Initializing the Regressor
from sklearn.linear_model import LinearRegression

lr = LinearRegression()

# Fitting the data to the regressor
lr.fit(X_train, Y_train)

# Predicting the Test set results and undoing the target scaling
Y_pred_linear = sc.inverse_transform(lr.predict(X_test).reshape(-1, 1)).ravel()

# Evaluating
print("\n\nLinear Regression SCORE : ", score(Y_pred_linear, actual_cost))
```

Output:

Linear Regression SCORE : 0.7123640090219118

StackingCVRegressor

```python
######################################################################
# Stacking Ensemble Regression
######################################################################

# Importing and Initializing the Regressor
from mlxtend.regressor import StackingCVRegressor

# Initializing Level One Regressors
xgbr = XGBRegressor()
rf = RandomForestRegressor(n_estimators=100, random_state=1)
lr = LinearRegression()

# Stacking the various regressors initialized before;
# XGBoost also serves as the level two (meta) regressor, and
# use_features_in_secondary=True passes the original features to it as well
stack = StackingCVRegressor(regressors=(xgbr, rf, lr), meta_regressor=xgbr, use_features_in_secondary=True)

# Fitting the data
stack.fit(X_train, Y_train)

# Predicting the Test set results and undoing the target scaling
y_pred_ense = sc.inverse_transform(stack.predict(X_test).reshape(-1, 1)).ravel()

# Evaluating
print("\n\nStackingCVRegressor SCORE : ", score(y_pred_ense, actual_cost))
```

Output:

StackingCVRegressor SCORE : 0.827546146596208
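Setting `use_features_in_secondary=True` means the level two regressor is trained on the original features as well as the level one predictions. The hypothetical helper below sketches how those training rows are assembled; the toy numbers are made up, and putting the predictions before the features is a choice of this sketch, not necessarily mlxtend's column order.

```python
def build_meta_features(oof_preds, X, use_features_in_secondary=False):
    """Assemble the rows the level two regressor is trained on (hypothetical helper)."""
    if use_features_in_secondary:
        # Level one predictions plus the original features, side by side
        return [list(p) + list(x) for p, x in zip(oof_preds, X)]
    # Default: the level two regressor sees only the level one predictions
    return [list(p) for p in oof_preds]


# Toy values: 2 samples, 3 level one regressors (like XGBoost, Random Forest,
# Linear Regression) and 2 original features
oof_preds = [[0.1, 0.3, 0.2], [-0.5, -0.4, -0.6]]
X = [[1.2, -0.7], [0.3, 0.9]]

print(build_meta_features(oof_preds, X))                                  # 3 columns per row
print(build_meta_features(oof_preds, X, use_features_in_secondary=True))  # 5 columns per row
```

Passing the features along lets the meta-regressor learn, for example, that one level one model is more reliable in some regions of the feature space than in others, at the cost of a wider input for the meta-regressor to fit.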

Conclusion

We can see a slight improvement in the score of the StackingCVRegressor (0.8275) over the best individual algorithm, Random Forest (0.8242); however, this may not always be the case. Depending on the data and the level one regressors used, the StackingCVRegressor may turn out to be more or less accurate than the individual algorithms. What it does tend to offer is more stable predictions: because the level two regressor learns from the out-of-fold predictions of several different algorithms, it can be expected to show less variance than relying on any single one of them, although that benefit is not guaranteed.