As machine learning (ML) evolved, conventional ways of solving problems gradually gave way to it. ML offers new ways to tackle real-world problems with its methods and algorithms. Because ML lets computers learn from data, a problem can be analysed quickly from several perspectives.

ML draws on optimisation, the same idea that underlies linear programming: training a model is generally framed as an optimisation problem. Concepts such as regression help establish relationships within the large amounts of data required for learning. In this article, we will look at two extensions of linear regression, ridge regression and lasso, which are used for regularisation in ML.

How Regression Analysis Impacts ML

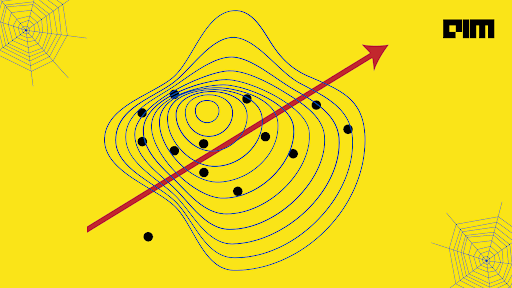

Regression is a mathematical analysis that brings out the relationship between a dependent variable and one or more independent variables. It is used in the supervised learning technique of ML, where it relates the features of a model (the independent variables) to the labels (the dependent variable).

Regression also supplies a loss function (or cost function) for ML algorithms, such as the sum of squared residuals. This matters because the loss function measures how well the model fits the training data, and training consists of minimising it. Here we discuss linear regression models, which are frequently used in ML. Linear regression (LR) is one of the simplest regression methods. It models a linear relationship between continuous variables. It is broadly classified into two types: simple LR and multiple LR.

In simple LR, there is one dependent variable and one independent variable, whereas in multiple LR several independent variables affect a single dependent variable.
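A minimal sketch of simple LR, assuming ordinary least squares as the fitting criterion (the article does not name one). The function name `fit_simple_lr` and the data are made up for illustration.

```python
from statistics import mean


def fit_simple_lr(x, y):
    """Return the least-squares slope and intercept for a single feature."""
    x_bar, y_bar = mean(x), mean(y)
    # Covariance-like and variance-like sums (the 1/n factors cancel out).
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical data lying exactly on the line y = 2x + 1.
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_simple_lr(x, y)
print(slope, intercept)  # slope 2.0, intercept 1.0 for this data
```

Multiple LR follows the same idea with several feature columns, so one coefficient is estimated per feature instead of a single slope.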

As mentioned earlier, LR is used in supervised learning. Supervised learning works with labelled data: the model learns from features paired with known labels, and then uses the features of new data to decide which label it falls under.

Ridge Regression

In multiple LR, there are many variables at play. This can lead the model to rely on the wrong variables, for example when some are irrelevant or strongly correlated with each other, which gives undesirable output. Ridge regression is used to overcome this. It is a regularisation technique in which a penalty term, controlled by a tuning parameter, is added to the loss function and tuned to offset the effect of such variables (in the statistical context, this effect is referred to as ‘noise’).

Ridge regression is essentially LR with regularisation. Mathematically, the underlying model is given by

Y = XB + e

where Y is the dependent variable (label), X holds the independent variables (features), B represents the regression coefficients and e represents the residuals (the part of Y that the model does not explain). Before fitting, the variables are standardised by subtracting their respective means and dividing by their standard deviations.
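The standardisation step can be sketched as follows. This is only an illustration: it assumes the population standard deviation (`pstdev`), which the article does not specify, and the helper name `standardise` and the sample values are made up.

```python
from statistics import mean, pstdev


def standardise(column):
    """Subtract the column mean and divide by the column standard deviation."""
    mu = mean(column)
    sigma = pstdev(column)  # assumption: population (not sample) deviation
    return [(value - mu) / sigma for value in column]


# Hypothetical feature column on an arbitrary scale.
heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
z = standardise(heights_cm)

# After standardising, the column has mean 0 and standard deviation 1,
# so a penalty on the coefficients treats every feature on the same scale.
print(round(abs(mean(z)), 9), round(pstdev(z), 9))
```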

The tuning parameter is included in the ridge regression model as part of regularisation. It is denoted by the symbol λ. Ridge regression minimises the residual sum of squares plus λ times the sum of the squared coefficients. The higher the value of λ, the more the coefficients shrink towards zero. The lower the value of λ, the closer the solution is to the ordinary least squares solution, which it matches exactly at λ = 0. In simpler words, this parameter decides how strongly the coefficients are penalised. λ is chosen using a technique called cross-validation.
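The effect of the tuning parameter can be sketched in plain Python. This example assumes the standard closed-form ridge solution, which solves (XᵀX + lam·I)B = XᵀY on centred data with no intercept, and it uses leave-one-out cross-validation as one possible form of cross-validation. The names `solve`, `ridge_fit`, `loo_cv_error`, the candidate grid and the data are all hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def ridge_fit(X, y, lam):
    """Closed-form ridge coefficients: (X^T X + lam * I) B = X^T y."""
    p = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    for i in range(p):
        XtX[i][i] += lam  # the ridge penalty only touches the diagonal
    return solve(XtX, Xty)


def loo_cv_error(X, y, lam):
    """Mean squared prediction error when each row is held out in turn."""
    total = 0.0
    for k in range(len(y)):
        B = ridge_fit(X[:k] + X[k + 1:], y[:k] + y[k + 1:], lam)
        prediction = sum(b * v for b, v in zip(B, X[k]))
        total += (y[k] - prediction) ** 2
    return total / len(y)


# Hypothetical centred data: two features and a slightly noisy response.
X = [[-1.0, -1.0], [-1.0, 1.0], [1.0, -1.0], [1.0, 1.0]]
y = [-4.2, -1.7, 2.1, 3.8]

for lam in (0.0, 1.0, 4.0, 16.0):
    print(lam, ridge_fit(X, y, lam))  # coefficients shrink as lam grows

grid = [0.0, 0.1, 1.0, 4.0, 16.0]
best_lam = min(grid, key=lambda lam: loo_cv_error(X, y, lam))
print("lam chosen by leave-one-out CV:", best_lam)
```

With `lam = 0.0` the result is the ordinary least squares fit. Larger values pull both coefficients towards zero but never make them exactly zero.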

Lasso

Least absolute shrinkage and selection operator, abbreviated as LASSO or lasso, is an LR technique that also regularises the variables under consideration. Its statistical analysis is very similar to that of ridge regression; it differs in the penalty term. Lasso penalises the sum of the absolute values of the regression coefficients, which shrinks them (hence the ‘shrinkage’ in its name). It can even set some coefficients exactly to zero, removing the corresponding variables from the model altogether. Compared with ridge regression, the penalty uses the absolute values of the coefficients instead of their squared values.
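A minimal sketch of how lasso can set a coefficient exactly to zero. It assumes coordinate descent with soft-thresholding, a common way to fit lasso but not one the article names, and the objective ½‖Y − XB‖² + lam·Σ|Bⱼ| on centred data with no intercept (the ½ scaling is a convention chosen here). The function names and data are hypothetical.

```python
def soft_threshold(rho, lam):
    """Shrink rho towards zero by lam, returning exactly 0.0 inside [-lam, lam]."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_fit(X, y, lam, n_iter=100):
    """Coordinate descent for 0.5 * ||y - X B||^2 + lam * sum(|B_j|)."""
    n, p = len(X), len(X[0])
    B = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the residual that ignores feature j.
            rho = 0.0
            for i in range(n):
                pred_without_j = sum(X[i][k] * B[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - pred_without_j)
            z = sum(X[i][j] ** 2 for i in range(n))
            B[j] = soft_threshold(rho, lam) / z
    return B


# Hypothetical centred data generated as y = 3 * x1 + 1 * x2 (no noise).
X = [[-1.0, -1.0], [-1.0, 1.0], [1.0, -1.0], [1.0, 1.0]]
y = [-4.0, -2.0, 2.0, 4.0]

print(lasso_fit(X, y, 0.0))  # no penalty: recovers [3.0, 1.0]
print(lasso_fit(X, y, 2.0))  # both coefficients shrink
print(lasso_fit(X, y, 4.0))  # the weaker coefficient becomes exactly 0.0
```

The exact zero comes from the soft-thresholding step: once the penalty is at least as large as a feature's correlation with the residual, that feature's coefficient is dropped rather than merely reduced.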

This method was proposed by Professor Robert Tibshirani from the University of Toronto, Canada. He wrote, “The Lasso minimises the residual sum of squares subject to the sum of the absolute value of the coefficients being less than a constant. Because of the nature of this constraint, it tends to produce some coefficients that are exactly 0 and hence gives interpretable models”.
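Written out with the symbols used earlier in this article (Y, X and B), the constrained form described in the quote can be sketched as below, with the ridge constraint alongside for comparison. The constant is named t here, and a smaller t means more shrinkage.

```latex
\[
\hat{B}^{\text{lasso}} = \arg\min_{B} \; \lVert Y - XB \rVert_2^2
\quad \text{subject to} \quad \sum_{j} \lvert B_j \rvert \le t
\]
\[
\hat{B}^{\text{ridge}} = \arg\min_{B} \; \lVert Y - XB \rVert_2^2
\quad \text{subject to} \quad \sum_{j} B_j^2 \le t
\]
```

The only difference is the constraint. The absolute-value constraint region has corners on the coordinate axes, which is why the lasso solution often lands on a point where some coefficients are exactly 0.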

In his journal article titled Regression Shrinkage and Selection via the Lasso, Tibshirani compares this technique with other statistical methods such as subset selection and ridge regression. He goes on to say that lasso can be extended to generalised regression models and tree-based models. The paper presents lasso as a general method for estimation in linear models.

Comments:

In ML, both ridge regression and lasso have their advantages. Ridge regression shrinks the coefficients but does not eliminate them (bring them to zero), whereas lasso can, which amounts to automatic variable selection for the model. This is where lasso gains the upper hand. While this is often preferable, it should be noted that both methods still rely on the assumptions of linear regression, which may not always hold.

Both techniques tackle overfitting, which is common in realistic statistical models. The choice between them depends on the computing power and data available, and on the statistical software used. Ridge regression is generally faster to compute than lasso, since it has a closed-form solution, but lasso has the advantage of removing unnecessary parameters from the model entirely.