TensorFlow’s machine learning platform has a comprehensive, flexible ecosystem of tools, libraries and community resources. It lets researchers push the state of the art in ML and lets developers easily build and deploy ML-powered applications.

At the recently concluded TensorFlow Developer Summit, alongside TensorFlow 2.0, the team also spotlighted TensorFlow Lite, its open-source framework for mobile devices, and two development boards, SparkFun Edge and Coral, which use TensorFlow Lite to run machine learning tasks directly on small edge devices, much as it does on smartphones.

With TensorFlow Lite, Google aims to make smartphones the next go-to platform for running machine learning models.

TensorFlow Lite is TensorFlow’s lightweight solution for mobile and embedded devices. It enables on-device machine learning inference with low latency and small binary size.

TensorFlow Lite supports a set of core operators, both quantised and float, which have been tuned for mobile platforms.
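To make "quantised" concrete: TensorFlow Lite's 8-bit kernels represent real numbers with an affine mapping, real_value = scale * (q - zero_point), where q is an int8 value. The pure-Python sketch below illustrates only that mapping; the helper names choose_params, quantize and dequantize are hypothetical, and TensorFlow Lite's own parameter selection differs in detail (for example, weights use symmetric per-channel quantization).

```python
# Minimal sketch of affine (asymmetric) 8-bit quantization:
#   real_value = scale * (q - zero_point)
# Illustrative pure Python, not TensorFlow Lite's actual implementation.

def choose_params(x_min: float, x_max: float, qmin: int = -128, qmax: int = 127):
    """Pick scale and zero_point so that [x_min, x_max] maps onto [qmin, qmax]."""
    # Include 0.0 in the range so that zero is represented exactly.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = round(qmin - x_min / scale)
    return scale, int(max(qmin, min(qmax, zero_point)))


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    # Round each value to the nearest step and clamp it into the int8 range.
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    # Map int8 values back to (approximate) real numbers.
    return [scale * (q - zero_point) for q in qvalues]


weights = [-1.0, -0.25, 0.0, 0.5, 2.0]
scale, zp = choose_params(min(weights), max(weights))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
print(q)
print([round(r, 3) for r in restored])
```

Each restored value differs from the original by at most half a quantization step (scale / 2), which is the precision traded for 4x smaller storage than float32.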

Why Lite, Why Now?

Makers of smart devices now feel an urgent need to meet users' growing demand for devices that pack powerful capabilities while staying lightweight and efficient: in other words, hardware-accelerated devices.

Devices now offer human-like interactions through vision and speech applications. On Android, TensorFlow Lite supports this lighter way of working through the Android Neural Networks API (NNAPI), which gives it access to hardware acceleration. This opens up a whole new range of possibilities for on-device intelligence.

Be it information security or accelerated responses, machine learning models have shown great promise. And, what better way to deploy these models than TensorFlow?

Google has been using TensorFlow Lite for photography on its flagship Pixel phones. For Portrait mode on the Pixel 3, TensorFlow Lite GPU inference accelerates the foreground-background segmentation model by over 4x and the new depth estimation model by over 10x compared with CPU inference at floating-point precision.

This was made possible by accelerating the compute-intensive networks behind these key user-facing features.

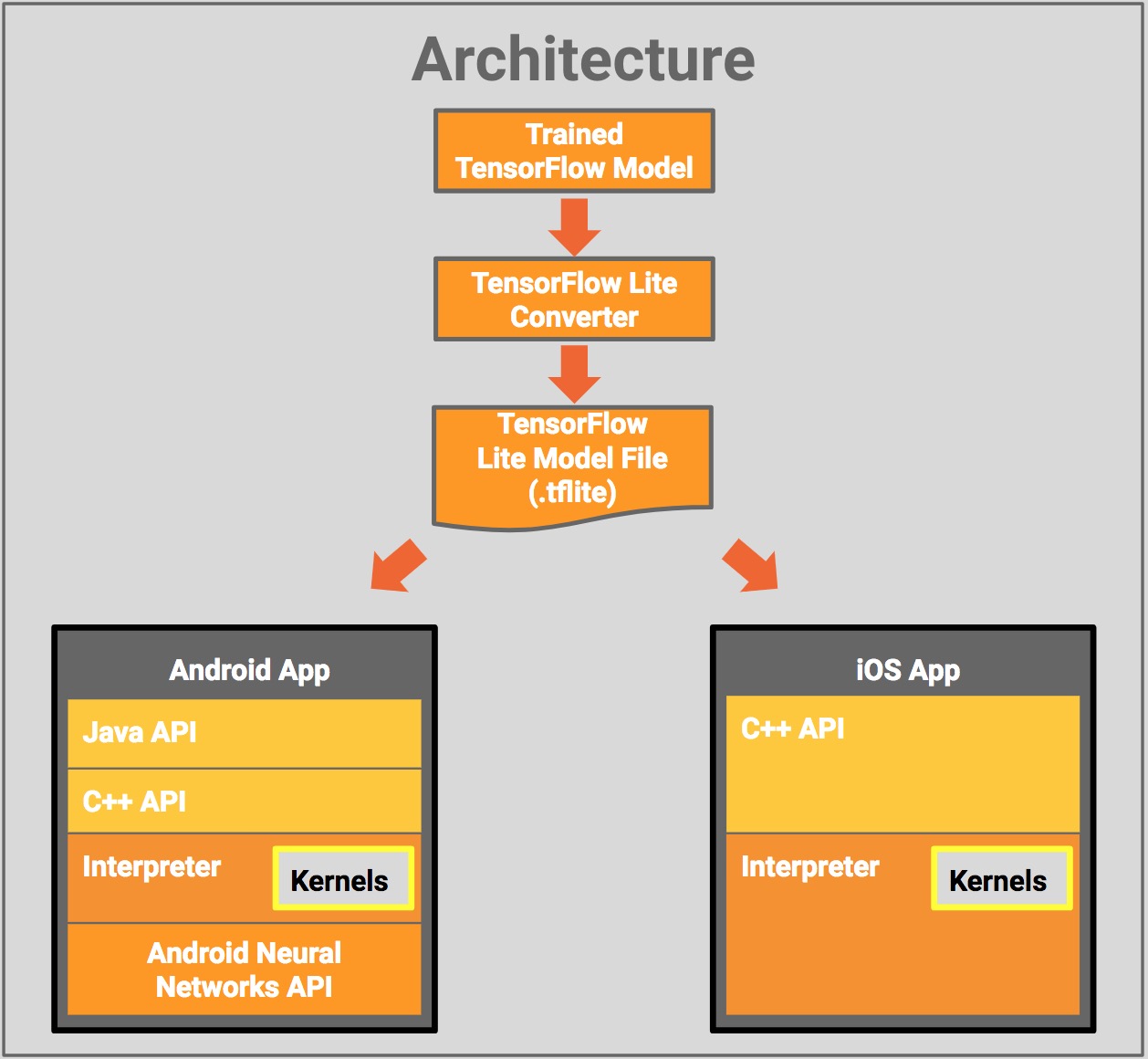

Source: TensorFlow Lite

The Python API for running a model with the TensorFlow Lite interpreter is tf.lite.Interpreter.
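A minimal sketch of the usual tf.lite.Interpreter workflow (load, allocate tensors, set input, invoke, read output), assuming TensorFlow 2.x. The tiny Keras model is a hypothetical stand-in so the example is self-contained; with an existing file you would pass model_path="model.tflite" instead of model_content.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy model, converted in memory so the example runs on its own.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])
tflite_model = tf.lite.TFLiteConverter.from_keras_model(model).convert()

# Load the serialized model into the interpreter and allocate its tensors.
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()

input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

# Feed one input batch, run inference, and read back the result.
x = np.random.rand(1, 4).astype(np.float32)
interpreter.set_tensor(input_details[0]["index"], x)
interpreter.invoke()
y = interpreter.get_tensor(output_details[0]["index"])
print(y.shape, y)
```

The input and output details also report each tensor's dtype and, for quantised models, its scale and zero point, which is how callers know whether to feed float or int8 data.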

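Converting a TensorFlow Keras model to a TensorFlow Lite model (TensorFlow 1.x API; in TensorFlow 2.x this method lives at tf.compat.v1.lite.TFLiteConverter.from_keras_model_file):

```python
converter = tf.lite.TFLiteConverter.from_keras_model_file(keras_file)  # keras_file: path to a saved .h5 model
tflite_model = converter.convert()  # serialized TensorFlow Lite model as bytes
```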
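In TensorFlow 2.x, the converter works on an in-memory Keras model through tf.lite.TFLiteConverter.from_keras_model rather than a file path. A minimal sketch, assuming TensorFlow 2.x; the model below is a hypothetical stand-in for a trained one (which you could load with tf.keras.models.load_model), and the output file name model.tflite is an arbitrary choice.

```python
import tensorflow as tf

# Hypothetical stand-in for a trained Keras model.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(2),
])

# TF 2.x converter: takes the Keras model object directly.
converter = tf.lite.TFLiteConverter.from_keras_model(model)
tflite_model = converter.convert()  # serialized model as bytes

# Save the model so it can be bundled with a mobile app.
with open("model.tflite", "wb") as f:
    f.write(tflite_model)
print(len(tflite_model), "bytes written")
```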

Users can deploy pre-trained TensorFlow Probability models, TensorFlow KNN models and TensorFlow k-means models on Android by converting them to the TensorFlow Lite format (see the TensorFlow Lite conversion guide) and bundling the converted model in the Android app.

Key Takeaways From TF Lite Announcement

TensorFlow Lite:

- Run custom models on mobile platforms via a set of core operators tuned for this task.

- A new file format based on FlatBuffers.

- A faster on-device interpreter.

- A converter that turns trained TensorFlow models into the TensorFlow Lite format.

- A small footprint: the TensorFlow Lite binary can be under about 300 KB when only the operators a model needs are included, which allows faster deployment.
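Two of the points above, the FlatBuffers file format and the focus on small size, can be checked directly on a converted model. The sketch below, assuming TensorFlow 2.x, converts a hypothetical weight-heavy toy model twice: once as plain float, and once with post-training dynamic-range quantization (tf.lite.Optimize.DEFAULT), which stores weights as 8-bit integers. Note that this shrinks the model file, which is a separate effect from the interpreter's small binary size; actual savings depend on the model.

```python
import tensorflow as tf

# Hypothetical toy model with about 65k float32 weights, used only to compare sizes.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(256,)),
    tf.keras.layers.Dense(256),
])


def convert(keras_model, quantize: bool) -> bytes:
    converter = tf.lite.TFLiteConverter.from_keras_model(keras_model)
    if quantize:
        # Post-training dynamic-range quantization: weights stored as int8.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()


float_model = convert(model, quantize=False)
quant_model = convert(model, quantize=True)

# A .tflite file is a FlatBuffer; its file identifier "TFL3" sits at bytes 4-8.
print(float_model[4:8], quant_model[4:8])
print(f"float: {len(float_model)} bytes, quantized: {len(quant_model)} bytes")
```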

SparkFun Edge Development Board:

- Consumes extremely little power, less than 1 mW in many cases.

- A single coin-cell battery can power it for many days.

- Runs entirely on-device.

- Uses a 20 KB model.

- Uses less than 100 KB of RAM and 80 KB of flash.
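A back-of-the-envelope calculation shows why a draw of about 1 mW translates into days of runtime on a coin cell. The battery figures are assumptions, not from the announcement: a CR2032 cell with a nominal 225 mAh capacity at 3.0 V, a constant 1 mW load, and no conversion losses or self-discharge.

```python
# Rough battery-life estimate for a board drawing about 1 mW.
# Assumed (not from the announcement): CR2032 coin cell, 225 mAh at 3.0 V,
# constant load, no losses.

def battery_life_days(capacity_mah: float, voltage_v: float, power_mw: float) -> float:
    energy_mwh = capacity_mah * voltage_v  # mAh * V = mWh of stored energy
    hours = energy_mwh / power_mw          # mWh / mW = hours of runtime
    return hours / 24


days = battery_life_days(capacity_mah=225, voltage_v=3.0, power_mw=1.0)
print(f"{days:.1f} days")
```

Under these assumptions the cell stores 675 mWh, or roughly four weeks at 1 mW; real runtimes will be shorter once peripherals and battery losses are counted.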

Coral Development Board:

- Provides on-device machine learning acceleration.

- Uses the Google Edge TPU, which does not depend on a network connection and can perform tasks such as object detection in under 15 ms. For instance, shop-floor personnel with no prior knowledge of machine learning can train the device within seconds, thanks to the Edge TPU, and then use it for object detection.

- Runs inference with TensorFlow Lite.
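Because the Coral board runs inference through TensorFlow Lite, the Edge TPU is attached to a regular interpreter as a delegate. A minimal sketch, assuming the full TensorFlow 2.x package and a Linux system where the Edge TPU runtime library is named "libedgetpu.so.1" (other platforms use different file names); the model must also have been compiled for the Edge TPU, or its operations will simply run on the CPU. The helper make_interpreter and the toy model are hypothetical, and the code falls back to the CPU when no Edge TPU runtime is available.

```python
import numpy as np
import tensorflow as tf

# Assumption: Linux file name of the Edge TPU runtime library.
EDGETPU_LIB = "libedgetpu.so.1"


def make_interpreter(model_content: bytes):
    """Return (interpreter, used_edgetpu), falling back to the CPU if needed."""
    try:
        delegate = tf.lite.experimental.load_delegate(EDGETPU_LIB)
        interpreter = tf.lite.Interpreter(
            model_content=model_content, experimental_delegates=[delegate])
        used_edgetpu = True
    except (ValueError, OSError):
        # No Edge TPU runtime or device found: run the same model on the CPU.
        interpreter = tf.lite.Interpreter(model_content=model_content)
        used_edgetpu = False
    interpreter.allocate_tensors()
    return interpreter, used_edgetpu


# Usage with a hypothetical toy model (not Edge TPU compiled).
model = tf.keras.Sequential([tf.keras.Input(shape=(4,)), tf.keras.layers.Dense(2)])
interpreter, used_edgetpu = make_interpreter(
    tf.lite.TFLiteConverter.from_keras_model(model).convert())
inp = interpreter.get_input_details()[0]
interpreter.set_tensor(inp["index"], np.zeros((1, 4), dtype=np.float32))
interpreter.invoke()
out = interpreter.get_tensor(interpreter.get_output_details()[0]["index"])
print("Edge TPU" if used_edgetpu else "CPU", out.shape)
```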

State-of-the-art research models for the following tasks can be easily deployed on mobile and edge devices:

- Image classification

- Object detection

- Pose estimation

- Semantic segmentation

For more, check out the TensorFlow GitHub repository.