The Generative Adversarial Network (GAN) has taken the machine learning world by storm. It is one of the most important and pathbreaking inventions in neural networks in the last decade. It was proposed by Ian Goodfellow while working with Yoshua Bengio at the University of Montreal. Goodfellow is now part of a research team at OpenAI. Deep learning legend and Facebook’s AI research head Yann LeCun even went as far as to call adversarial training “the most interesting idea in the last 10 years in ML.” Now researchers at OpenAI have come up with a technique using GANs to disentangle writing styles from digit shapes, manipulate 3D objects and extract information from face datasets.

In brief, a GAN is a deep neural network architecture made of two neural networks pitted against each other: a generator that produces samples and a discriminator that tries to tell them apart from real data. This contest is what the term “adversarial” refers to. Put more precisely, a GAN is a framework that learns to model the probability distribution of its training data. Therefore, by training on an unlabelled dataset, a GAN can generate new samples of the same kind that are not present in the training set.
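To make the two-network contest concrete, here is a minimal sketch of the losses usually used to train a GAN. It assumes the discriminator outputs a probability that a sample is real, and it uses the common non-saturating generator loss. The function names are illustrative and do not come from any particular library.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Loss the discriminator minimises (binary cross-entropy).

    d_real: discriminator outputs D(x) on real samples, each in (0, 1)
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    # Minimising this is the same as maximising
    # E[log D(x)] + E[log(1 - D(G(z)))].
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: reward fakes the discriminator rates as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that separates real from fake well has a low loss,
# while the generator's loss is high until its samples start to fool it.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
print(generator_loss([0.1, 0.2]))
```

In training, the two losses are minimised in alternation, each network updating its own parameters while the other is held fixed.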

Popular Applications Of GANs

GANs have already proven useful in a variety of scenarios. Every single day, a huge number of pictures are captured and shared on social networks, and many of them contain human faces. Facebook has worked on “in-painting technology” that replaces closed eyes with open ones. It does this with a GAN variant known as Exemplar Generative Adversarial Networks (ExGANs), a type of conditional GAN that utilises exemplar information to produce high-quality, personalised in-painting results.

Another amazing application of GANs has been translating text to images. Researchers have figured out how to use the rich descriptive nature of natural language to generate an image that matches what the text describes. This is an apt task for a powerful architecture like the GAN, as it leverages a GAN’s ability to generate similar samples after seeing real data.

Information Theory And GANs

Information theory gives mathematical definitions for the amount of randomness and information in a system. Claude Shannon’s 1948 paper defined how much information can be transmitted over a noisy channel in terms of power and bandwidth.
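As a small illustration of these two ideas, the sketch below computes the entropy of a discrete distribution (a measure of its randomness) and the capacity of a band-limited channel with Gaussian noise, using the well-known formula C = B log2(1 + S/N). The function and parameter names are illustrative.

```python
import math

def entropy_bits(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def channel_capacity(bandwidth_hz, signal_power, noise_power):
    """Capacity in bits per second of a band-limited Gaussian channel."""
    return bandwidth_hz * math.log2(1.0 + signal_power / noise_power)

# A fair coin carries exactly one bit of information per toss.
print(entropy_bits([0.5, 0.5]))        # 1.0
# 1 kHz of bandwidth at a signal-to-noise ratio of 3 gives 2000 bits/s.
print(channel_capacity(1000, 3, 1))    # 2000.0
```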

A research study by Xi Chen and team proposes a GAN-styled neural network that uses information theory to learn “disentangled representations” in an unsupervised manner. The team is made up of researchers from OpenAI and the University of California, Berkeley. They call the resulting network InfoGAN, a generative adversarial network that “maximises the mutual information between a small subset of the latent variables and the observation”.
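Mutual information measures how much knowing one variable tells you about another. The sketch below computes it for two discrete variables from a joint probability table. It only illustrates the quantity itself, not how InfoGAN estimates it during training, and the function name is illustrative.

```python
import math

def mutual_information(joint):
    """Mutual information I(C; X) in bits.

    joint[i][j] is the joint probability P(C = i, X = j).
    """
    p_c = [sum(row) for row in joint]          # marginal over rows
    p_x = [sum(col) for col in zip(*joint)]    # marginal over columns
    mi = 0.0
    for i, row in enumerate(joint):
        for j, p in enumerate(row):
            if p > 0:
                mi += p * math.log2(p / (p_c[i] * p_x[j]))
    return mi

# Independent variables share no information.
print(mutual_information([[0.25, 0.25], [0.25, 0.25]]))  # 0.0
# If X is fully determined by C, one bit is shared.
print(mutual_information([[0.5, 0.0], [0.0, 0.5]]))      # 1.0
```

High mutual information between a latent code and the generated image means the code can be read back from the image, which is what makes the code's effect predictable.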

In unsupervised learning, a “disentangled representation” comes in handy. It consists of features that explicitly represent the most important attributes of a data point, and hence can be very useful for relevant but as yet unknown future tasks. The researchers give the example of a dataset of faces, where a useful disentangled representation could capture attributes ranging from eye colour to the identity of the person. Such a representation can then be used in future tasks such as facial recognition and object detection.

Building on these concepts, the researchers claim to make a “simple modification to the generative adversarial network objective that encourages it to learn interpretable and meaningful representation.”
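For readers who want the modification written out, the following is a sketch in the notation of the InfoGAN paper. None of these symbols are defined in this article: V(D, G) is the standard GAN objective, z is the noise, c is the latent code, Q is an auxiliary network that approximates the distribution of the code given a generated sample, and λ is a weighting hyperparameter.

```latex
V(D, G) = \mathbb{E}_{x \sim P_{\text{data}}}\big[\log D(x)\big]
        + \mathbb{E}_{z \sim P_{\text{noise}}}\big[\log\big(1 - D(G(z))\big)\big]

\min_{G,\,Q}\ \max_{D}\ V_{\text{InfoGAN}}(D, G, Q) = V(D, G) - \lambda\, L_I(G, Q)

L_I(G, Q) = \mathbb{E}_{c \sim P(c),\ x \sim G(z, c)}\big[\log Q(c \mid x)\big] + H(c)
          \ \le\ I\big(c;\, G(z, c)\big)
```

The term L_I is a lower bound on the mutual information between the code and the generated sample, so subtracting it from the GAN objective rewards the generator for producing samples from which the code can be recovered.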

Implementation And Results

In a regular GAN, the only way to manipulate the generator output is to play around with the noise input. And since the noise is random, there is no known relationship between any part of the noise and a feature of the output. This is where the disentangled representations described above help. The solution is to supply a “latent code” alongside the noise, which has a predictable effect on the output. For a categorical latent code, the researchers use a softmax nonlinearity to represent the conditional distribution of the code given the generated sample. For a continuous latent code, they found that a factored Gaussian is sufficient to represent this conditional distribution.
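The sketch below illustrates both halves of this idea in plain Python: building a generator input from noise plus a latent code, and scoring how well a predicted distribution recovers the code. The dimensions (62 noise values, one 10-way categorical code, two continuous codes), the Gaussian noise and all function names are assumptions chosen for illustration, and the logits, means and log standard deviations stand in for the outputs of a network that is not shown.

```python
import math
import random

def sample_generator_input(rng, noise_dim=62, n_categories=10, n_continuous=2):
    """Concatenate random noise, a one-hot categorical code and continuous codes."""
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    category = rng.randrange(n_categories)
    one_hot = [1.0 if k == category else 0.0 for k in range(n_categories)]
    c_cont = [rng.uniform(-1.0, 1.0) for _ in range(n_continuous)]
    return z + one_hot + c_cont, category, c_cont

def softmax(logits):
    """Turn raw scores into a probability distribution over categories."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_nll(logits, category):
    """Negative log-likelihood of the true category under softmax(logits)."""
    return -math.log(softmax(logits)[category])

def factored_gaussian_nll(means, log_stds, values):
    """Negative log-likelihood under independent Gaussians, one per continuous code."""
    nll = 0.0
    for mu, log_std, v in zip(means, log_stds, values):
        std = math.exp(log_std)
        nll += 0.5 * math.log(2 * math.pi) + log_std + 0.5 * ((v - mu) / std) ** 2
    return nll

rng = random.Random(0)
g_input, category, c_cont = sample_generator_input(rng)
print(len(g_input))  # 74 values: 62 noise + 10 one-hot + 2 continuous
```

Minimising these two negative log-likelihoods pushes the predicted distribution towards the code that was actually fed to the generator, which is how the mutual information term is optimised in practice.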

The researchers also found that the added mutual information term converges faster than the normal GAN objective, so the benefits of InfoGAN come essentially for free with a GAN.

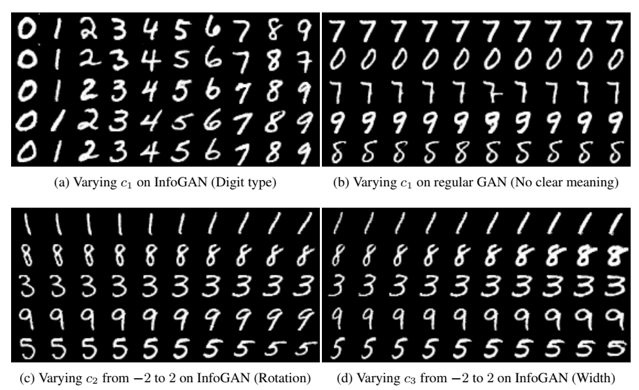

In one experiment, the researchers used a 10-state discrete code to capture the digit value, training on the MNIST dataset of handwritten digits. For comparison, they also trained a similar regular GAN, but without the regularisation term that maximises the mutual information.

The images show what happens when a single noise vector is held constant (each row) while the latent code is varied (each column). In (a), the InfoGAN discrete code consistently changes the digit. In (b), the regular GAN shows no meaningful variation. Panels (c) and (d) show the continuous codes being varied for InfoGAN.
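The grid layout described above can be sketched as follows. The code only builds the generator inputs for such a grid: each row shares one noise vector and each column sets a different value of a discrete code. The dimensions and the function name are assumptions for illustration, and the trained generator that would turn each input into an image is not shown.

```python
import random

def latent_traversal_grid(rng, n_rows=5, noise_dim=62, n_categories=10):
    """Build generator inputs: noise fixed per row, discrete code varied per column."""
    grid = []
    for _ in range(n_rows):
        # One noise vector is drawn per row and reused for every column.
        z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
        row = []
        for category in range(n_categories):
            one_hot = [1.0 if k == category else 0.0 for k in range(n_categories)]
            row.append(z + one_hot)
        grid.append(row)
    return grid

grid = latent_traversal_grid(random.Random(0), n_rows=3, noise_dim=4)
print(len(grid), len(grid[0]))  # 3 10
```

If the code has been disentangled, feeding one row of this grid to the generator should produce images that differ only in the attribute the code controls, such as the digit in panel (a).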

Hence we can clearly see that InfoGAN can discover, in an unsupervised fashion, attributes that were never suggested to it.

Note: You can find the original research paper here and a great source for further reading here.