No two people in this world write in the same style, unless one decides to make a serious career out of forgery.

Even identical twins, who share the same genes, end up with different handwriting.

A handwriting style is defined by the spacing between letters, the slope of the letters, the pressure applied and the thickness of the strokes. Legibility can also be affected by medical conditions.

Our writing contains spelling errors and undecipherable scrawls; think of doctors’ prescriptions.

But no matter how bad the handwriting, we somehow get the gist of it, through context and our own experience of writing.

Now, when a machine has to recognize millions of writing styles in real time and return a relevant result, in multiple languages no less, the task becomes daunting.

Google’s Gboard does all of it and does it with great accuracy.

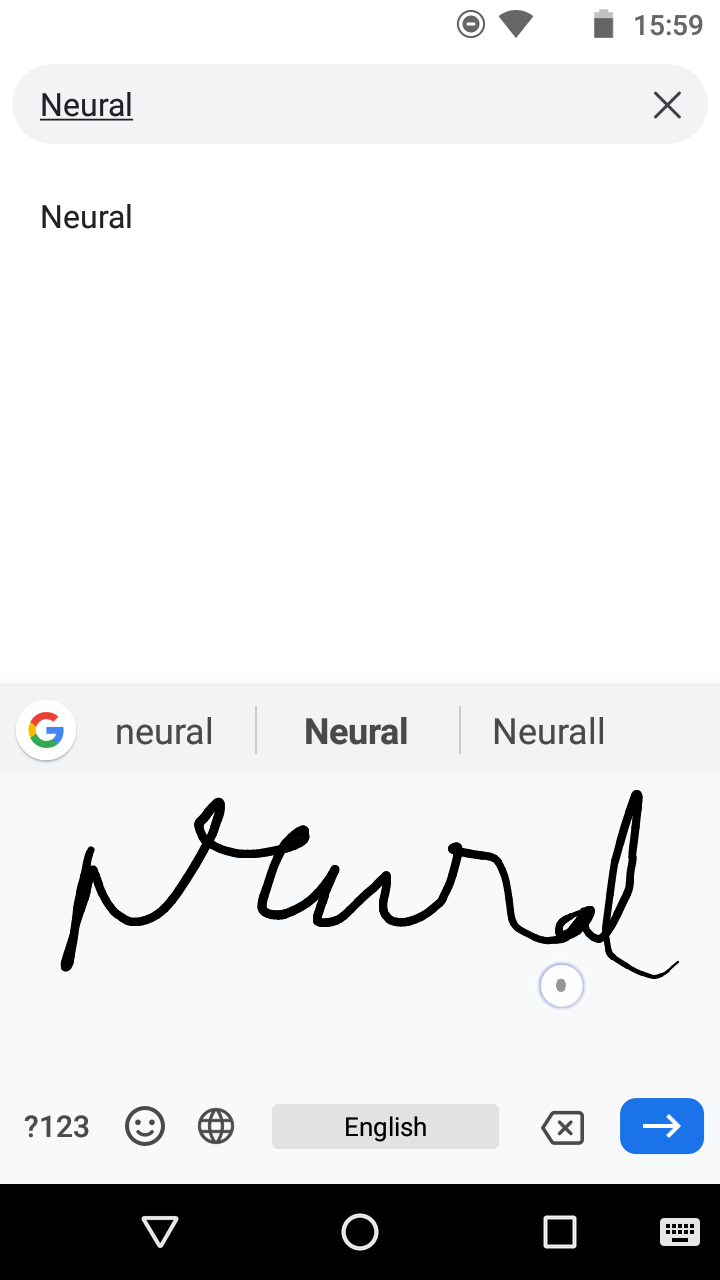

What does the picture above say? Is it ‘rural’, ‘mural’, ‘weird’ or ‘curd’? It can mean different things to different people, or it can be random scribbling.

But Gboard gets it right.

In a recent paper, Google demonstrated how it used machine learning models to enable Gboard to predict the right word across a wide range of writing styles and languages.

The paper’s fast, multi-language LSTM-based approach makes handwriting recognizers more flexible: the user may be writing on a large or small screen, in landscape or portrait mode, on devices with varying resolutions and touch sensitivities.

How Does ML Help?

The first step is to normalize the touch-point coordinates to compensate for the varying screen resolutions.
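The article does not say exactly how the coordinates are normalized. As a minimal sketch, assuming one common scheme (shift the ink so its bounding box starts at the origin, then divide by its height so the aspect ratio is preserved), the step might look like this; `normalize_points` is a hypothetical name:

```python
from typing import List, Tuple

Point = Tuple[float, float]

def normalize_points(points: List[Point]) -> List[Point]:
    """Map raw touch coordinates to a resolution-independent frame.

    Assumed scheme: move the bounding box's top-left corner to (0, 0) and
    divide by the ink height (falling back to the width for flat strokes),
    so the same word written on a low- or high-resolution screen ends up
    with the same coordinates.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    height = max(ys) - min_y
    width = max(xs) - min_x
    scale = height if height > 0 else (width if width > 0 else 1.0)
    return [((x - min_x) / scale, (y - min_y) / scale) for x, y in points]

# The same three-point stroke captured at two different resolutions.
low_res = [(0, 0), (10, 20), (30, 40)]
high_res = [(100, 50), (120, 90), (160, 130)]  # 2x scale, shifted
print(normalize_points(low_res) == normalize_points(high_res))  # True
```

Dividing by height rather than width keeps a long word and a short word at the same letter size, which is usually what a recognizer wants.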

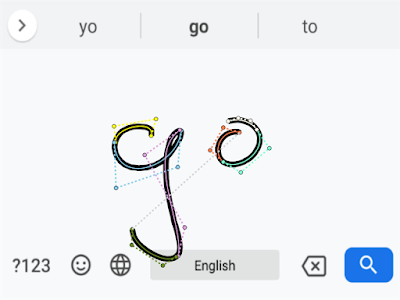

Handwriting on a smartphone screen is often cursive, and capturing the shape of the letters helps the model learn more accurately. To do this, the Google researchers convert the sequence of touch points in the scribble into a sequence of cubic Bezier curves.
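The paper’s own fitting procedure is not described here (for instance, how a stroke is split into several curves, or which error is minimized). As a minimal sketch of the core idea, the function below fits a single cubic Bezier curve to a run of points by least squares, assuming the endpoints are pinned to the first and last points and each point’s curve parameter t comes from its distance along the ink (chord-length parameterization). All names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def bernstein(t: float) -> Tuple[float, float, float, float]:
    """Cubic Bernstein basis B0..B3 evaluated at t."""
    u = 1.0 - t
    return (u * u * u, 3 * u * u * t, 3 * u * t * t, t * t * t)

def chord_params(points: List[Point]) -> List[float]:
    """Give each point a parameter t in [0, 1] by distance travelled along the ink."""
    dist = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dist.append(dist[-1] + math.hypot(x1 - x0, y1 - y0))
    total = dist[-1]
    if total == 0:
        return [0.0] * len(points)  # all points coincide
    return [d / total for d in dist]

def fit_cubic_bezier(points: List[Point]) -> Tuple[Point, Point, Point, Point]:
    """Least-squares cubic Bezier with its endpoints pinned to the data.

    Returns (P0, P1, P2, P3); P1 and P2 are the two control points.
    """
    p0, p3 = points[0], points[-1]
    a11 = a12 = a22 = 0.0
    r1 = [0.0, 0.0]
    r2 = [0.0, 0.0]
    for q, t in zip(points, chord_params(points)):
        b0, b1, b2, b3 = bernstein(t)
        for i in range(2):
            # What P1 and P2 must explain once the fixed endpoints are removed.
            residual = q[i] - b0 * p0[i] - b3 * p3[i]
            r1[i] += b1 * residual
            r2[i] += b2 * residual
        a11 += b1 * b1
        a12 += b1 * b2
        a22 += b2 * b2
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        # Too few distinct points to solve: put the control points on the chord.
        p1 = tuple((2 * p0[i] + p3[i]) / 3 for i in range(2))
        p2 = tuple((p0[i] + 2 * p3[i]) / 3 for i in range(2))
        return p0, p1, p2, p3
    # Solve the 2x2 normal equations with Cramer's rule, per coordinate.
    p1 = tuple((a22 * r1[i] - a12 * r2[i]) / det for i in range(2))
    p2 = tuple((a11 * r2[i] - a12 * r1[i]) / det for i in range(2))
    return p0, p1, p2, p3

# Points evenly spaced along a straight line from (0, 0) to (3, 0).
line = [(3 * i / 10, 0.0) for i in range(11)]
print(fit_cubic_bezier(line))  # control points close to (1, 0) and (2, 0)
```

Pinning the endpoints keeps consecutive curves joined, so only the two control points per curve are free; that is what makes the fit a small 2x2 linear system.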

Bezier curves are already widely used in fonts, but using them as the input to a recognizer is a departure from the segment-and-decode approach Google used previously.

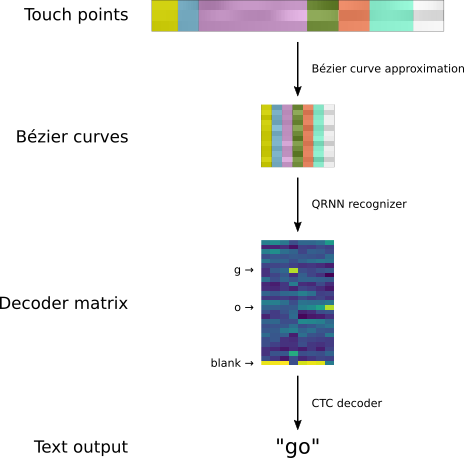

The figure above illustrates curve fitting on the user’s input. The letter ‘g’ is represented by four cubic Bezier curves, shown in yellow, blue, pink and green, each with two control points.

This sequence of Bezier curves is then fed into a recurrent neural network (RNN).
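Before the curves reach the RNN, each one has to become a fixed-length vector of numbers. The paper defines its own per-curve features, which are not reproduced here; this sketch uses a hypothetical 7-value encoding (end point and both control points relative to the curve’s start, plus a pen-up flag) only to show how strokes become a single input sequence.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Curve = Tuple[Point, Point, Point, Point]  # (start, control 1, control 2, end)

def curve_features(curve: Curve, pen_up: bool) -> List[float]:
    """Hypothetical 7-value encoding of one cubic Bezier curve.

    Positions are taken relative to the curve's start, so the same shape
    gives the same features wherever it is written on the screen.
    """
    (sx, sy), (ax, ay), (bx, by), (ex, ey) = curve
    return [
        ex - sx, ey - sy,        # end point
        ax - sx, ay - sy,        # first control point
        bx - sx, by - sy,        # second control point
        1.0 if pen_up else 0.0,  # 1.0 if the pen lifts after this curve
    ]

def strokes_to_sequence(strokes: List[List[Curve]]) -> List[List[float]]:
    """Flatten strokes (each a list of curves) into one RNN input sequence."""
    sequence = []
    for stroke in strokes:
        for i, curve in enumerate(stroke):
            sequence.append(curve_features(curve, pen_up=(i == len(stroke) - 1)))
    return sequence

# Two strokes: the first made of two curves, the second of one.
strokes = [
    [((0, 0), (0, 1), (1, 1), (1, 0)), ((1, 0), (1, -1), (2, -1), (2, 0))],
    [((3, 0), (3, 1), (4, 1), (4, 0))],
]
for vector in strokes_to_sequence(strokes):
    print(vector)
```

Under this encoding, the four-curve ‘g’ in the figure would become four consecutive vectors in the RNN’s input sequence.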

Since the handwritten input is now represented as a sequence of Bezier curves, that sequence has to be translated back into actual written characters.

A multi-layer RNN processes these curves and produces a decoding matrix.

The rows of this matrix correspond to the letters ‘a’ to ‘z’, and each column holds the probability distribution over those letters for one input curve.

Source: Blog by Google
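How the network turns its raw outputs into per-curve probabilities is not spelled out here; a softmax over each column is the standard choice and is assumed in this sketch. The matrix also carries one extra row for a ‘blank’ label, which the CTC step relies on; that row layout, `decoding_matrix` and the example scores are hypothetical.

```python
import math
import string
from typing import List

# Assumed row layout: CTC blank first, then 'a'..'z'.
LABELS = "-" + string.ascii_lowercase

def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def decoding_matrix(scores_per_curve: List[List[float]]) -> List[List[float]]:
    """Return matrix[row][col]: rows are LABELS, columns are input curves.

    scores_per_curve[t] holds one raw network score per label for curve t.
    """
    columns = [softmax(scores) for scores in scores_per_curve]
    return [list(row) for row in zip(*columns)]  # transpose to rows x columns

# Hypothetical scores for two curves: the first favours 'g', the second 'o'.
scores = [[0.0] * len(LABELS) for _ in range(2)]
scores[0][LABELS.index("g")] = 5.0
scores[1][LABELS.index("o")] = 5.0
m = decoding_matrix(scores)
best = [LABELS[max(range(len(LABELS)), key=lambda r: m[r][c])] for c in range(2)]
print(best)  # ['g', 'o']
```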

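In the word ‘go’, ‘g’ is represented by four cubic Bezier curves and ‘o’ by three, so seven curves have to map to just two characters.

To resolve this mismatch and recover the actual number of characters in the input, the model uses the Connectionist Temporal Classification (CTC) algorithm, which selects the most probable labelling for a given input sequence. CTC adds a special ‘blank’ label that lets the network emit no character for a curve, and it merges repeated labels, so several consecutive curves can collapse into a single letter.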
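The article does not say which CTC decoding strategy runs before the FSM decoder, so this is only an illustration of how CTC’s collapsing rule maps seven curves to two letters. Greedy (best-path) decoding picks the most likely label per curve, merges consecutive repeats, then drops blanks. The matrix is stored column by column (one list per curve), and `column` is a hypothetical helper for building example data.

```python
import string
from typing import List

BLANK = "-"  # CTC blank label (assumed to sit at row 0)
LABELS = BLANK + string.ascii_lowercase

def column(peak: str, p: float = 0.9) -> List[float]:
    """Hypothetical helper: one curve's distribution, with mass p on `peak`."""
    rest = (1.0 - p) / (len(LABELS) - 1)
    return [p if label == peak else rest for label in LABELS]

def ctc_best_path(matrix: List[List[float]]) -> str:
    """Greedy CTC decoding; matrix[t] is the label distribution for curve t."""
    best = [LABELS[max(range(len(col)), key=col.__getitem__)] for col in matrix]
    out = []
    prev = None
    for label in best:
        # Keep a label only if it differs from the previous one and is not blank.
        if label != prev and label != BLANK:
            out.append(label)
        prev = label
    return "".join(out)

# Seven curves for the word "go": four for 'g', three for 'o'.
go_matrix = [column(c) for c in "ggggooo"]
print(ctc_best_path(go_matrix))  # go
```

The blank is what makes double letters possible: the per-curve labels ‘l’, blank, ‘l’ decode to “ll”, whereas ‘l’, ‘l’ collapses to a single “l”.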

These per-curve probability distributions are then decoded into letters by a finite state machine (FSM) decoder.

The decoder combines the outputs of the neural network with a character-based language model, which rewards common character sequences and penalizes uncommon ones.
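An FSM decoder is beyond a short example. As a much simpler stand-in that shows the same idea of combining network outputs with a character language model, this sketch rescores a fixed list of candidate words: each candidate gets log P_CTC(word), computed with the CTC forward algorithm, plus a weighted character-bigram log-probability. The bigram table, the candidates and the weight are made up for illustration.

```python
import math
import string
from typing import Dict, List

BLANK = "-"
LABELS = BLANK + string.ascii_lowercase
INDEX = {c: i for i, c in enumerate(LABELS)}

def column(weights: Dict[str, float]) -> List[float]:
    """Hypothetical helper: given label probabilities, spread the rest evenly."""
    rest = (1.0 - sum(weights.values())) / (len(LABELS) - len(weights))
    return [weights.get(c, rest) for c in LABELS]

def ctc_probability(matrix: List[List[float]], word: str) -> float:
    """P(word | matrix), summed over all CTC alignments (forward algorithm).

    matrix[t][k] is the probability of LABELS[k] for input curve t.
    """
    ext = [BLANK]  # word with blanks interleaved: - w1 - w2 - ...
    for ch in word:
        ext += [ch, BLANK]
    alpha = [0.0] * len(ext)
    alpha[0] = matrix[0][INDEX[BLANK]]
    if len(ext) > 1:
        alpha[1] = matrix[0][INDEX[ext[1]]]
    for probs in matrix[1:]:
        new = [0.0] * len(ext)
        for s, label in enumerate(ext):
            total = alpha[s]  # stay on the same label
            if s >= 1:
                total += alpha[s - 1]  # advance by one position
            if s >= 2 and label != BLANK and label != ext[s - 2]:
                total += alpha[s - 2]  # skip a blank between different letters
            new[s] = total * probs[INDEX[label]]
        alpha = new
    # A valid alignment ends on the last letter or on the trailing blank.
    return alpha[-1] + (alpha[-2] if len(ext) > 1 else 0.0)

# Made-up character bigram log-probabilities; '^' = word start, '$' = word end.
BIGRAM_LOGP = {
    ("^", "g"): math.log(0.02), ("^", "q"): math.log(0.002),
    ("g", "o"): math.log(0.1), ("q", "o"): math.log(0.001),
    ("o", "$"): math.log(0.2),
}
UNSEEN_LOGP = math.log(1e-4)  # crude floor for pairs not in the table

def lm_logp(word: str) -> float:
    chars = ["^"] + list(word) + ["$"]
    return sum(BIGRAM_LOGP.get(pair, UNSEEN_LOGP) for pair in zip(chars, chars[1:]))

def rescore(matrix: List[List[float]], candidates: List[str], lm_weight: float = 1.0) -> str:
    """Return the candidate with the highest combined network + LM score."""
    def score(word: str) -> float:
        p = ctc_probability(matrix, word)
        net = math.log(p) if p > 0 else float("-inf")
        return net + lm_weight * lm_logp(word)
    return max(candidates, key=score)

# Four curves that look equally like 'g' or 'q', then three clear 'o' curves.
matrix = [column({"g": 0.45, "q": 0.45})] * 4 + [column({"o": 0.9})] * 3
print(rescore(matrix, ["go", "qo"]))  # go
```

Here the network alone cannot separate “go” from “qo”; the language model breaks the tie because “go” is the far more common character sequence.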

The RNN variant used in this approach is a bidirectional quasi-recurrent neural network (QRNN).

A QRNN alternates between convolutional and recurrent layers, so much of the computation can be parallelized across time steps, while the number of weights stays relatively small.
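To make “alternates between convolutional and recurrent layers” concrete, here is a toy, pure-Python sketch of one QRNN layer with fo-pooling, following the general recipe of Bradbury et al.’s QRNN paper. The z, f and o gates come from a causal convolution over time, computable for all steps at once, and the only sequential part is an element-wise recurrence with no weight matrix. Running one layer forwards and another backwards gives the bidirectional variant. The sizes, random weights, missing biases and filter width `k=2` are assumptions, not Gboard’s configuration.

```python
import math
import random
from typing import List

Vector = List[float]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W: List[Vector], v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in W]

class QRNNLayer:
    """Toy unidirectional QRNN layer with fo-pooling (biases omitted)."""

    def __init__(self, input_dim: int, hidden_dim: int, k: int = 2, seed: int = 0):
        rng = random.Random(seed)
        n_in = k * input_dim  # a width-k window of inputs feeds each gate

        def init() -> List[Vector]:
            return [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                    for _ in range(hidden_dim)]

        self.k, self.input_dim, self.hidden_dim = k, input_dim, hidden_dim
        self.Wz, self.Wf, self.Wo = init(), init(), init()

    def window(self, xs: List[Vector], t: int) -> Vector:
        # Concatenate x[t-k+1] .. x[t]; zero-pad before the sequence starts (causal).
        out: Vector = []
        for j in range(t - self.k + 1, t + 1):
            out += xs[j] if j >= 0 else [0.0] * self.input_dim
        return out

    def __call__(self, xs: List[Vector]) -> List[Vector]:
        # Convolutional part: each time step is independent, so it parallelizes.
        windows = [self.window(xs, t) for t in range(len(xs))]
        Z = [[math.tanh(v) for v in matvec(self.Wz, w)] for w in windows]
        F = [[sigmoid(v) for v in matvec(self.Wf, w)] for w in windows]
        O = [[sigmoid(v) for v in matvec(self.Wo, w)] for w in windows]
        # Recurrent part (fo-pooling): element-wise only, no weight matrices.
        c = [0.0] * self.hidden_dim
        hs = []
        for z, f, o in zip(Z, F, O):
            c = [fi * ci + (1.0 - fi) * zi for fi, ci, zi in zip(f, c, z)]
            hs.append([oi * ci for oi, ci in zip(o, c)])
        return hs

def bidirectional(fwd: QRNNLayer, bwd: QRNNLayer, xs: List[Vector]) -> List[Vector]:
    """Run fwd left-to-right and bwd right-to-left, then concatenate per step."""
    h_fwd = fwd(xs)
    h_bwd = bwd(xs[::-1])[::-1]
    return [a + b for a, b in zip(h_fwd, h_bwd)]

# Five hypothetical per-curve feature vectors of size 4.
xs = [[0.1 * t, -0.2, 0.3, 0.05 * t] for t in range(5)]
out = bidirectional(QRNNLayer(4, 3, seed=0), QRNNLayer(4, 3, seed=1), xs)
print(len(out), len(out[0]))  # 5 6
```

The bidirectional pass matters for handwriting because a curve’s meaning often depends on what is written after it, such as a descender that turns an ‘o’ into a ‘g’.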

Fewer weights mean a smaller model to download onto devices running Gboard. To reduce the delay before results pop up, the recognition models are also converted to TensorFlow Lite models.
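The size argument can be checked with standard parameter counts. An LSTM layer has four gates, each with input weights, recurrent weights and a bias, while a fo-pooling QRNN layer has three gates computed by a width-k convolution and no recurrent weight matrices. The layer sizes below are hypothetical, and the savings depend on k and the dimensions: a wide enough filter would make the QRNN the larger of the two.

```python
def lstm_params(input_dim: int, hidden_dim: int) -> int:
    # 4 gates x (input weights + recurrent weights + bias)
    return 4 * (hidden_dim * (input_dim + hidden_dim) + hidden_dim)

def qrnn_params(input_dim: int, hidden_dim: int, k: int = 2) -> int:
    # 3 gates (z, f, o) x (width-k convolution weights + bias); no recurrent matrix
    return 3 * (hidden_dim * k * input_dim + hidden_dim)

# Hypothetical layer: 64 inputs, 64 hidden units, float32 weights (4 bytes each).
for name, n in [("LSTM", lstm_params(64, 64)), ("QRNN", qrnn_params(64, 64))]:
    print(f"{name}: {n} weights, {n * 4 / 1024:.1f} KiB")
```

A bidirectional layer doubles both counts, so the ratio between them stays the same.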

Gboard’s handwriting recognition now supports more than 100 languages, from Mandarin to Malayalam, and probably even maths, the language of nature.

Learn more about the work here.