Recent years have been marked by chip manufacturers entering the AI and machine learning fields. The market is pegged as one of the biggest opportunities since the PC market flourished in the early 2000s. Many prominent companies, including Nvidia and Intel, have already begun testing the waters, selling to enterprises and big companies looking to get into AI.

Until now, Nvidia has held the lead without question. After sinking a sizeable amount of R&D into training deep learning models, Nvidia has begun producing hardware built specifically for training tasks. Previously, this work was done on its Quadro and Titan enterprise chips, with the mantle now being passed on to the Tensor Cores in its latest generation of consumer GPUs.

However, Nvidia is not the only player in the field. Intel has been playing catch-up with Nvidia for a while now, acquiring the deep learning startup Nervana in 2016. Since then, it has been rumoured that Intel has been working on newer chips to capitalize on the ever-growing need for inference hardware. That rumour was confirmed this year at CES, where Intel announced a dedicated chip for inference.

Now, the stage has been set for the next big showdown in computing between Intel and Nvidia. While they are approaching the market from opposite ends of the same spectrum, future advancements in the state of AI might give the edge to one of them.

Learning vs. Inference: How Does Specialization Matter?

Utilising an AI model for any real-world application involves two procedures: training and inference. Training is just what it sounds like: the model is trained on a sample of the data it will eventually process. From this, it can ‘learn’ the characteristics of the required data and store them in its ‘memory’. After this, the model is stripped down so it can run without consuming too much computing power, and is then used for the purpose it was developed for.

Even stripped down, the model still requires a considerable amount of compute to run. When the model predicts results based on its training, the process is called inference. The fight now is over providing compute for both learning and inference, which are different workloads that each run best on specialized devices.
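To make the distinction concrete, here is a minimal sketch in plain Python. It is only an illustration: the function names (`train`, `strip_down`, `infer`), the toy data and the rounding step are all assumptions made for this example, and the rounding is a crude stand-in for real model compression rather than any vendor's method.

```python
# Toy illustration of training vs. inference with a one-feature linear model.
# All names and data here are hypothetical, chosen only for this sketch.

def train(samples, epochs=2000, lr=0.05):
    """Training: many passes over the data, adjusting w and b each time."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def strip_down(params, digits=2):
    """Crude stand-in for model compression: keep fewer digits per weight."""
    return tuple(round(p, digits) for p in params)

def infer(params, x):
    """Inference: one cheap forward pass using frozen parameters."""
    w, b = params
    return w * x + b

samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # follows y = 2x + 1
params = strip_down(train(samples))
prediction = infer(params, 10.0)
print(params, prediction)
```

Training here loops over the data thousands of times, while inference is a single multiply and add. That asymmetry, scaled up to models with millions of parameters, is why the two workloads tend to favour different hardware.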

Learning tasks are well suited to parallelization, as they involve running a large number of independent operations at the same time. Nvidia has a natural advantage here, as its GPUs can accelerate learning by distributing the processing across CUDA cores. Moreover, it has invested heavily in the Compute Unified Device Architecture, or CUDA, as a programming platform. Due to Nvidia’s efforts, CUDA is not only integrated into frameworks such as TensorFlow and Hadoop, but also offered by cloud service providers such as Microsoft and Amazon.
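The idea of distributing work can be sketched with a matrix-vector product, where every output row can be computed independently of the others. The example below uses a CPU thread pool from Python's standard library purely as a stand-in; it is not CUDA code and does not demonstrate a speedup, and the helper names `dot` and `matvec_parallel` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, vec):
    """Dot product of one matrix row with the input vector."""
    return sum(a * b for a, b in zip(row, vec))

def matvec_parallel(matrix, vec, workers=4):
    """Hand each row to a worker; no row depends on any other row's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input rows.
        return list(pool.map(lambda row: dot(row, vec), matrix))

matrix = [[1, 2], [3, 4], [5, 6]]
vec = [10, 1]
result = matvec_parallel(matrix, vec)
print(result)
```

A GPU applies the same principle at a far larger scale, assigning independent pieces of work like these rows to thousands of cores at once.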

Intel, on the other hand, has largely specialized in CPUs that offer unmatched performance for sequential workloads. Inference is a highly sequential workload, making it compute-heavy on many devices. However, inference workloads largely take place on the ‘edge’, which is to say that they are not processed on servers. They usually run on mobile devices, which typically don’t have the compute required for heavier inference workloads.

Some manufacturers, such as Apple, Huawei and Samsung, have begun to get past this limitation by building their own inference chips. However, experts predict that the next step in on-device inference will come from FPGAs and mobile ASICs.

The Transition On The Inside Of AI

FPGAs and ASICs are two of the biggest developing markets for AI learning and inference. While ASICs can be used for heavier inference and learning workloads, as seen with Google’s creation of the TPU, FPGAs can provide these capabilities on mobile devices. Both giants are already making strides towards this end.

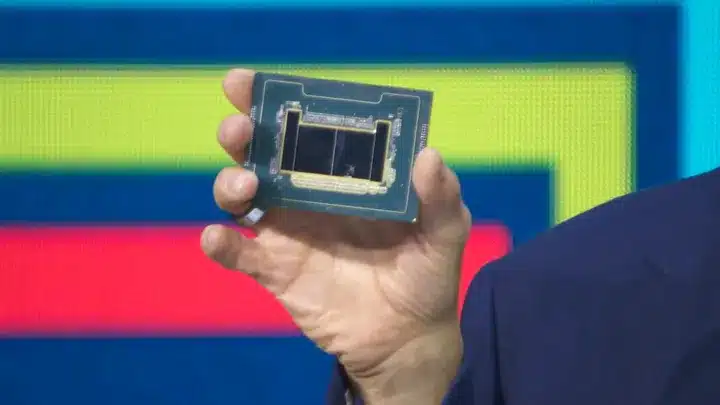

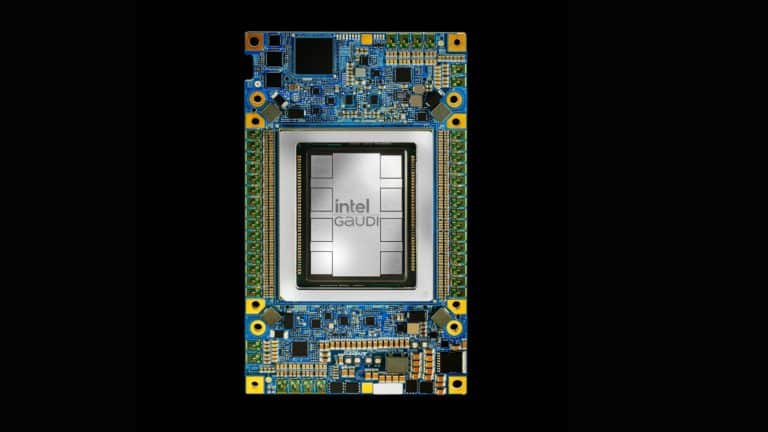

Intel released Nervana, an ASIC for inference that supports a large amount of parallelization in server settings. It has also revamped the chip structure considerably and built it on a 10nm manufacturing process. The chip is likely targeted at enterprise users, as suggested by Intel bringing on Facebook as a development partner to ensure Nervana is easy to use.

Nvidia has also begun to dip its toes in the inference pool, releasing a deep learning inference platform with a heavy focus on TensorRT. Reportedly, TensorRT delivers up to 40x higher throughput than CPU-only inference while keeping latency real-time. The company has also stated that one of its GPU-accelerated servers is as powerful as 11 CPU-based ones.

The battlefield has been set for the next generation of compute power. The giants will duke it out, with the consumer being the biggest winner due to innovation driven by competition.