XGBoost, an abbreviation for eXtreme Gradient Boosting is one of the most commonly used machine learning algorithms. Be it for classification or regression problems, XGBoost has been successfully relied upon by many since its release in 2014. It is a library for implementing optimised and distributed gradient boosting and provides a great framework for C++, Java, Python, R and Julia.

The Reason Behind Its Popularity

XGBoost provides a number of features that can greatly impact the efficiency and performance of a model.

Parallelisation, distributed computing, cache optimisation, automatic handling of missing data are some of its features that stand out compared to other algorithms and libraries.

Another set of factors include the speed of execution and the performance of the model in both regression and classification type problems. The algorithm is most effective in producing a model with lesser variance and a more stable prediction.

Your First Program To Implement XGBoost In Python

We will be using a subset of the dataset given for the hackathon “Predicting House Prices In Bengaluru” at MachineHack.com. (Click here to download the complete dataset). You can also take a look at our latest hackathon Uber City Traffic Visualisation Challenge.

Installing XGBoost

Use the python pip installer to install the XGBoost library from your terminal.

pip3 install xgboost

Let’s get coding:

Importing the libraries

import numpy as np

import pandas as pd

Importing the dataset

dataset = pd.read_excel('House_prices.xlsx')

The data consists of features of Houses in locations across Bangalore. The problem is to predict the ‘price’ of the houses from ‘total_sqfeet’, ‘size’, ‘bath’, ‘balcony’, ‘area_type’ and ‘location’.

The data has been preprocessed to some extent. Click here for instructions on Data preprocessing in python.

Extracting the values and categorising the features to dependent and Independent Variables

X = dataset.iloc[:,[0,1,2,3,4,6]].values

y = dataset.iloc[:, 5].values

- X: Set of Independent features(‘total_sqfeet’, ‘size’, ‘bath’, ‘balcony’, ‘area_type’ and ‘location’).

- Y: Dependendent Feature (price)

Handling categorical Variables

The first three features ‘size’, ‘area_type’ and ‘location’ in the dataset consist of categorical values and hence is required to encode them to numbers.

We will use the label encoder to encode the features into numerical values.

from sklearn.preprocessing import LabelEncoder

le_X_0= LabelEncoder()

le_X_1= LabelEncoder()

le_X_2= LabelEncoder()

X[:, 0] = le_X_0.fit_transform(X[:, 0])

X[:, 1] = le_X_1.fit_transform(X[:, 1])

X[:, 2] = le_X_2.fit_transform(X[:, 2])

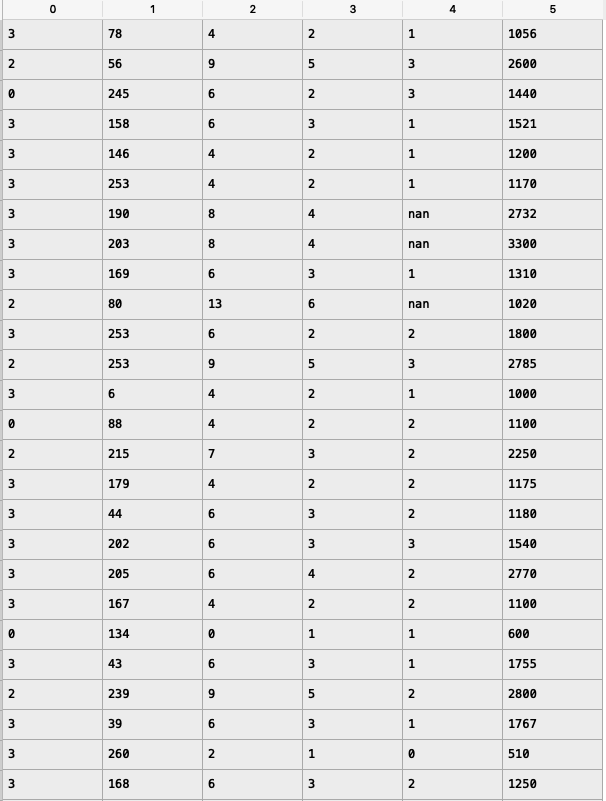

X after Label Encoding:

Splitting the data set into test and training set

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size = 0.2, random_state = 0)

The test set will consist of 20% of the data in the dataset while the training set will contain 80%.

Creating and initialising XGBoost Regressor

from xgboost import XGBRegressor

regressor = XGBRegressor()

Fitting the regressor with data

After initialising the regressor we need to fit the regressor with training data so that it can learn the correlations between the features to give an accurate prediction for new inputs.

regressor.fit(X_train, y_train)

Predicting the prices

Predicting for training set:

Y_pred_train = regressor.predict(X_train)

- The above code will give the X_tain (training set with independent features) as input to the regressor and predicts values for prices. The predicted values are stored in the numpy array Y_pred_train.

Predicting for test set:

y_pred = regressor.predict(X_test)

- The above code will give the X_test (test set with independent features) as input to the regressor and predicts values for prices. The predicted values are stored in the numpy array Y_pred

Evaluating accuracy with RMSLE

Calculating RMSLE

def rmsle(y_pred,y_test) :

error = np.square(np.log10(y_pred +1) - np.log10(y_test +1)).mean() ** 0.5

Acc = 1 - error

return Acc

print("Accuracy attained on Training Set = ",rmsle(Y_pred_train, y_train))

print("Accuracy attained on Test Set = ",rmsle(y_pred,y_test))

Output