We live in a world that largely runs on smart devices, and safety has become a key feature for leading tech companies. There is now a push to move fully to AI-enabled devices for smarter, more efficient features. To fit machine learning models onto these palm-sized gadgets, neural networks are compressed through quantization, which stores weights and activations at lower numerical precision to make the models smaller.

Smaller models occupy less space, but they are more vulnerable to adversarial attacks.

When a deep learning model is cut down in size, errors such as misclassification can occur because of an error amplification effect. This is counterintuitive: one might expect rounding to lower precision to wash out small perturbations rather than magnify them. The effect grows stronger as the number of layers increases. When quantization is applied to a model whose inputs carry noise, the noise is amplified from layer to layer, resulting in undesirable outputs such as mislabeled image classes.
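The amplification effect can be seen in a toy example. The sketch below assumes a plain uniform quantizer (the `quantize` helper, the step size and the input values are all illustrative, not the researchers' code). A small perturbation that stays inside a quantization bucket is erased, while one that crosses a bucket boundary is blown up to a full quantization step.

```python
import numpy as np

def quantize(x, step=0.25):
    """Uniform quantizer: snap a value to the nearest multiple of `step`."""
    return float(np.round(x / step) * step)

noise = 0.01  # small input perturbation (illustrative value)

# Case 1: the clean and noisy values fall in the same bucket,
# so quantization removes the noise entirely.
inside = abs(quantize(0.05 + noise) - quantize(0.05))

# Case 2: the noise pushes the value across the bucket boundary at 0.125,
# so the output jumps by a whole step, far more than the input moved.
across = abs(quantize(0.12 + noise) - quantize(0.12))

print(f"error inside bucket:   {inside:.2f}")
print(f"error across boundary: {across:.2f}")
```

In a deep network, each such jump is fed to the next layer, which is why the effect compounds with depth.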

At smaller sizes, there is a trade-off between memory and accuracy. A team at MIT has addressed this problem by demonstrating that controlling the Lipschitz constant of a network is effective.

“Our technique limits error amplification and can even make compressed deep learning models more robust than full-precision models,” says Song Han, an assistant professor in MIT’s Department of Electrical Engineering and Computer Science and a member of MIT’s Microsystems Technology Laboratories.

Tweaking Quantization

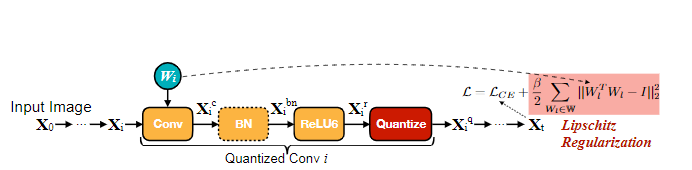

The researchers propose a method called Defensive Quantization (DQ). It mitigates noise by controlling the Lipschitz constant of the network. The Lipschitz constant bounds how much a function's output can change relative to a change in its input, and it is used to analyze the continuity of functions and the uniqueness of solutions. Since neural networks are a series of mathematical operations at their core, controlling a few mathematical constraints can open up new avenues for optimisation.
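Formally, a function f is k-Lipschitz if the distance between f(x) and f(y) never exceeds k times the distance between x and y, and the smallest such k is its Lipschitz constant. As a minimal sketch (assuming a toy linear layer with random weights and the Euclidean norm, not the researchers' code), the constant of a linear layer is the largest singular value of its weight matrix, which can be checked numerically:

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.normal(size=(4, 6))  # toy weight matrix of a linear layer x -> W @ x

# Under the L2 norm, the Lipschitz constant of a linear map is its
# spectral norm, i.e. the largest singular value of W.
k = float(np.linalg.norm(W, ord=2))

# Empirical check: for random input pairs, the output distance divided by
# the input distance should never exceed k.
x = rng.normal(size=(6, 1000))
y = rng.normal(size=(6, 1000))
out_dist = np.linalg.norm(W @ x - W @ y, axis=0)
in_dist = np.linalg.norm(x - y, axis=0)
max_ratio = float(np.max(out_dist / in_dist))

print(f"Lipschitz constant k:   {k:.3f}")
print(f"largest observed ratio: {max_ratio:.3f}")
```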

Significance of the Lipschitz constant:

- A Lipschitz-continuous loss comes with faster theoretical convergence guarantees for convex loss functions.

- A smaller value (less than 1) means a lower chance of gradient explosion.

The Lipschitz constant of the network is bounded by the product of the Lipschitz constants of its individual layers, so it can grow exponentially with depth (error amplification) when the per-layer value is greater than 1. A regularization term is therefore defined to keep the Lipschitz constant small.
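The article does not reproduce the regularization term, so the following is only a sketch under stated assumptions. It uses an orthogonality penalty, one common way to keep per-layer Lipschitz constants near 1, which penalizes the squared distance between each layer's Gram matrix and the identity. The function names and the `beta` weight are hypothetical, and the layers are toy linear maps.

```python
import numpy as np

def lipschitz_upper_bound(weights):
    """Product of per-layer spectral norms: an upper bound on the Lipschitz
    constant of a stack of linear layers (assuming 1-Lipschitz activations)."""
    return float(np.prod([np.linalg.norm(W, ord=2) for W in weights]))

def orthogonality_penalty(weights, beta=1e-3):
    """Assumed regularizer: (beta / 2) * sum over layers of the squared
    Frobenius norm of (W.T @ W - I). It is zero when every W has
    orthonormal columns, i.e. when every singular value equals 1."""
    total = 0.0
    for W in weights:
        gram = W.T @ W
        total += float(np.sum((gram - np.eye(gram.shape[0])) ** 2))
    return 0.5 * beta * total

rng = np.random.default_rng(0)
depth, width = 10, 8

# Orthogonal layers (all singular values 1) versus the same layers scaled
# so that every singular value is 1.2.
orthogonal = [np.linalg.qr(rng.normal(size=(width, width)))[0] for _ in range(depth)]
scaled = [1.2 * Q for Q in orthogonal]

bound_orthogonal = lipschitz_upper_bound(orthogonal)  # stays at about 1
bound_scaled = lipschitz_upper_bound(scaled)          # grows as 1.2 ** depth

print(f"bound, orthogonal layers: {bound_orthogonal:.3f}")
print(f"bound, scaled layers:     {bound_scaled:.3f}")
print(f"penalty, orthogonal: {orthogonality_penalty(orthogonal):.6f}")
print(f"penalty, scaled:     {orthogonality_penalty(scaled):.6f}")
```

Adding such a penalty to the training loss pushes each layer toward a Lipschitz constant of 1, which keeps the product across layers from growing with depth.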

Models quantized to 8 bits or fewer are more susceptible to adversarial attacks, the researchers show, with accuracy falling from an already low 30-40 percent to less than 10 percent as bit width declines. But controlling the Lipschitz constraint during quantization restores some resilience. When the researchers added the constraint, they saw small performance gains in an attack, with the smaller models in some cases outperforming the 32-bit model.

DQ aims to get the best of both model quantization and adversarial defense.

When DQ is combined with other defense methods such as feature squeezing and adversarial training, the results improve further. This is especially true of adversarial training, in which the network is trained to classify adversarial samples correctly.
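To make adversarial training concrete, here is a minimal sketch on a toy logistic model. The article does not say which attack was used, so the fast gradient sign method (FGSM) is an assumption here, and the weights, input, `eps` and learning rate are illustrative values only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, x, y):
    """Binary cross-entropy of a logistic model for one sample, y in {0, 1}."""
    p = sigmoid(w @ x)
    return float(-(y * np.log(p) + (1 - y) * np.log(1 - p)))

def fgsm(w, x, y, eps):
    """FGSM: move the input by eps along the sign of the loss gradient."""
    grad_x = (sigmoid(w @ x) - y) * w  # d(loss)/dx for logistic regression
    return x + eps * np.sign(grad_x)

w = np.array([1.5, -2.0, 0.5])  # toy model weights
x = np.array([0.4, -0.3, 0.8])  # clean input
y = 1                           # its true label

# Craft an adversarial sample; it raises the loss on the true label.
x_adv = fgsm(w, x, y, eps=0.2)
clean_loss = loss(w, x, y)
adv_loss = loss(w, x_adv, y)

# One adversarial-training step: gradient descent on the loss at x_adv,
# so the model learns to classify the perturbed sample correctly.
lr = 0.5
w_new = w - lr * (sigmoid(w @ x_adv) - y) * x_adv
adv_loss_after = loss(w_new, x_adv, y)

print(f"clean loss:             {clean_loss:.3f}")
print(f"adversarial loss:       {adv_loss:.3f}")
print(f"adversarial loss after: {adv_loss_after:.3f}")
```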

As demand for smaller networks grows, hardware accelerators will aim for greater precision even at smaller scales. This brings more challenges, as the trade-offs between accuracy, latency and model size shift.

Key Takeaways Of Defensive Quantization

- DQ can even improve accuracy over conventionally quantized models when there is no attack.

- It improves the safety of neural networks while maintaining efficiency and robustness.

- Combining Lipschitz regularization with quantization makes the model more robust.

- Deep learning models can now be deployed on mobile devices more safely.
