Machine learning has found widespread application in almost every technology domain and is shaping how future products are built. With ML tools and techniques multiplying by the day, it has become difficult to assess how well ML actually solves problems and how practical it is. ML does not always meet expectations at business scale. Thanks to ongoing research, evaluating the context and performance of machine learning is now within reach.

The performance of ML applications is often not examined in depth because of the technical complexity of the whole process. To address this problem, a new benchmarking software suite called MLPerf has been developed with the aim of “measuring the speed of ML software and hardware”. It was developed by academics from Harvard University and Stanford University in collaboration with major tech companies such as Google, Intel and AMD, among others.

Measuring ML Performance

Since ML and AI applications are developing fast, they will require better hardware and software, as well as a benchmarking platform for ML. This is where MLPerf comes in. The inspiration for the suite came from two established benchmarking bodies, the Standard Performance Evaluation Corporation (SPEC) and the Transaction Processing Performance Council (TPC), which evaluate the performance of computing and database systems respectively.

Previously, tech companies measured their machine learning applications according to their own preferences, with the assistance of third parties. MLPerf aims to avoid this by having participating tech companies agree on a common approach to ML performance analysis, covering applications that range from mobile devices to cloud services.

Furthermore, MLPerf borrows conventions from SPEC and TPC as well as from other benchmarking efforts such as DeepBench by Baidu and DAWNBench by Stanford University.

The MLPerf platform has a set of goals:

- Accelerate progress in ML via fair and useful measurement

- Serve both the commercial and research communities

- Enable fair comparison of competing systems while encouraging innovation to improve the state of the art in ML

- Enforce replicability to ensure reliable results

- Keep benchmarking effort affordable so all can participate

To get more people working with MLPerf, the researchers have open-sourced it so that the developer community can modify and improve it. It is available on GitHub. It is still in its initial stages: MLPerf calls the current release an 'alpha' release, and the researchers aim to provide a stable software environment by the end of this year.

Areas Covered For Benchmarking

The software suite covers seven areas of ML in its benchmarking tests.

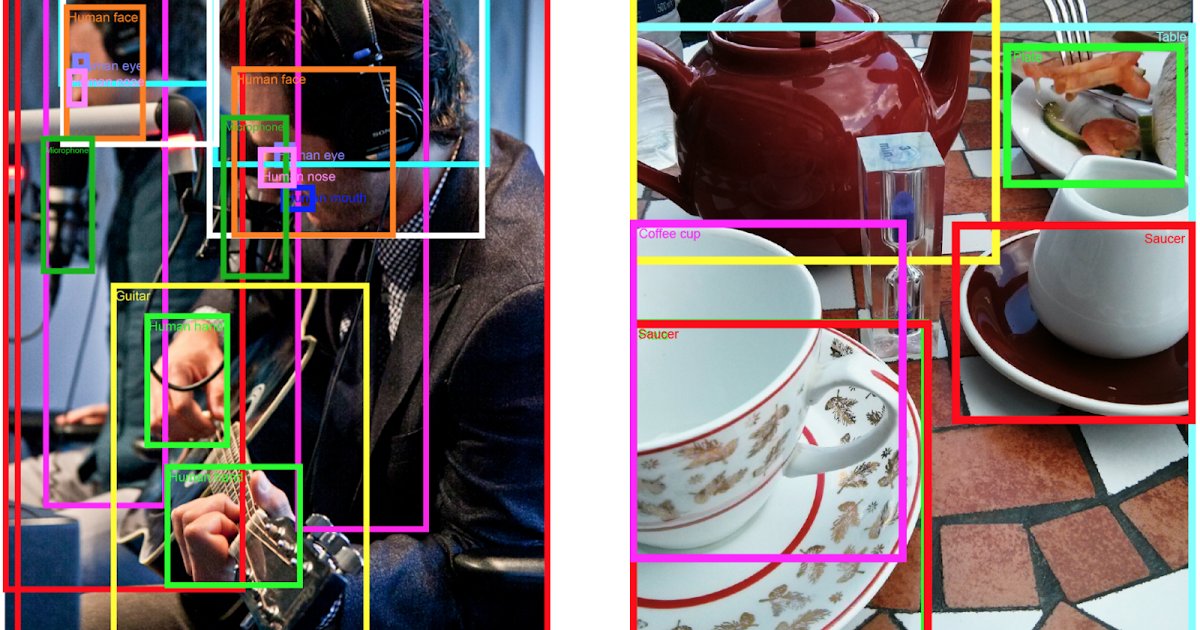

- Image Classification

- Speech Recognition

- Object Detection

- Translation

- Recommendation

- Sentiment Analysis

- Reinforcement Learning

For each of these areas, reference implementations are available on GitHub. A reference implementation means that the benchmark has been implemented in at least one framework with at least one ML model. Each reference implementation comes with a Dockerfile so that the benchmark can be run in a container. In addition, the reference implementations provide scripts (usually written in Python) for downloading the datasets and for training models on them. Documentation for running these scripts and ML models is also provided.
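To make the idea of measuring training speed more concrete, here is a minimal sketch of a timing harness wrapped around a training script. It is not taken from the MLPerf reference code: the names `time_to_target`, `train_one_epoch` and `evaluate`, and the notion of a numeric quality target, are assumptions introduced purely for illustration.

```python
import time
from typing import Callable, Optional


def time_to_target(
    train_one_epoch: Callable[[], None],
    evaluate: Callable[[], float],
    target_quality: float,
    max_epochs: int = 100,
) -> Optional[dict]:
    """Train until evaluate() reaches target_quality and time the run.

    Hypothetical helper: returns the epoch count, wall-clock seconds and
    final quality, or None if the target is not reached in max_epochs.
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()          # one pass over the training data
        quality = evaluate()       # e.g. accuracy on a held-out set
        if quality >= target_quality:
            elapsed = time.perf_counter() - start
            return {"epochs": epoch, "seconds": elapsed, "quality": quality}
    return None


if __name__ == "__main__":
    # Toy stand-in for a real model: "quality" rises by 0.125 per epoch.
    state = {"quality": 0.0}

    def toy_train() -> None:
        state["quality"] += 0.125

    def toy_eval() -> float:
        return state["quality"]

    print(time_to_target(toy_train, toy_eval, target_quality=0.75))
```

In a real setup the two callables would wrap a framework's training and evaluation steps, and the harness would run inside the container so that every system is timed under the same conditions.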

The hardware used for initial testing consisted of 16 CPUs, an NVIDIA P100 GPU and 600 GB of disk space, running Ubuntu with CPython (version 2 or later) for the reference implementations. Benchmark performance on this reference hardware was found to be slightly sluggish. However, the developers suggest that it will improve over time with faster, optimised hardware.

Why Is Benchmarking Important For Machine Learning?

Tech companies invest heavily in hardware and pour funds into research projects, so it is essential that these investments do not go sour. Benchmarking is how the viability of hardware is ascertained. With tech companies competitively focussing on ML, it is time they adopted a standard benchmarking method for assessing ML performance too. This way ML can be improved over time and prove beneficial in the long run.

Conclusion

MLPerf is just the beginning of a new benchmarking era in ML and AI. It aims to spark more ML research and insights along the way. Consequently, it should lead to a larger, better ML community exploring and experimenting with diverse areas of ML. At the same time, MLPerf should make sure that the software suite becomes more affordable and user-friendly.