Machine learning (ML) refers to algorithms or models that learn patterns in data and then use those patterns to make predictions about new data. For example, to classify children’s books, developers do not have to set up precise rules for what constitutes a children’s book. Instead, they can feed the computer hundreds of examples of children’s books. The computer finds the patterns in these books and uses those patterns to identify future books in that category.
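As a rough illustration of learning from examples instead of hand-written rules, the sketch below counts which words appear under each label and assigns new text to the label whose vocabulary it overlaps most. The tiny dataset, the labels, and the function names `train` and `classify` are all hypothetical; a real system would use far more data and a proper model.

```python
from collections import Counter

# Hypothetical labelled examples: (description, label).
EXAMPLES = [
    ("a friendly bunny learns to share toys", "children"),
    ("the little bear counts colorful balloons", "children"),
    ("a puppy finds a new friend at school", "children"),
    ("quarterly tax law and corporate accounting", "other"),
    ("a detective investigates a brutal murder", "other"),
    ("advanced thermodynamics for engineers", "other"),
]


def train(examples):
    """Count how often each word appears under each label."""
    counts = {}
    for text, label in examples:
        counts.setdefault(label, Counter()).update(text.split())
    return counts


def classify(counts, text):
    """Pick the label whose training vocabulary overlaps the text most."""
    words = text.split()
    scores = {
        label: sum(word_counts[w] for w in words)
        for label, word_counts in counts.items()
    }
    return max(scores, key=scores.get)


model = train(EXAMPLES)
print(classify(model, "a little bunny finds colorful toys"))      # children
print(classify(model, "corporate tax accounting for engineers"))  # other
```

No rule such as "mentions a bunny" was ever written by hand; the behaviour comes entirely from the examples, so changing the examples changes what the classifier does.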

Essentially, ML is a subset of artificial intelligence that enables computers to learn without being explicitly programmed with predefined rules. It focuses on the development of computer programs that can teach themselves to grow and change when exposed to new data. This predictive ability, in addition to the computer’s ability to process massive amounts of data, enables ML to handle complex business situations with efficiency and accuracy.

Traditionally, applications are programmed to make particular decisions based on predefined rules. These rules are drawn from human experience of frequently occurring scenarios. However, as the number of scenarios grows, defining rules that accurately address all of them demands massive investment, and either efficiency or accuracy is sacrificed.

How Does Machine Learning Differ From Traditional Algorithms?

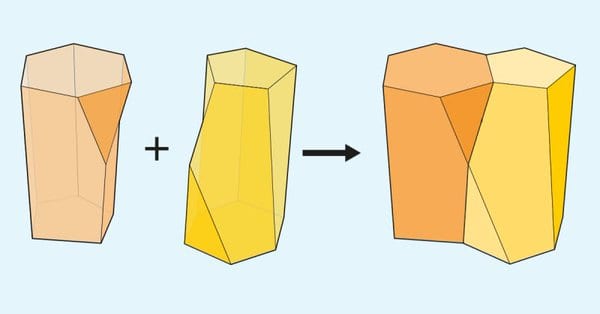

A traditional algorithm takes some input and some logic, in the form of code, and produces the output. A machine learning algorithm, by contrast, takes inputs and their corresponding outputs and produces the logic, which can then be applied to new input to produce an output. This generated logic is what makes it ML.
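The contrast can be sketched with a deliberately simple case. In the first function the developer supplies the logic. In the second part, only input/output pairs are supplied and the logic (a slope and an intercept) is estimated with ordinary least squares. The temperature data and all function names are illustrative assumptions, not part of any particular library.

```python
def fahrenheit_by_rule(celsius):
    """Traditional approach: a developer writes the logic directly."""
    return celsius * 9 / 5 + 32


def fit_line(xs, ys):
    """Learn slope and intercept from input/output pairs (least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical observations: inputs paired with known outputs.
celsius = [-10.0, 0.0, 10.0, 25.0, 40.0]
fahrenheit = [14.0, 32.0, 50.0, 77.0, 104.0]

# ML approach: input + output go in, logic comes out.
slope, intercept = fit_line(celsius, fahrenheit)


def fahrenheit_learned(c):
    """The learned logic, applied to a new input."""
    return slope * c + intercept


print(round(slope, 3), round(intercept, 3))
print(round(fahrenheit_learned(100.0), 3), fahrenheit_by_rule(100.0))
```

With clean data like this the learned line should closely match the hand-written formula. With noisy real-world data it would only approximate the underlying relationship.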

ML Vs Classical Algorithms

- ML algorithms do not depend on rules defined by human experts. Instead, they process data in raw form — for example text, emails, documents, social media content, images, voice and video.

- An ML system is truly a learning system if it is not programmed to perform a task, but is programmed to learn to perform the task.

- ML is also more prediction-oriented, whereas statistical modeling is generally interpretation-oriented. This is not a hard and fast distinction, especially as the disciplines converge, but in my experience most historical differences between the two schools of thought follow from it.

- In classical approaches, statisticians place more emphasis on p-values and on a solid but comprehensible model.

- Many ML models are difficult to interpret, and for this reason they are usually unsuitable when the purpose is to understand relationships or even causality. They mostly work well where one only needs predictions.

- Traditional learning methodologies, such as training a model on historical data and evaluating the resulting model against incoming data, are not feasible when the environment is constantly changing.

- Compared to the classical approach, traditional ML approaches are in most cases too expensive in web-scale environments, and their results are too static to cope with dynamically changing service environments.

- As opposed to the classical approach, spending a lot of computational power on learning a very complex model of a highly dynamic network environment is not cost-effective.

- Gradually, “statistical modelling” will move towards “statistical learning”, adopting the good parts of ML and creating tools for better interpreting the models in the process, as Pekka Kohonen, assistant professor at the Karolinska Institutet, pointed out.

- One of the key differences is that classical approaches are more mathematically rigorous, while machine learning algorithms are more data-intensive.
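The point in the list above about constantly changing environments can be made concrete with a small sketch. It compares an estimate fitted once on historical data with one that is updated incrementally as new observations arrive. The latency numbers, the update rate of 0.3, and the function names are assumptions chosen for illustration only.

```python
def batch_mean(history):
    """Fit once on historical data; the estimate never changes afterwards."""
    return sum(history) / len(history)


def online_update(estimate, observation, rate=0.3):
    """Move the estimate a fraction of the way toward each new observation."""
    return estimate + rate * (observation - estimate)


# Hypothetical request latencies (ms): the environment shifts after deployment.
history = [100.0, 102.0, 98.0, 101.0, 99.0]
incoming = [150.0] * 20

static_estimate = batch_mean(history)  # stays at the historical average
online_estimate = static_estimate
for value in incoming:
    online_estimate = online_update(online_estimate, value)

print(static_estimate)
print(round(online_estimate, 2))
```

The static estimate stays at the historical average while the incrementally updated one converges toward the new level. The trade-off is that a higher update rate adapts faster but also reacts more strongly to noise.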

In the last two decades, algorithmic modeling applications have grown significantly, and much of that growth has happened outside the traditional statistics community. Young computer scientists are relying on machine learning, which is producing more reliable information. Unlike in traditional methods, however, predictive accuracy and simplicity are in conflict.