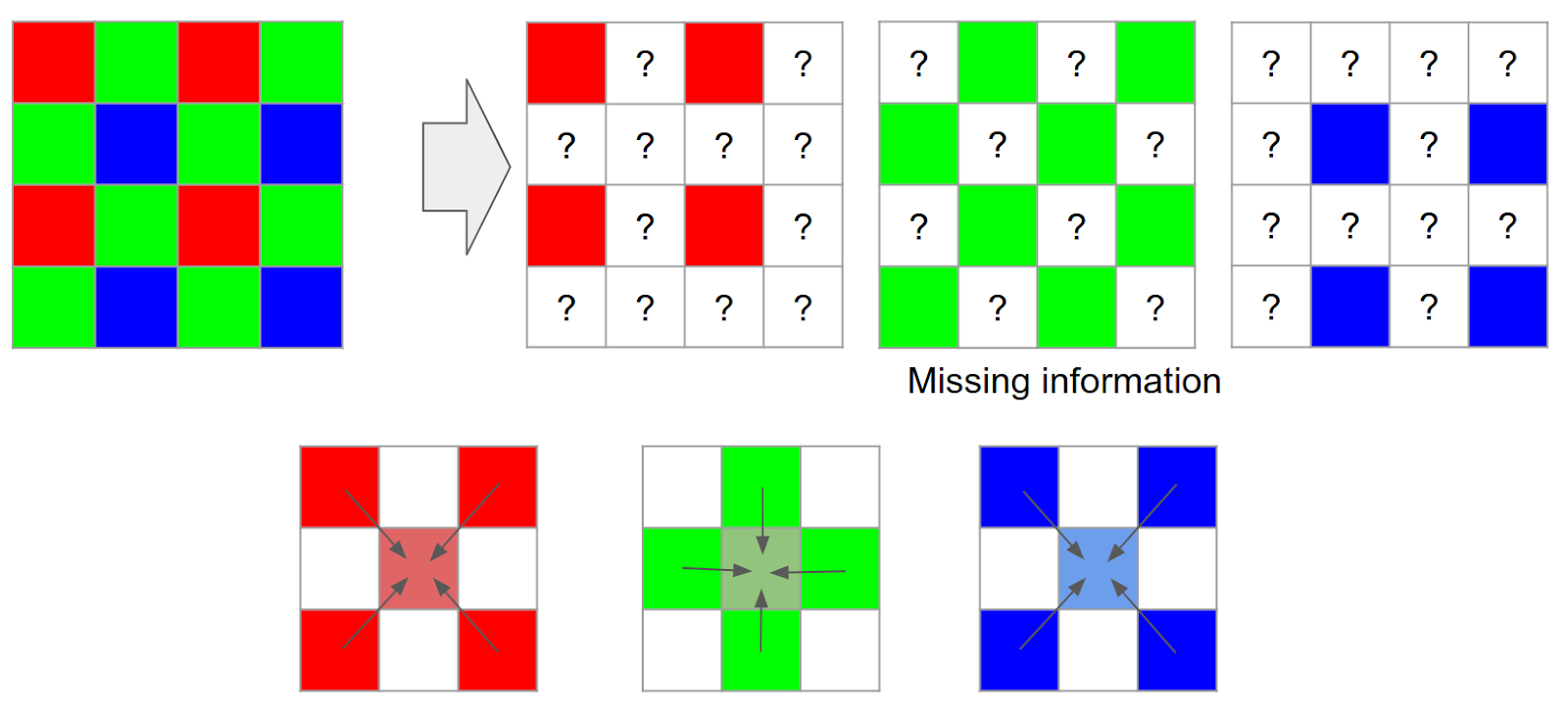

While you are busy zooming in and out to look at a picture more carefully on your smartphone, digital zooming tries to maintain the resolution by running algorithms in the background to fill in the missing pieces (pixels) of the image. Most of these image-up scaling techniques deploy machine learning where models learn mapping (selecting filters) by training on corresponding low and high-resolution images. In the learning stage, these low and high-resolution pairs of image patches are nothing but fabrications of the original images. When you zoom in, say 2x times, then, the size of a typical high-resolution patch is 6×6 and that of the synthetically down scaled low-resolution patch is 3×3.

Why Wasn’t Super Resolution Popular Until Now

- Photographers spend most of their time manipulating settings; for example, adjusting ISO sensitivity on their DSLR for a cleaner image. But, a typical phone user wants to have high-quality photos with a single touch. We don’t want to get just a higher resolution noisy image but to develop algorithms that produce less noisy results.

- Localized alignment issues demand the algorithm to be smart enough to perfectly estimate the motion due to unsteady hand movement, moving people and other factors as discussed above.

- The complexity involved in interpolating randomly distributed data; random because the movements are random. So, the data is both dense and scanty and, this irregular spread of data on the grid makes super-resolution more elusive.

Usually, up scaling involves methods like nearest-neighbours, bi-linear or bi-cubic interpolation. These up scaling filters with linear interpolation methods have their limitations in reconstructing images involving complexity and end up with over-smoothening of the edges.

Google has been doing the same with its RAISR (Rapid and Accurate Image Super-Resolution) for its image sharpening and contrast enhancement in their flagship model, Pixel.

Before RAISR, there were models like Anchored Neighborhood Regression (ANR) which computed the compact representation of each patch over the learned dictionaries with a set of pre-computed projection matrices (filters). Then there was SRCNN which built upon deep Convolutional Neural Network (CNN) and learnt mapping through hidden convolutional layers.

RAISR imbibes a learning-based framework which surpasses restoration capabilities of both SRCNN and ANR.

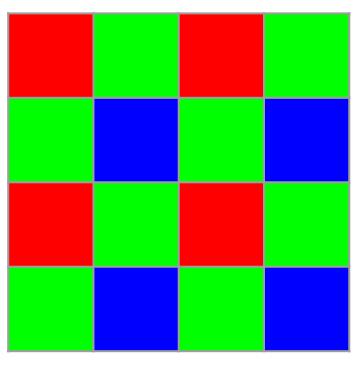

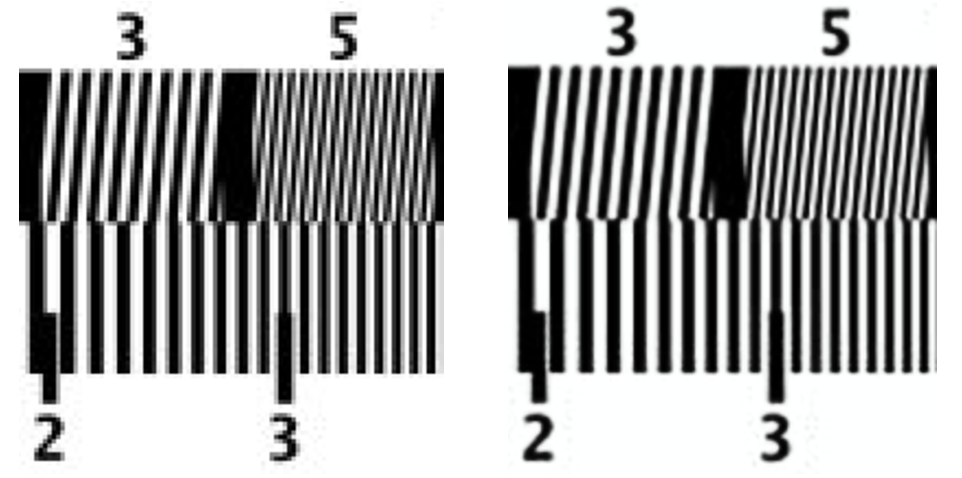

The idea behind RAISR is to enhance the quality of, say, bi-linear interpolation method by applying a set of pre-learned filters on the image patches, chosen by an efficient hashing mechanism. Here, the hashing is done by estimating the local gradients’ statistics. You can see that every 2×2 grid in the picture below contains two green blocks. This pattern considers the human’s optical sensitivity to the colour green.

The camera sensor elements measure the intensity of the incoming light and not the colour itself. As shown in the picture above, an RGB filter (Bayer pattern) array is placed in front of this sensor so that each pixel will be filtered to record one of the three colours. These raw Bayer pattern images are then run through demosaicing algorithms to interpolate RGB values for each pixel. These algorithms utilize intensity values of the surrounding pixels to estimate the value of the desired pixel.

Demosaicing is actually estimating pixel values using nearby values different methods like weighted averages amongst others. Since two-thirds of a zoomed-in image is actually reconstruction, usually we end up with partial information. This loss of information is prevalent even in DSLR cameras but it gets worse when you zoom an image on your phone screens.

How Does Google Address This Issue

With Burst photography, the algorithm tries to compensate for the loss of information in a single frame by taking multiple images. Using multiple frames to improve quality has been in use since Google’s attempt to capture images in a dimly-lit environment with their HDR+ algorithm.

Studies on super-resolution imagery with multi-frames for astronomical applications are more than a decade old in which the researchers elaborate on how multi-positional imaging improves the resolution of images on par with optical zooming.

“Over the years, the practical usage of this ‘super-res’ approach to higher resolution imaging remained confined largely to the laboratory. In astronomical imaging, a stationary telescope sees a predictably moving sky. But in widely used imaging devices like the modern-day smartphone, the practical usage of super-res for zoom in applications like mobile device cameras has remained mostly out of reach,” wrote researchers at Google in their blog.

The algorithm used in Google’s Pixel, generates the RGB value for every pixel by integrating the frames by using edge detection techniques and then adjusting the way pixels merge in alignment with the edges and not across it. The net effect is an efficient trade off between high resolution and noise suppression.

Using “ reference image” method, eliminates the ghosting and motion blurs in constantly moving bodies like an automobile or leaves of a tree.

With Pixel, Google manages to bring technology restricted to astronomy labs into the palms of a common man by innovating on standard machine learning methods.

What makes Pixel stand out is its ability to achieve all this with a single rear camera while its contemporaries make a trade-off with design and resolution to accommodate dual lens. This was made possible with the architecture that allows the lens to take two pictures at the same time, one from the left and one through the right, achieving depth perception similar to human optics.

AI-Assisted Photography

While digital zooming deploys state-of-the-art algorithms to paint the picture with some meaning, federated learning pushes the boundary further by sharing the information across the devices with sophisticated anonymity. Decentralizing the learning process makes the machine learning algorithms more robust and quicker. For example, while you capture Eiffel tower in low resolution, the models skims through the network for previously captured high-resolution images of the Eiffel tower and uses it to fill in for the missing information in the low-resolution picture. So every device personalizes a localized model by learning from interactions and patterns of its users and updates the whole decentralized network anonymously.

In addition to restoring resolution, these models allow the user to capture images at a lower resolution and save them by utilizing minimum space. And, super-resolve on demand without any degradation in quality.