The latest developments in artificial intelligence, especially in applications of Generative Adversarial Networks (GANs), are pushing research further into territory once considered exclusive to human intelligence. With new papers released every week, GANs are often cited as a promising step towards Artificial General Intelligence (AGI). Machine learning is also proving to be a valuable addition to the financial modelling toolkit and, with advances like GANs, the algorithmic trading sector is poised to benefit.

GANs have advanced to a point where they can pick up subtle expressions that signal significant human emotions. These duelling networks have something to offer the financial sector as well. Incentives and pitfalls are integral to any trading event, and the winner is the one who cuts losses. So, what do GANs have to offer?

Generator And Discriminator

Before talking about GANs, it is essential to understand their Generator and Discriminator networks and how the two go toe to toe like arch-nemeses, ultimately benefiting the overall model.

The Generator (G) is responsible for producing a rich, high-dimensional vector that attempts to replicate a given data generation process; the Discriminator (D) tries to separate the inputs created by the Generator from those of the real, observed data generation process. The two are trained jointly: G benefits from D's inability to tell true data from generated data, whilst D's loss is minimized when it correctly classifies inputs coming from G as fake and inputs coming from the dataset as true.
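The joint training described above is usually written as a minimax game. The article does not spell out the objective, so the form below is the standard GAN formulation from the wider literature, with $p_{\text{data}}$ (the data distribution) and $p_z$ (the noise distribution) as assumed notation:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

D pushes the value up by assigning high probability to real samples and low probability to generated ones, while G pushes it down by producing samples that D scores as real.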

Fine-Tuning Trading Strategies With cGAN

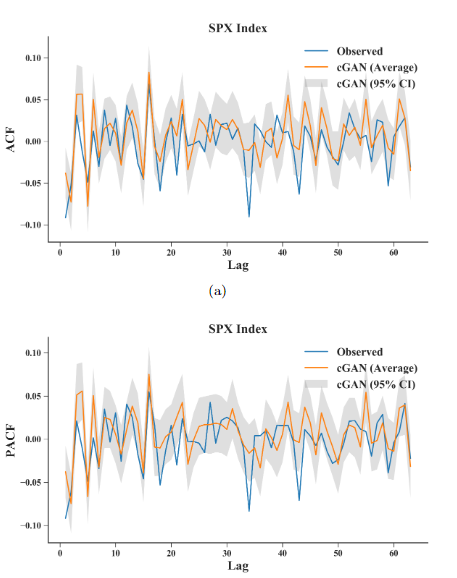

In this paper, the researchers show how the generator is trained and selected, and how this is less costly than the backtesting or ensemble modelling process. First, a conditional GAN is trained; then a generator G is used to draw samples of the time series.

Conditional GANs (cGANs) are an extension of the traditional GAN in which the decisions of both G and D are based not only on noise or generated inputs but also on an additional information set.
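To make the conditioning concrete, here is a minimal sketch of how the inputs to G and D might be assembled. It assumes, purely for illustration, that the additional information set is a window of past values of the series; the helper names (`make_windows`, `generator_input`, `discriminator_input`) and the window sizes are hypothetical, and no network is actually trained here.

```python
import random


def make_windows(series, cond_len, target_len):
    """Split a series into (condition, target) pairs of consecutive windows."""
    pairs = []
    last_start = len(series) - cond_len - target_len
    for start in range(last_start + 1):
        cond = series[start:start + cond_len]
        target = series[start + cond_len:start + cond_len + target_len]
        pairs.append((cond, target))
    return pairs


def generator_input(condition, noise_dim, rng):
    """G receives Gaussian noise z concatenated with the conditioning vector y."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return noise + list(condition)


def discriminator_input(candidate, condition):
    """D sees a candidate window (real or generated) together with the same y."""
    return list(candidate) + list(condition)


rng = random.Random(0)
returns = [0.01, -0.02, 0.005, 0.013, -0.007, 0.002, 0.009, -0.004]  # toy data
pairs = make_windows(returns, cond_len=3, target_len=2)
cond, target = pairs[0]
g_in = generator_input(cond, noise_dim=4, rng=rng)
d_in = discriminator_input(target, cond)
print(len(pairs), len(g_in), len(d_in))  # prints: 4 7 5
```

The point of the sketch is only that both networks see the same conditioning vector, so G learns to generate values that are plausible given that context rather than plausible in general.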

This additional information set contains labels such as a forecast of market value and other similar features.

The dataset used for this experiment was obtained from Bloomberg and contains 579 asset tickers over a period of 18 years, from 03/01/2000 to 02/03/2018, with an average length of 4,565 data points.

Input features and targets were scaled using a z-score function to ease the training process. The right cGAN was selected by taking snapshots every 200 iterations (snap = 200), then drawing and evaluating 50 samples per generator over 20,000 epochs.
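The scaling and snapshot schedule can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' code: the function names are hypothetical, the population standard deviation is an arbitrary choice (the article does not say which variant was used), and the snapshot count assumes the 20,000 epochs and the 200-iteration interval are counted in the same unit.

```python
import statistics


def zscore_fit(values):
    """Return the mean and (population) standard deviation of the values."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError("cannot z-score a constant series")
    return mean, std


def zscore_apply(values, mean, std):
    """Scale values to zero mean and unit variance using fitted statistics."""
    return [(v - mean) / std for v in values]


def snapshot_iterations(total_iters, snap):
    """Iterations at which a generator snapshot would be saved."""
    return list(range(snap, total_iters + 1, snap))


train = [1.0, 2.0, 3.0, 4.0, 5.0]  # toy feature column
mean, std = zscore_fit(train)
scaled = zscore_apply(train, mean, std)
snapshots = snapshot_iterations(total_iters=20_000, snap=200)
print(len(snapshots), snapshots[0], snapshots[-1])  # prints: 100 200 20000
```

Under those assumptions there would be 100 candidate generators, and 50 samples drawn from each gives 5,000 samples to evaluate when picking the cGAN.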

Stochastic Gradient Descent, with a learning rate of 0.01 and a batch size of 252, was used as the optimization algorithm, a choice made after checking how different architecture sizes performed across the benchmarks.

For every sample, a single split (one-split) was performed to assess a set of hyperparameters. Ensemble techniques such as Random Forest and Gradient Boosting Trees, which make use of Bagging, Boosting or Stacking, combine base learners that are individually "weak" (e.g., Classification and Regression Trees) but that, when aggregated, can outcompete "strong" learners (e.g., Support Vector Machines).

Samples are drawn repeatedly from the cGAN, and each sample is used to train a base learner. These base models are then returned as an ensemble.

The ensemble Mean Squared Error tends to be minimized, particularly when low-bias, high-variance base learners such as deep Decision Trees are used. (You can check out the full algorithm here.)
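The ensemble step can be illustrated with a small, self-contained sketch. It is not the paper's algorithm: `draw_sample` is a hypothetical stand-in for a trained cGAN generator (it simply jitters and resamples the training data), and a 1-nearest-neighbour regressor stands in for a deep decision tree as the low-bias, high-variance base learner.

```python
import random
import statistics


def draw_sample(data, rng, noise=0.05):
    """Hypothetical stand-in for a cGAN generator: a jittered resample."""
    return [(x + rng.gauss(0, noise), y + rng.gauss(0, noise))
            for x, y in rng.choices(data, k=len(data))]


def fit_1nn(sample):
    """Low-bias, high-variance base learner (stand-in for a deep tree)."""
    def predict(x):
        return min(sample, key=lambda point: abs(point[0] - x))[1]
    return predict


def mse(predict, data):
    """Mean squared error of a predictor over (x, y) pairs."""
    return statistics.fmean((predict(x) - y) ** 2 for x, y in data)


def fit_ensemble(data, n_learners, rng):
    """Train one base learner per drawn sample and average their predictions."""
    learners = [fit_1nn(draw_sample(data, rng)) for _ in range(n_learners)]

    def predict(x):
        return statistics.fmean(f(x) for f in learners)
    return learners, predict


def truth(x):
    return 0.5 * x  # toy data-generating function


rng = random.Random(42)
train = [(i / 10, truth(i / 10) + rng.gauss(0, 0.1)) for i in range(50)]
test = [(i / 10 + 0.05, truth(i / 10 + 0.05) + rng.gauss(0, 0.1))
        for i in range(50)]

learners, ensemble = fit_ensemble(train, n_learners=25, rng=rng)
avg_single = statistics.fmean(mse(f, test) for f in learners)
ensemble_mse = mse(ensemble, test)
print(f"mean single-learner MSE: {avg_single:.4f}")
print(f"ensemble MSE:            {ensemble_mse:.4f}")
```

Because squared error is convex, the MSE of the averaged prediction can never exceed the average MSE of the individual learners, and the gap grows with how much the learners disagree. That is why high-variance base learners tend to benefit most from this kind of aggregation.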

Key Takeaways

- Creating proper validation sets and handling of time series through learning an unconditional model, similar to image and text creation.

- cGANs can be used for model tuning, bearing better results in cases where traditional schemes fail.

- Modelling of multiple financial time series.

- cGANs can be used to generate resamples of critical events for stress tests.

- Combining resamples from cGAN and Stationary Bootstrap can yield better results.
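For readers unfamiliar with the Stationary Bootstrap mentioned in the last takeaway, here is a minimal sketch of the resampling scheme as it is commonly described (Politis and Romano): blocks of random, geometrically distributed length are copied from the series, wrapping around at the end. The function name and parameters are illustrative, and the article does not describe how these resamples were combined with cGAN samples, so that step is not shown.

```python
import random


def stationary_bootstrap(series, mean_block_len, rng):
    """Resample a series in blocks whose lengths are geometric on average."""
    if mean_block_len < 1:
        raise ValueError("mean_block_len must be at least 1")
    n = len(series)
    p = 1.0 / mean_block_len      # probability of starting a new block
    idx = rng.randrange(n)        # random starting position
    resample = []
    for _ in range(n):
        resample.append(series[idx])
        if rng.random() < p:
            idx = rng.randrange(n)    # jump: start a new block
        else:
            idx = (idx + 1) % n       # continue the block, wrapping around
    return resample


series = list(range(20))  # toy series; values double as time indices
resample = stationary_bootstrap(series, mean_block_len=5,
                                rng=random.Random(7))
print(resample)
```

Unlike a plain bootstrap, which shuffles observations independently, this keeps runs of consecutive observations together, preserving some of the serial dependence found in financial time series.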

cGANs appear to do well when pitted against other validation strategies. Whether GANs see large-scale deployment will depend on whether these results can be replicated in real-world scenarios.