Hardware is at the core of AI applications. Apart from raw computing power, a fast and efficient way of relaying information between network layers is equally important. This is where researchers are now exploring optical techniques. In fact, such optical systems can be integrated with hardware elements such as GPUs.

This article discusses a research study by scholars from the University of California, Los Angeles (UCLA), in which the team designed neural networks for two tasks: recognising handwritten digits and acting as an imaging lens.

An Optical Approach

The first recorded scientific study to use optical components for neural networks dates to 2004. It focussed on using a tool called matrix gratings for recognition with a Hopfield network. Later studies looked at physical implementations, which were conceived as programmable array logic devices or even as analogue computers.

However, this line of research stalled for a while, until one novel study explored deep learning with optical systems. In the research paper titled All-Optical Machine Learning Using Diffractive Deep Neural Networks, Xing Lin and team from UCLA construct and implement a physical neural network based on optical phenomena such as diffraction and interference. In this ‘diffractive deep neural network (D2NN)’, each neuron in a layer is optically connected by light waves to neurons in the subsequent layer.

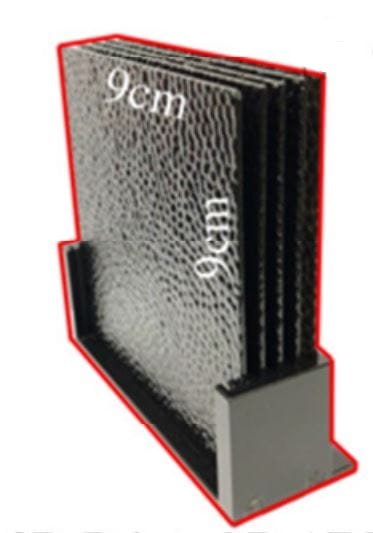

These waves are modulated in amplitude and phase by the interference pattern created by the previous layers as well as by the local transmission/reflection coefficient, which forms the ‘bias’ component of this network and influences the overall learning rates. The D2NN is physically constructed through 3D printing and lithography. When tested, this framework carries out its learning tasks efficiently, at the speed of light.
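As a rough illustration of this modulation, the sketch below models a single neuron as a complex transmission coefficient applied to the sum of the waves arriving from the previous layer. The function name `neuron_output` and the sample values are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
import cmath

def neuron_output(incoming_waves, amplitude, phase):
    """Field leaving one neuron: summed incoming field times its transmission coefficient."""
    # Interference: the complex fields from the previous layer add up at the neuron.
    total_field = sum(incoming_waves)
    # Transmission coefficient t = a * exp(j * phi) multiplies the summed field.
    t = amplitude * cmath.exp(1j * phase)
    return t * total_field

# Two equal waves arriving in phase, and a neuron that delays the phase by pi/2.
waves = [0.5 + 0j, 0.5 + 0j]
out = neuron_output(waves, amplitude=1.0, phase=cmath.pi / 2)
print(abs(out), cmath.phase(out))
```

Because the coefficient multiplies the field rather than being added to it, it acts as a multiplicative term on each neuron's output.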

Network Architecture And Implementations

Since the D2NN works by diffraction, the network architecture consists of transmissive layers placed between the input and output planes. Every point on a transmissive layer is a neuron with a transmission coefficient. It is these coefficients that are trained so that the network performs its task at the speed of light.
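The layered architecture can be sketched as a forward pass in which a field diffracts from plane to plane and is multiplied by each layer's transmission coefficients. The following is a minimal sketch, assuming each neuron re-emits a secondary wave weighted by the standard Rayleigh-Sommerfeld diffraction form and that layers only change phase. The grid size, wavelength, neuron pitch, and layer gap are illustrative assumptions, and all function names are hypothetical.

```python
import cmath
import math

WAVELENGTH = 0.75e-3  # metres; assumed value for illustration
PITCH = 0.4e-3        # neuron spacing in metres (assumed)
GAP = 30e-3           # distance between planes in metres (assumed)
N = 4                 # neurons per side of each layer (tiny, for illustration)

def secondary_wave(dx, dy, dz):
    """Rayleigh-Sommerfeld-style weight of a wave seen at offset (dx, dy, dz)."""
    r = math.sqrt(dx * dx + dy * dy + dz * dz)
    return ((dz / r ** 2)
            * (1 / (2 * math.pi * r) + 1 / (1j * WAVELENGTH))
            * cmath.exp(2j * math.pi * r / WAVELENGTH))

def propagate(field):
    """Diffract an N x N complex field across one gap to the next plane."""
    out = [[0j] * N for _ in range(N)]
    for yo in range(N):
        for xo in range(N):
            for yi in range(N):
                for xi in range(N):
                    w = secondary_wave((xo - xi) * PITCH, (yo - yi) * PITCH, GAP)
                    out[yo][xo] += field[yi][xi] * w
    return out

def forward(field, layers):
    """Pass a field through each transmissive layer, then on to the output plane."""
    for phases in layers:
        field = propagate(field)
        # Each neuron multiplies the arriving field by exp(j * phase).
        field = [[field[y][x] * cmath.exp(1j * phases[y][x]) for x in range(N)]
                 for y in range(N)]
    return propagate(field)

def intensity(field):
    """What a detector measures: squared magnitude (arbitrary units)."""
    return [[abs(v) ** 2 for v in row] for row in field]

source = [[0j] * N for _ in range(N)]
source[1][1] = 1 + 0j  # a single lit input pixel
layers = [[[0.0] * N for _ in range(N)] for _ in range(2)]  # two untrained layers
image = intensity(forward(source, layers))
```

The direct summation costs on the order of N^4 operations per gap, which is fine for a toy grid; a realistic simulation would need a faster propagation method.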

An important assumption here is that when the secondary light waves pass through this architecture and undergo diffraction, the amplitude of each neuron's transmission coefficient is constant, i.e., only the phase term is trained. The phase values are learned through an error back-propagation algorithm based on stochastic gradient descent (SGD). A detailed account can be found here.

Once the setup is ready and a physical model is obtained through a 3D printer, the D2NN is trained and tested separately as a classifier and as an imaging lens.
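The phase-only training idea can be illustrated with a toy example. The sketch below is not the authors' implementation: it uses a one-dimensional layer of eight neurons, a simplified spherical-wave kernel, two hypothetical detector points, and a central-difference gradient as a stand-in for back-propagation. All constants and names are assumptions made for illustration.

```python
import cmath
import math
import random

WAVELENGTH = 0.75e-3  # metres (assumed)
PITCH = 0.4e-3        # neuron spacing in metres (assumed)
GAP = 3e-3            # layer-to-detector distance in metres (assumed)
M = 8                 # neurons in the toy 1-D layer
DETECTORS = [1.5 * PITCH, 5.5 * PITCH]  # hypothetical detector positions

def kernel(dx):
    """Simplified spherical-wave weight between points separated laterally by dx."""
    r = math.hypot(dx, GAP)
    return cmath.exp(2j * math.pi * r / WAVELENGTH) / r

def detector_energies(inputs, phases):
    """Energy at each detector after a phase-only layer (amplitude fixed at 1)."""
    energies = []
    for p in DETECTORS:
        field = sum(inputs[i] * cmath.exp(1j * phases[i]) * kernel(p - i * PITCH)
                    for i in range(M))
        energies.append(abs(field) ** 2)
    return energies

def loss(inputs, label, phases):
    """Fraction of detected energy that misses the detector assigned to `label`."""
    e = detector_energies(inputs, phases)
    return 1.0 - e[label] / (sum(e) + 1e-30)

def numerical_grad(inputs, label, phases, eps=1e-6):
    """Central-difference gradient; a stand-in for back-propagation."""
    grad = []
    for i in range(M):
        up = phases[:]
        up[i] += eps
        down = phases[:]
        down[i] -= eps
        grad.append((loss(inputs, label, up) - loss(inputs, label, down)) / (2 * eps))
    return grad

def train(samples, steps=200, lr=0.5, seed=0):
    """SGD: one randomly chosen sample per step; only the phases are updated."""
    rng = random.Random(seed)
    phases = [0.0] * M
    for _ in range(steps):
        inputs, label = rng.choice(samples)
        g = numerical_grad(inputs, label, phases)
        phases = [p - lr * gi for p, gi in zip(phases, g)]
    return phases

# Two toy input patterns: left half lit (class 0) and right half lit (class 1).
samples = [([1, 1, 1, 1, 0, 0, 0, 0], 0), ([0, 0, 0, 0, 1, 1, 1, 1], 1)]
trained = train(samples)
for inputs, label in samples:
    print(label, round(loss(inputs, label, trained), 3))
```

Finite differences are used here only to keep the sketch short and dependency-free; a real design with many neurons would rely on automatic differentiation.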

Handwritten Digit Classification

In this implementation, the neural network acts as a classifier for handwritten digits. The five-layer, 3D-printed D2NN is trained on approximately 55,000 images from the MNIST handwritten digit database. After training, the network is tested on 10,000 random images from the same MNIST dataset that were not used in training.

An accuracy of 91.75 percent was achieved in this model. In addition, the network architecture focused the energy from the secondary waves efficiently into the detector region.
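One way to picture the readout is sketched below, assuming the output plane holds one detector region per class and the prediction is the region that collects the most energy. The function `classify`, the region layout, and the small intensity map are hypothetical; only two regions are used to keep the example short.

```python
def classify(intensity, regions):
    """Return (index of the region with the most energy, list of region energies)."""
    energies = []
    for top, left, height, width in regions:
        energy = sum(intensity[y][x]
                     for y in range(top, top + height)
                     for x in range(left, left + width))
        energies.append(energy)
    predicted = max(range(len(energies)), key=energies.__getitem__)
    return predicted, energies

# Toy 3 x 6 output-plane intensity map with most of the light on the right.
intensity = [
    [0.1, 0.1, 0.0, 0.0, 0.2, 0.1],
    [0.1, 0.2, 0.0, 0.0, 0.9, 0.8],
    [0.0, 0.0, 0.0, 0.0, 0.7, 0.9],
]
# Each region is (top, left, height, width) in pixels.
regions = [(0, 0, 2, 2), (0, 4, 3, 2)]
predicted, energies = classify(intensity, regions)
print(predicted, energies)
```

Accuracy is then the share of test images for which the predicted index matches the true label; 91.75 percent of 10,000 test images corresponds to 9,175 correct predictions.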

Imaging Lens

Another 3D-printed D2NN is made to produce a unit-magnification image of the input. The training process used approximately 1500 images from the ImageNet database. The underlying principle is that the trained network carries only the amplitude of the input through its neurons and layers, and reproduces it at the output.
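A training objective for such an imaging task could compare the output-plane intensity with the input image. The sketch below assumes a mean squared error between energy-normalised images; the article does not state which loss the authors used, so both the choice of loss and the function names are assumptions.

```python
def normalise(image):
    """Scale an image so that its pixel values sum to 1 (left as-is if all zero)."""
    total = sum(sum(row) for row in image)
    if total == 0:
        return image
    return [[v / total for v in row] for row in image]

def imaging_loss(output_intensity, input_image):
    """Mean squared error between the normalised output and input images."""
    out = normalise(output_intensity)
    ref = normalise(input_image)
    rows, cols = len(ref), len(ref[0])
    squared_error = sum((out[y][x] - ref[y][x]) ** 2
                        for y in range(rows) for x in range(cols))
    return squared_error / (rows * cols)

target = [[0, 1], [1, 0]]
sharp = [[0, 3], [3, 0]]    # same pattern, different overall brightness
blurred = [[1, 1], [1, 1]]  # light spread evenly over the output plane
print(imaging_loss(sharp, target), imaging_loss(blurred, target))
```

Normalising by total energy makes the loss insensitive to overall brightness, so a faithful but dimmer copy of the input is not penalised.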

Once trained this way, the network is tested on different objects illuminated by continuous-wave radiation. This D2NN performed very well and correctly projected unit-magnification images without significant aberrations.

Conclusion

D2NN is certainly a good start towards much faster execution of machine learning at scale. However, the researchers in this study needed a sophisticated fabrication setup (3D printing and lithography) to build the network. This is expensive and is a limitation for intelligent systems deployed on a commercial scale. Only further research will tell what role optical methods will play in deep learning.