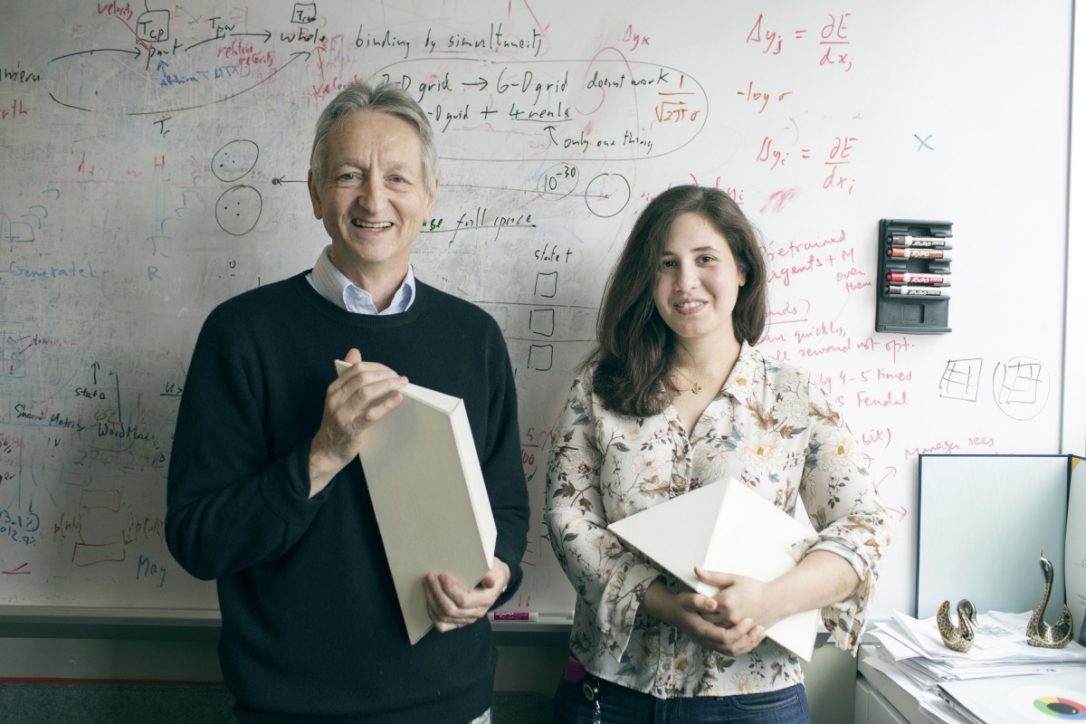

Deep learning takes a new direction with each major piece of research, and this time Geoff Hinton has proposed an architecture that he argues is more reasonable than Convolutional Neural Networks (ConvNets): the capsule network. CapsNet is a new type of neural network architecture conceptualized by Hinton to address some of the shortcomings of CNNs. While it is important and interesting research, does it truly address the shortcomings of CNNs, the workhorse of today’s deep learning? According to the paper Dynamic Routing Between Capsules: “A capsule is a group of neurons whose activity vector represents the instantiation parameters of a specific type of entity such as an object or object part.”

Are Capsules An Improvement on CNNs – The Workhorses of Deep Learning

One of the major advantages of Convolutional Neural Networks is their invariance to translation. However, this invariance comes at a price: the network does not consider how different features are spatially related to each other. For example, given a picture of a face, a CNN has difficulty capturing the relationship between the mouth and nose features. Max pooling layers are the main reason for this effect, because max pooling discards the precise locations of the mouth and nose, so we can no longer say how they are positioned relative to each other. Hinton himself has called the pooling operation a big mistake, and the fact that it works so well a disaster.
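The loss of location under max pooling can be illustrated with a minimal NumPy sketch. The helper `max_pool_2x2` and the two toy activation maps are assumptions made for illustration and are not taken from any of the cited papers.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """Non-overlapping 2x2 max pooling on a 2D array with even height and width."""
    h, w = feature_map.shape
    # Split the map into 2x2 windows and keep only the largest value per window.
    return feature_map.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Hypothetical 4x4 activation maps with two detected features each.
# In `far_apart` the two activations sit in opposite corners.
far_apart = np.zeros((4, 4))
far_apart[0, 0] = 1.0
far_apart[3, 3] = 1.0

# In `adjacent` the two activations touch diagonally in the centre.
adjacent = np.zeros((4, 4))
adjacent[1, 1] = 1.0
adjacent[2, 2] = 1.0

pooled_far = max_pool_2x2(far_apart)
pooled_adjacent = max_pool_2x2(adjacent)

# The inputs differ, but the pooled outputs are identical:
# the exact position inside each window has been thrown away.
print(np.array_equal(far_apart, adjacent))
print(np.array_equal(pooled_far, pooled_adjacent))
```

After pooling, the two arrangements are indistinguishable, which is the kind of information loss the paragraph above describes.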

A capsule, on the other hand, still registers the existence of the specific feature it is looking for, such as a mouth or a nose, so capsules detect the presence of a feature regardless of position, just as CNNs do. In addition, capsules try to solve the drawback of max pooling layers through equivariance: instead of making the feature representation translation-invariant, a capsule makes it viewpoint-equivariant, as one researcher noted. As the feature moves and changes its position in the image, its vector representation changes in a corresponding way, which is what makes it equivariant.
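The difference between an invariant and an equivariant summary can be sketched in a few lines of NumPy. This is a deliberate simplification: a real capsule outputs a learned vector, whereas the hypothetical helpers `global_max` and `peak_position` below only stand in for "is the feature there?" and "where is it?".

```python
import numpy as np

def global_max(feature_map):
    """Invariant summary: strength of the strongest response, wherever it is."""
    return float(feature_map.max())

def peak_position(feature_map):
    """Equivariant summary: (row, col) of the strongest response."""
    row, col = np.unravel_index(np.argmax(feature_map), feature_map.shape)
    return int(row), int(col)

# Hypothetical 5x5 activation map with one detected feature at (1, 1).
feature_map = np.zeros((5, 5))
feature_map[1, 1] = 0.9

# Move the feature two rows down and one column to the right.
shifted = np.roll(feature_map, shift=(2, 1), axis=(0, 1))

# The invariant summary does not change when the feature moves.
print(global_max(feature_map), global_max(shifted))

# The equivariant summary moves by exactly the same offset as the feature.
print(peak_position(feature_map), peak_position(shifted))
```

The first summary tells us only that the feature exists; the second one changes in step with the input, which is the property the capsule's vector output is meant to have.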

Hence, a major drawback of ConvNets is that they tend to fail when images are transformed. According to Max Pechyonkin, a deep learning enthusiast, CNNs do not have a built-in understanding of 3D space, but for a CapsNet the task is much easier because these relationships are explicitly modeled. The paper that uses this approach was able to reduce the error rate by 45% compared to the previous state of the art, which is a huge improvement, he noted.

Here’s Hinton’s take on CNNs and the simple mechanism from the paper on OpenReview: a vision system needs to use the same knowledge at all locations in the image, but viewpoint changes have complicated effects on pixel intensities and simple, linear effects on the pose matrix that relates an object or object part to the viewer. The aim of capsules is to make good use of this underlying linearity, both for dealing with viewpoint variation and improving segmentation decisions.
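The linearity mentioned above can be made concrete with a small NumPy sketch. It assumes 2D poses written as 3x3 homogeneous matrices; the helper `pose_2d` and all the numbers are hypothetical and chosen only for illustration.

```python
import numpy as np

def pose_2d(angle_deg, tx, ty):
    """3x3 homogeneous matrix: rotate by angle_deg, then translate by (tx, ty)."""
    a = np.deg2rad(angle_deg)
    return np.array([
        [np.cos(a), -np.sin(a), tx],
        [np.sin(a),  np.cos(a), ty],
        [0.0,        0.0,       1.0],
    ])

# Pose of a face relative to the viewer, and of the mouth relative to the face.
face_to_viewer = pose_2d(0, 5, 5)
mouth_in_face = pose_2d(0, 0, -2)        # fixed part-whole relationship
mouth_to_viewer = face_to_viewer @ mouth_in_face

# A viewpoint change is one matrix multiplication applied to every pose.
viewpoint_change = pose_2d(30, 1, -3)
new_face_to_viewer = viewpoint_change @ face_to_viewer
new_mouth_to_viewer = viewpoint_change @ mouth_to_viewer

# Both poses changed, but the mouth-in-face relationship is recovered unchanged.
recovered = np.linalg.inv(new_face_to_viewer) @ new_mouth_to_viewer
print(np.allclose(recovered, mouth_in_face))
```

The pixel values of the rotated face would change in a complicated way, while the poses change by a single matrix product and the part-whole relationship stays fixed.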

Capsule Networks vs CNN

According to Danish developer, entrepreneur and mathematician Martin Jul, one of the key differences between capsules and CNNs is that capsules are able to generalize better from limited training data.

- The first idea in CapsNet is to represent multi-dimensional entities. Capsule networks do this by grouping the properties of a feature together

- The second is that one activates higher-level features by agreement between lower-level features (routing by agreement)

- A capsule network partitions the image into regions and assumes that each region contains at most one instance of a given feature, which is represented by a capsule

- A Capsule is able to represent an instance of a feature (but only one) and is able to represent all the different properties of that feature, e.g., its (x,y) coordinates, its colour, its movement etc.

- The difference from Convolutional Neural Networks (CNNs) is that the Capsules bundle the neurons into groups with multi-dimensional properties, whereas in CNNs the neurons represent single, unrelated scalar properties.
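The ideas in the list above can be sketched in NumPy, loosely following the squashing non-linearity and the routing loop described in Dynamic Routing Between Capsules. This is a minimal sketch, not the authors' implementation: the function names, the toy prediction vectors in `u_hat` and the choice of three iterations are assumptions, and the learned weight matrices that would normally produce the predictions are omitted.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Shrink each vector's length into [0, 1) while keeping its direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)

def softmax(x, axis):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def route_by_agreement(u_hat, num_iterations=3):
    """u_hat has shape (num_lower, num_higher, dim): the output vector that
    lower capsule i predicts for higher capsule j."""
    num_lower, num_higher, _ = u_hat.shape
    b = np.zeros((num_lower, num_higher))           # routing logits
    for _ in range(num_iterations):
        c = softmax(b, axis=1)                      # each lower capsule splits its vote
        s = np.einsum("ij,ijd->jd", c, u_hat)       # weighted sum of predictions
        v = squash(s)                               # higher-level capsule outputs
        b = b + np.einsum("ijd,jd->ij", u_hat, v)   # reward predictions that agree
    return v, c

# Toy example: 3 lower capsules, 2 higher capsules, 2-dimensional outputs.
u_hat = np.array([
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
])
v, c = route_by_agreement(u_hat)

# All three predictions for higher capsule 0 agree, so it receives most of the
# routing weight and ends up with the longer output vector.
print(np.round(c, 3))
print(np.round(np.linalg.norm(v, axis=1), 3))
```

Each capsule here outputs a vector rather than a single scalar, and the higher-level capsule whose incoming predictions agree is the one that becomes strongly active.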

Are Capsules The New Building Blocks In Deep Learning

According to computer vision researcher Tobias Würfl, capsule networks address the most obvious problem where deep learning falls short. “We really need to address the issue that we don’t encode our prior knowledge about viewpoint invariance into the networks. And the other issue is to automate architecture design in a fast and reliable [way],” he stated in a post.

Hinton has been researching capsule networks for a long time and first proposed the idea in 2011. He has also questioned backpropagation, the trusted method for training neural networks that he helped popularize in the 1980s. Backpropagation has met with huge success in the machine learning community and is regarded by some as a path toward Artificial General Intelligence. It is a method for training neural networks by propagating the output error backwards through the layers and adjusting the weights to reduce that error.

Now, this new type of neural network architecture, conceptualized by the “Godfather of Deep Learning”, is intended to come closer to how the human brain processes what it sees. And Hinton is betting on this architecture to revolutionize computer vision. “Human vision ignores irrelevant details by using a carefully determined sequence of fixation points to ensure that only a tiny fraction of the optic array is ever processed at the highest resolution. In this paper we will assume that a single fixation gives us much more than just a single identified object and its properties,” the paper notes.

By and large, the idea stems from the observation that neural networks need better modeling of the spatial relationships between parts. Instead of modeling only the co-existence of features while disregarding their relative positioning, capsule nets try to model the global relative transformations of different sub-parts along a hierarchy. This is the equivariance vs. invariance trade-off, AI researchers explained.

CapsNet – A Leap Forward In Deep Learning

AI researchers have pegged this as one of the most important and interesting research directions in deep learning right now, but the best implementation of this architecture is yet to emerge. The DL community regards it as a big breakthrough because the new architecture achieved significantly better accuracy on the smallNORB data set than the state-of-the-art CNN, reducing the number of errors by 45%. The OpenReview paper also reports that it is significantly more robust to white box adversarial attacks than a baseline CNN. According to Hinton, the current implementation of capsule networks is slow, but over time it could spark a major leap forward in AI.