AI-based hearing aids are now replacing the earlier generation of Bluetooth Low Energy (BLE)-enabled hearing aids. In this article, we discuss how these hearing aids are backed by AI and look at some use cases of artificial intelligence in hearing devices.

What Has Been Done Till Now

In general, a hearing device includes a sound classification module that classifies the environmental sound sensed by a microphone. SoundSense Learn is a feature that uses machine learning and artificial intelligence techniques to apply user input to the efficient optimisation of hearing aid parameters. This approach is designed to individualise hearing aid parameters in a qualified manner: it samples a number of possible settings and adjusts the hearing aid according to the user’s preference with a greater degree of certainty.
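The idea of sampling candidate settings and keeping the ones the listener prefers can be illustrated with a small sketch. This is not the SoundSense Learn algorithm, which the sources here do not describe in detail; it is a plain hill-climbing search driven by A/B comparisons. The three-band equaliser, the gain range, the `learn_preference` function and the simulated listener are all assumptions made up for illustration.

```python
import random

# Hypothetical three-band equaliser: gains in dB for (bass, middle, treble).
BANDS = 3
GAIN_RANGE = (-12.0, 12.0)


def clamp(value, low, high):
    return max(low, min(high, value))


def propose_variant(setting, step, rng):
    """Return a nearby setting by nudging every band by up to +/- step dB."""
    return tuple(
        clamp(gain + rng.uniform(-step, step), *GAIN_RANGE) for gain in setting
    )


def learn_preference(prefers, rounds=40, step=6.0, seed=0):
    """Refine a setting through repeated A/B comparisons.

    `prefers(a, b)` stands in for the listener and returns True when
    setting `a` sounds better than setting `b`.
    """
    rng = random.Random(seed)
    best = (0.0,) * BANDS  # start from a flat response
    for _ in range(rounds):
        candidate = propose_variant(best, step, rng)
        if prefers(candidate, best):
            best = candidate  # keep the setting the listener chose
        step = max(0.5, step * 0.9)  # narrow the search as rounds go on
    return best


# Simulated listener (an assumption): the ideal setting is TARGET,
# but the search only ever sees the outcome of each comparison.
TARGET = (4.0, -2.0, 6.0)


def distance(setting):
    return sum((g - t) ** 2 for g, t in zip(setting, TARGET)) ** 0.5


def simulated_listener(a, b):
    return distance(a) < distance(b)


learned = learn_preference(simulated_listener)
print([round(gain, 1) for gain in learned])
```

Because a candidate is only accepted when the listener prefers it, the learned setting can never end up further from the listener's ideal than the flat starting point. A real system would need to cope with inconsistent answers and with far fewer comparisons than this toy loop uses.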

Dr Chris Heddon of Resonance Medical pointed out in a report that three major elements make up the AI-powered hearing aid:

- Hearing aids with energy-efficient wireless connectivity, which gives them access to external computing power

- The ability of a hearing care professional to securely program a hearing aid from a distance, which gives the user access to the highest level of hearing care at all times, even in real-world environments

- Mobile phones with sufficient computing power to run AI on device, which support the dynamically responsive intelligent hearing aids and provide the additional benefits of protecting user privacy and reducing the mobile device power consumption associated with cellular connection to a cloud-based server (which would have been needed if the AI was run in the cloud rather than on the user’s mobile device).

In another study, researchers at Aalborg University used artificial neural networks to develop an efficient algorithm that can help end-users not only to hear but also to take part in conversations in noisy environments. The new algorithm is more advanced and more robust than previous technology because it can function in unknown environments with unknown voices.

The researchers concluded that the key to the success of this algorithm is its ability to learn from data and to construct strong statistical models that can represent complex listening situations.

The algorithm was created using deep learning, and the project followed two different tracks. First, the researchers constructed an algorithm to solve the challenges of one-to-one conversations in noisy spaces by amplifying the sound of the speaker while significantly reducing noise, without any prior knowledge of the listening situation. Second, they addressed speech separation with an algorithm that separates voices while reducing noise.
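The article does not say how the Aalborg algorithm works internally. One approach commonly used in deep-learning speech enhancement is time-frequency masking, in which a network predicts a gain between 0 and 1 for every frequency bin so that speech-dominated bins pass and noise-dominated bins are attenuated. The sketch below assumes that approach and shows only the masking step on a single toy frame. The mask is computed from the known speech and noise (an "oracle" mask) rather than predicted by a network, and all names and signals are made up for illustration.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform (fine for one short frame)."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def inverse_dft(spectrum):
    """Inverse transform, keeping the real part of each sample."""
    n = len(spectrum)
    return [
        (sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
             for k in range(n)) / n).real
        for t in range(n)
    ]


def ratio_mask(speech_spec, noise_spec, eps=1e-12):
    """Per-frequency gain in [0, 1]: close to 1 where speech dominates."""
    return [
        abs(s) ** 2 / (abs(s) ** 2 + abs(v) ** 2 + eps)
        for s, v in zip(speech_spec, noise_spec)
    ]


def rms_error(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


N = 64
# Toy frame: a low-frequency "voice" tone plus a high-frequency "noise" tone.
speech = [math.sin(2 * math.pi * 3 * t / N) for t in range(N)]
noise = [0.8 * math.sin(2 * math.pi * 20 * t / N) for t in range(N)]
noisy = [s + v for s, v in zip(speech, noise)]

# Oracle mask built from the known parts; a trained network would have to
# estimate this mask from `noisy` alone.
mask = ratio_mask(dft(speech), dft(noise))
enhanced = inverse_dft([m * x for m, x in zip(mask, dft(noisy))])

error_before = rms_error(noisy, speech)
error_after = rms_error(enhanced, speech)
print(error_after < error_before)
```

In this toy case the voice and the noise occupy different frequency bins, so the mask removes the noise almost perfectly. Real speech and noise overlap in frequency and change over time, which is why the mask has to be estimated frame by frame and why learning it from data matters.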

Recent Use Cases

- Last month, Microsoft announced that Skype and PowerPoint will include real-time captions and subtitles, based on artificial intelligence techniques, by early 2019. The motive behind adding the new feature to these two products is to allow people with hearing impairment to follow the audio of Skype calls and PowerPoint presentations easily.

- Another real-life use case is Livio AI, billed as the world’s first hearing aid to provide both superior sound quality and the ability to track body and brain health, using integrated sensors and artificial intelligence techniques.

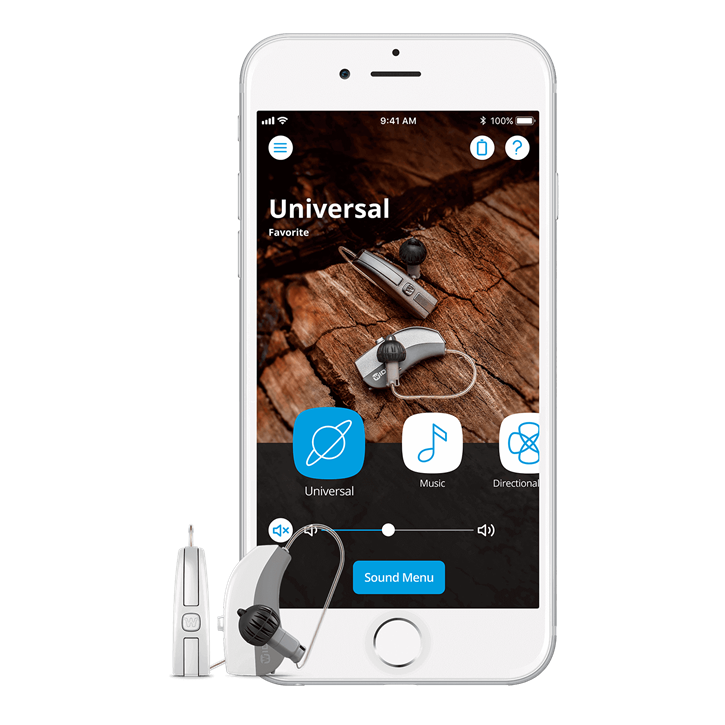

- Last year, Widex, a Danish hearing aid maker, launched Evoke, the world’s first machine learning hearing aid technology, in which the sound quality evolves in real-life use.

The device lets users employ real-time machine learning, so that the hearing aid learns from the user’s input and preferences. Evoke’s smartphone AI app, SoundSense Learn, is designed to help users adjust the sound of the hearing aid to suit the moment. According to Widex, the app gathers a variety of anonymous data, such as the most frequently used sound presets and how many times the volume is adjusted.
