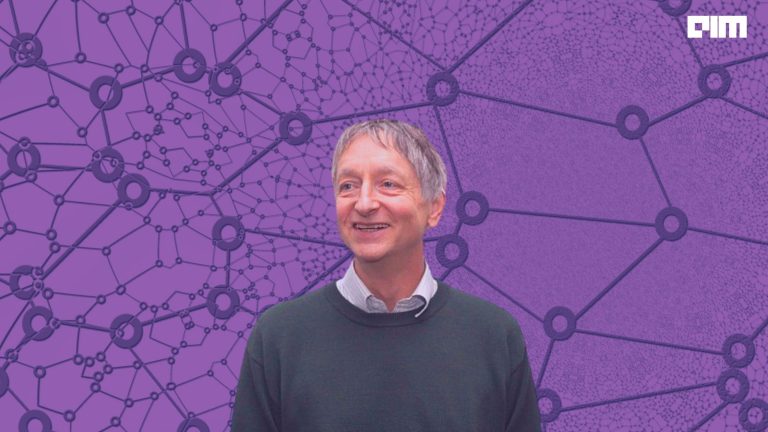

It has been the go-to method behind most of the advances in AI today. But now back-propagation, one of the most powerful artificial neural network techniques, is being panned by AI researchers for having outlived its utility. The rallying cry has in fact been taken up by the University of Toronto’s Geoff Hinton, often called the father of Deep Learning, who himself pioneered and championed the breakthrough method.

Does Back-propagation truly capture the way the brain works?

However, there’s more to it. Even though back-propagation underlies most of the advances we are seeing in the AI field today, there is no real evidence that the brain performs it. For instance, a research paper from the vaunted Montreal Institute for Learning Algorithms (MILA) on the biological plausibility of back-propagation points out that the back-propagation computation (coming down from the output layer to the lower hidden layers) is purely linear, whereas biological neurons interleave linear and non-linear operations. Hinton’s doubts stem from skepticism about whether back-propagation will ever achieve the original goal of neural networks: building truly autonomous learning machines.
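To see what “purely linear” means here, consider the standard back-propagation recursion for a layered network. The notation is ours, not the MILA paper’s, and assumes that each layer computes $z^{(l+1)} = W^{(l+1)} a^{(l)}$ from activities $a^{(l)} = f(z^{(l)})$ (biases omitted for brevity), with $\delta^{(l)} = \partial E / \partial z^{(l)}$ the error signal at layer $l$:

$$
\delta^{(l)} = \left( \left(W^{(l+1)}\right)^{\top} \delta^{(l+1)} \right) \odot f'\!\left(z^{(l)}\right)
$$

The derivative $f'(z^{(l)})$ is fixed by the forward pass, so on its way down the error signal only undergoes multiplication by a transposed weight matrix and element-wise scaling. Doubling or adding error signals at the output therefore doubles or adds them at every lower layer, which is the linearity the paper contrasts with real neurons.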

In back-propagation, a network learns from labelled examples, such as photos tagged with what they show. The connection strengths between its brain-like layers of neurons, called “weights”, are adjusted and readjusted until the network can perform an intelligent function with the fewest possible errors. For neural networks to become intelligent on their own, Hinton asserts, there has to be another way to learn than forcing solutions out of supervised data. “I don’t think it’s how the brain works,” he said. “We clearly don’t need all the labeled data.” In other words, back-propagation is not really serving the purpose for which neural nets were built: mimicking the human brain.
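The adjust-and-readjust loop described above can be sketched in plain Python. This is a minimal illustration, not Hinton’s code: the toy task (XOR, with four labelled examples), the network size, the learning rate and all function names are hypothetical choices. Each step runs the labelled inputs forward, sends the error back through the network to get a gradient for every weight, and nudges each weight against its gradient.

```python
import math
import random

# Hypothetical toy task (XOR): every input comes with a label.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init(n_hidden=4, seed=1):
    """Random small weights for a 2-input, n_hidden-unit, 1-output network."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return w1, b1, w2, 0.0


def predict(params, x):
    """Forward pass: tanh hidden layer, sigmoid output."""
    w1, b1, w2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    return h, sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)


def loss(params):
    """Mean squared error between predictions and labels."""
    return sum((predict(params, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)


def train_step(params, lr=0.5):
    """One full-batch gradient-descent step; gradients come from back-propagation."""
    w1, b1, w2, b2 = params
    n = len(w2)
    gw1 = [[0.0, 0.0] for _ in range(n)]
    gb1, gw2, gb2 = [0.0] * n, [0.0] * n, 0.0
    for x, t in DATA:
        h, y = predict(params, x)
        # Output error signal dE/dz_out for E = mean of (y - t)^2
        d_out = 2.0 * (y - t) * y * (1.0 - y) / len(DATA)
        gb2 += d_out
        for j in range(n):
            gw2[j] += d_out * h[j]
            # Send the error back through w2 and the tanh derivative
            d_h = d_out * w2[j] * (1.0 - h[j] ** 2)
            gb1[j] += d_h
            for i in range(2):
                gw1[j][i] += d_h * x[i]
    # Adjust every weight a little against its gradient
    w1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(w1, gw1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    w2 = [w - lr * g for w, g in zip(w2, gw2)]
    return w1, b1, w2, b2 - lr * gb2


params = init()
initial = loss(params)
for _ in range(5000):
    params = train_step(params)
final = loss(params)
print(f"loss before: {initial:.3f}, after: {final:.3f}")
```

Every update depends on the labels `t`: without them there is no error to propagate, which is the reliance on supervised data that Hinton objects to.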

Unsupervised learning algorithms such as the Boltzmann machine learning algorithm are among the most biologically plausible learning algorithms proposed so far. However, they come with their own set of challenges, related to the weight transport problem and to how symmetric weights could be achieved.
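For reference, the classic Boltzmann machine learning rule can be written as follows, where $s_i$ is the binary state of unit $i$, $\varepsilon$ is a learning rate, and the angle brackets denote averages taken with the visible units clamped to data and with the network running freely:

$$
\Delta w_{ij} = \varepsilon \left( \langle s_i s_j \rangle_{\text{data}} - \langle s_i s_j \rangle_{\text{model}} \right), \qquad w_{ij} = w_{ji}
$$

The rule is local, which is part of its biological appeal: each weight changes using only the activity of the two units it connects. But the same $w_{ij}$ serves the connection in both directions, and it is this symmetry that biological synapses are not known to provide.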

Unsupervised Learning – Future of AI

So does the problem lie in requiring too much training data? A widely known drawback of Deep Learning models trained with back-propagation is the huge volume of labelled data they need. Most AI researchers find labelling data a long, tedious job that is not very scientifically challenging. In fact, in many universities the task is assigned to interns who spend the summer annotating images. Alternatively, researchers download labelled datasets to test their models without appreciating the effort that went into preparing them.

Unsupervised learning gained traction in 2012, when Andrew Ng, who co-founded Coursera that same year, and his colleagues at Google Brain built a Deep Learning system that learned to identify cats from unlabelled YouTube video frames. Many research papers have touted unsupervised learning as the future of artificial intelligence because it better reflects real-world problems, where most data comes without labels. Viewed in that context, researchers indicate that back-propagation truly isn’t enough and has to make way for another breakthrough.

But why is unsupervised learning viewed as the way forward for Deep Learning? It is well known that supervised Deep Learning models require huge datasets in which every example has a corresponding label. For example, the widely popular ImageNet dataset, which has formed the basis of research across the globe, contains over a million images labelled by people.

Unsupervised learning requires little labelled data, since the objective of unsupervised learning research is to pre-train a model, known as an encoder, on unlabelled data so that it can be reused for other tasks. So far, supervised models have been reported to perform better than unsupervised ones, since they are usually trained for a single application, such as self-driving or object recognition (these algorithms are trained on video data). IBM Research’s Raghav Singh also emphasized this point in his recent Cypher talk, driving home how AI systems depend on huge volumes of training samples to perform a single task, whereas humans don’t need to go through a million examples to learn what an apple is.
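The pre-train-then-reuse idea can be illustrated with a deliberately tiny sketch. Everything in it is hypothetical: the 2-D points, the two labels and the function names. As a stand-in for a neural encoder, it uses the top principal component of the unlabelled data (found by power iteration), which is the same subspace an optimal linear autoencoder with a single hidden unit spans under squared error. Only afterwards are two labelled examples used, to classify new points in the encoded space.

```python
import math

# Hypothetical unlabelled data: plenty of points, no labels.
UNLABELLED = [(-2.0, -2.1), (-1.9, -2.0), (-2.2, -1.8), (-1.8, -2.2),
              (2.0, 2.1), (1.9, 2.0), (2.2, 1.8), (1.8, 2.2)]


def pretrain_encoder(points, iters=100):
    """Learn a 1-D linear encoder without labels (top principal direction)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    cxx = sum((p[0] - mx) ** 2 for p in points) / n
    cyy = sum((p[1] - my) ** 2 for p in points) / n
    cxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    v = (1.0, 0.0)
    for _ in range(iters):
        # Power iteration: repeatedly multiply by the covariance and renormalize
        u = (cxx * v[0] + cxy * v[1], cxy * v[0] + cyy * v[1])
        norm = math.hypot(u[0], u[1])
        v = (u[0] / norm, u[1] / norm)

    def encode(p):
        # Project the centred point onto the learned direction
        return v[0] * (p[0] - mx) + v[1] * (p[1] - my)

    return encode, v


encode, direction = pretrain_encoder(UNLABELLED)

# Downstream task: only two labelled examples, one per class (hypothetical).
LABELLED = [((-2.0, -1.9), "A"), ((2.1, 1.9), "B")]
prototypes = {label: encode(p) for p, label in LABELLED}


def classify(p):
    """Nearest labelled prototype in the encoded (1-D) space."""
    z = encode(p)
    return min(prototypes, key=lambda label: abs(z - prototypes[label]))


print(classify((-1.5, -2.5)), classify((2.5, 1.5)))  # A B
```

Real encoders are deep networks trained on far larger datasets, but the division of labour is the same: the unlabelled data shapes the representation, and the few labels are only needed at the end.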

Hinton proposes a new approach to AI

It is apparent why the AI heavyweight is so quick to distance himself from his earlier work. In an older Stanford seminar, Hinton examined a question neuroscientists have long asked: can the brain really do back-propagation? He explored why neuroscientists think it is impossible to implement in the cortex and outlined a number of reasons why the brain cannot do backprop:

a) Cortical neurons do not communicate real-valued activities (they send all-or-none spikes)

b) Neurons would need to send two different types of signal: in the forward pass, the output is the activity, y; in the backward pass, the output is the error derivative with respect to the input, dE/dx

c) Neurons do not have symmetrical reciprocal connections

d) The feedback connections do not even go back to the neurons that the feedforward connections came from
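Points (b) and (c) can be made concrete with a single artificial layer. In the minimal sketch below, the layer size, weights, input and target are arbitrary illustrative values and the function names are hypothetical. The forward pass sends activities `y`, while the backward pass sends a different quantity, `dE/dx`, computed by reusing the forward weights `W` in transposed form. A finite-difference check confirms that the backward signal really is the derivative of the error with respect to the input.

```python
import math
import random


def layer_forward(W, x):
    """Forward signal: activities y = tanh(W x)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W]


def layer_backward(W, y, dE_dy):
    """Backward signal: dE/dx = W^T (dE/dy * (1 - y^2)).

    The forward weights W are reused, transposed: in a brain this would
    require symmetric reciprocal connections (point c).
    """
    dE_dz = [g * (1.0 - yi ** 2) for g, yi in zip(dE_dy, y)]
    return [sum(W[i][j] * dE_dz[i] for i in range(len(W)))
            for j in range(len(W[0]))]


def error(W, x, target):
    """Squared error E = 0.5 * ||y - target||^2 for one layer."""
    y = layer_forward(W, x)
    return 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(y, target))


rng = random.Random(42)
W = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(2)]  # 2 outputs, 3 inputs
x = [0.4, -0.7, 0.2]
target = [0.5, -0.5]

y = layer_forward(W, x)  # what travels forward
dE_dx = layer_backward(W, y, [yi - ti for yi, ti in zip(y, target)])  # what travels back

# Compare with central finite differences of E with respect to each input
eps = 1e-6
numeric = []
for j in range(len(x)):
    x_plus = [xi + (eps if k == j else 0.0) for k, xi in enumerate(x)]
    x_minus = [xi - (eps if k == j else 0.0) for k, xi in enumerate(x)]
    numeric.append((error(W, x_plus, target) - error(W, x_minus, target)) / (2 * eps))

print(max(abs(a - b) for a, b in zip(dE_dx, numeric)))  # close to zero
```

In an artificial network both signals travel over the same wires with the same weights; the list above explains why cortical neurons do not appear to be wired that way.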

Recently, the neural network pioneer unveiled a new AI advancement that does not rely on large datasets. In two research papers, he discussed a way to “learn classifier of objects without using huge volumes of data”. The proposed method also lets a network recognize objects in images viewed from different angles, a development that could give a boost to current image recognition technology. Hinton’s method is built on capsules: small groups of neurons, organized into layers, that identify objects in images or video. Each capsule is responsible for identifying a particular feature of an image and recognizing it from varying angles.
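The article does not spell out how a capsule works internally. One concrete piece, taken from the 2017 paper “Dynamic Routing Between Capsules” by Sabour, Frosst and Hinton, is the “squash” non-linearity: a capsule outputs a vector whose length (between 0 and 1) stands for the probability that its feature is present and whose orientation encodes the feature’s instantiation parameters, such as pose. The minimal sketch below implements only that function. The 2-D pose vector and the hypothetical `rotate` helper, a crude stand-in for viewing the feature from another angle, are illustrations of our own, and the routing between capsule layers is omitted.

```python
import math


def squash(s):
    """Capsule non-linearity: keep the direction of s, map its length into [0, 1).

    v = (|s|^2 / (1 + |s|^2)) * s / |s|
    """
    norm_sq = sum(c * c for c in s)
    if norm_sq == 0.0:
        return [0.0 for _ in s]
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * c for c in s]


def length(v):
    return math.sqrt(sum(c * c for c in v))


def rotate(v, angle):
    """Rotate a 2-D vector (hypothetical stand-in for a change of viewpoint)."""
    c, s = math.cos(angle), math.sin(angle)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1]]


pose = [3.0, 4.0]  # hypothetical capsule input with |s| = 5
v = squash(pose)
v_rot = squash(rotate(pose, math.pi / 3))
print(round(length(v), 4), round(length(v_rot), 4))  # 0.9615 0.9615
```

Because squashing rescales a vector without changing its direction, the rotated input yields the same presence score and a correspondingly rotated output, which is the intuition behind recognizing a feature from varying angles.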