It is believed that Leonardo Da Vinci took more than a decade to paint a realistic version of Lisa del Giocondo which also happens to be the world’s most famous portrait ‘Mona Lisa’. Da Vinci worked through his adult life making sculptures and studying human anatomy. His eye for detail led to the most realistic reconstruction of human in a painting during the Renaissance, which can be observed in the way he played with light and shadows to create the illusion of bone structure. Now after more than four centuries, a different kind of reconstruction has been introduced. Super realistic portraits are already a thing of the past.

Now, how about making the subjects in the portraits to move, to speak and to emote?

The authors of this paper who also are the researchers at Samsung AI, Moscow, have used machine learning algorithm do exactly the same and the results look promising. One such example can be seen at the beginning of this article.

With the publication of this paper in 2014, applications of GANs have witnessed a tremendous growth.

The Generative-Adversarial networks have been successfully used for high-fidelity natural image synthesis, improving learned image compression and data augmentation tasks.

GANs have advanced to a point where they can pick up trivial expressions denoting significant human emotions.

A Brief On The Architecture

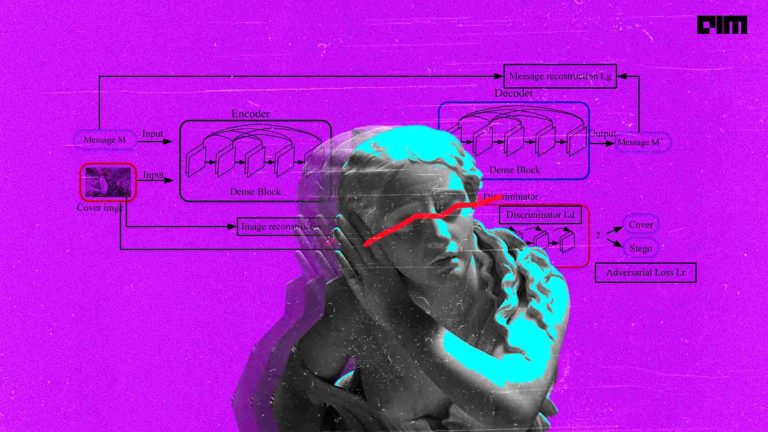

Few shot learning is a popular technique in computer vision applications to classify data/images by using few to one example of the target subject. For instance, there is only one example of the portrait of Mona Lisa. So, to make the model embed a two dimensional half tilted face of Mona Lisa with realistic human expressions, a meta learning architecture, as can be seen below, was used.

It has an embedder network that maps head images (with estimated face landmarks) to the embedding vectors, which contain pose-independent information.

The generator network maps input face landmarks into output frames through a set of convolutional layers.

During meta-learning, a set of frames from the same video is passed through the embedder to predict adaptive parameters of the generator. Then, the landmarks of a different frame are passed through the generator, comparing the resulting image with the ground truth. Here, the objective function includes perceptual and adversarial losses, with the latter being implemented via a conditional projection discriminator.

For training the model, the talking head datasets, VoxCeleb1 and VoxCeleb2 were used.

Challenges Faced And The Future Of This Work

There are quite a few work being done in successfully reconstructing the facial features. While modeling faces is a highly related task to talking head modeling, the two tasks are not identical, as the latter also involves modeling non-face parts such as hair, neck, mouth cavity and often shoulders/upper garment.

“These non-face parts cannot be handled by some trivial extension of the face modeling methods since they are much less amenable for registration and often have higher variability and higher complexity than the face part. In principle, the results of face modeling or lips modeling can be stitched into an existing head video. Such design,however, does not allow full control over the head rotation in the resulting video and therefore does not result in a fully fledged talking head system;” wrote the authors in their paper.

In this work, the authors consider the task of creating personalized photo realistic talking head models, i.e. systems that can synthesize plausible video-sequences of speech expressions and mimics of a particular individual. This work has practical applications for telepresence, including video conferencing and multi-player games, as well as special effects industry.

Know about this work in detail here.