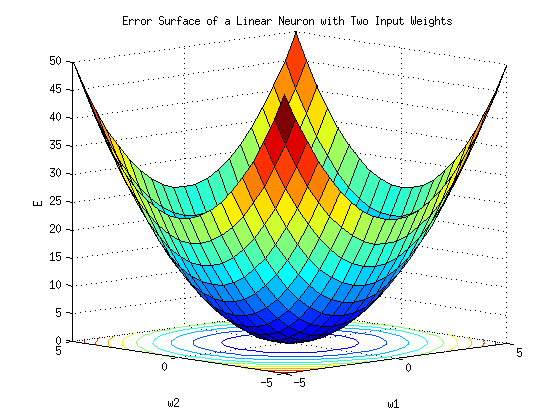

Gradient Descent is the most common optimisation strategy used in machine learning frameworks. It is an iterative algorithm used to minimise a function to its local or global minima. In simple words, Gradient Descent iterates overs a function, adjusting it’s parameters until it finds the minimum.

Gradient Descent is used to minimise the error by adjusting weights after passing through all the samples in the training set. If the weights are updated after a specified subset of training samples, or after each sample in the training set, then it is called a Stochastic Gradient Descent.

Stochastic Gradient Descent (SGD) and many of its variants are popular state-of-the-art methods for training deep learning models due to their efficiency. However, SGD suffers from many limitations that prevent its more widespread use: for example, the error signal diminishes as the gradient is back-propagated (i.e. the gradient vanishes); and SGD is sensitive to poor conditioning, which means a small input can change the gradient dramatically.

Learning rate is a parameter, denoted by α(alpha), is used to tune how accurately a model converges on a result (classification/prediction, etc.). This can be thought of as a ball thrown down a staircase. A higher learning rate value is equivalent to the higher speed of the descending ball. This ball will leap skipping adjacent steps and reaching the bottom quickly but not settling immediately because of the momentum it carries.

Learning rate is scalar – a value which tells the machine how fast or how slow it arrives at some conclusion. The speed at which a model learns is important and it varies with different applications. A super fast learning algorithm can miss a few data points or correlations which can give better insights on the data. Missing this will eventually lead to wrong classifications.

This momentum can be controlled with three common types of implementing the learning rate decay:

- Step decay: Reduce the learning rate by some factor every few epochs. Typical values might be reducing the learning rate by a half every 5 epochs, or by 0.1 every 20 epochs

- Exponential decay has the mathematical form α=α_0e^(−kt), where α_0,k are hyperparameters and t is the iteration number

- 1/t decay has the mathematical form α=α0/(1+kt) where a0,k are hyperparameters and t is the iteration number

There is no one stop answer to finding out the method in which hyperparameters can be tuned to reduce the loss; more or less a trial and error experimentation.

To bottle down on the values, there are few methods to skim through the parameter space to figure out the values that align with the objective of the model that is being trained:

- Adagrad is an adaptive learning rate method. Weights with a high gradient will have low learning rate and vice versa

- RMSprop adjusts the Adagrad method in a very simple way to reduce its aggressive, monotonically decreasing learning rate. This approach makes use of a moving average of squared gradients

- Adam is almost similar to RMSProp but with momentum

Whereas, Alternating Direction Method of Multipliers (ADMM) has been used successfully in many conventional machine learning applications and is considered to be a useful alternative to Stochastic Gradient Descent (SGD) as a deep learning optimizer.

ADMM And Alternatives

Adam is the most popular method because it is computationally efficient and requires little tuning. Other well-known methods that incorporate adaptive learning rates include AdaGrad, RMSProp and AMSGrad.

The use of the Alternating Direction Method of Multipliers (ADMM) has been proposed as an alternative to SGD.

Recently, the Alternating Direction Method of Multipliers (ADMM) has become popular with researchers due to its excellent scalability.

However, as an emerging domain, several challenges remain, including:

- The lack of global convergence guarantees,

- Slow convergence towards solutions, and

- Cubic time complexity with regard to feature dimensions.

To address these problems, the researchers at the George Mason University propose a novel optimization framework for deep learning via ADMM (dlADMM) to address these challenges simultaneously.

Here’s how dlADMM tries to solve few challenges:

- The parameters in each layer are updated backward and then forward so that the parameter information in each layer is exchanged efficiently.

- The time complexity is reduced from cubic to quadratic in (latent) feature dimensions via a dedicated algorithm design for subproblems that enhances them utilizing iterative quadratic approximations and backtracking.

- Experiments on benchmark datasets demonstrated that proposed dlADMM algorithm outperforms most of the comparison methods.

Know more about dlADMM here.