Linux has been around since the early 1990s. The operating system has since reached a user base that spans organizations and countries worldwide. It is present in phones, cars, refrigerators, and more. Perhaps most importantly, it powers much of the internet’s infrastructure and the supercomputers behind scientific breakthroughs.

The operating system is renowned for how well it manages the hardware resources of desktops and laptops. It is also widely regarded as one of the most secure and reliable operating systems available.

Years of extensive research on Linux have led to the development of many open-source tools for the Linux environment. Meanwhile, present-day AI advances are geared towards creating software and hardware that can solve day-to-day challenges in areas such as healthcare, education, security, manufacturing, banking, and more. Several AI-based tools available today are dedicated to Linux.

Analytics India Magazine has compiled a list of the most popular open-source AI tools that can be used in the Linux ecosystem. Most of these tools also run on other operating systems.

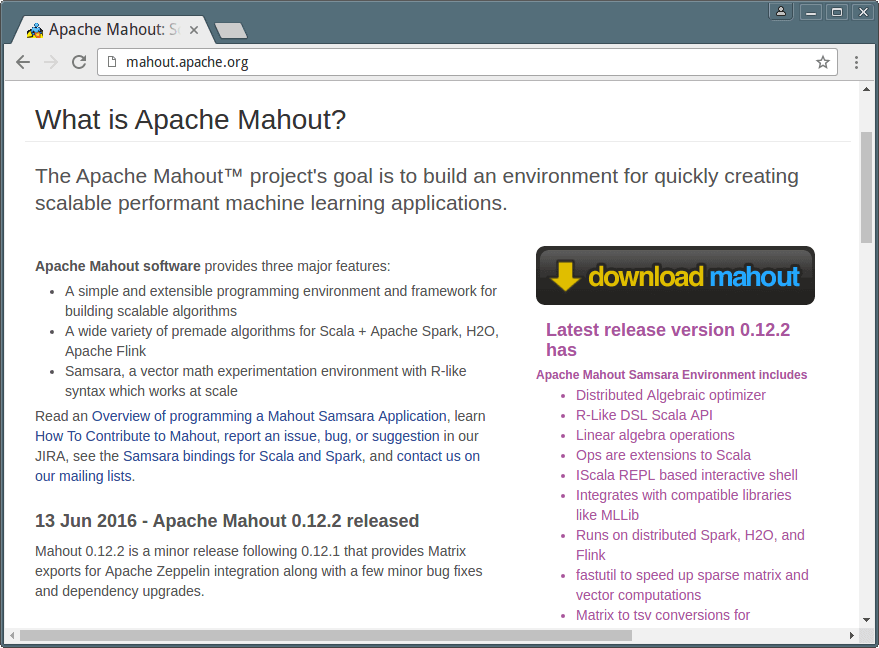

Apache Mahout

Mahout is an open-source Apache framework that brought machine learning to the Hadoop platform. It helps developers build scalable machine learning applications and occupies a similar space to Spark’s MLlib.

Mahout has three primary features, as listed below:

- A simple and scalable programming environment and framework

- A plethora of prepackaged algorithms for Scala + Apache Spark, H2O, and Apache Flink

- Samsara, a vector math experimentation environment with R-like syntax, dedicated to matrix calculation

Link: http://mahout.apache.org/

Apache SystemML

SystemML is an open-source machine learning platform for big data analysis. Its primary features are support for R-like and Python-like syntax and a focus on big data analytics, specifically large-scale mathematical computation.

The tool’s biggest feature is automatic, cost-based optimization: based on the characteristics of the data and the cluster, it decides whether user code runs directly on the driver or on an Apache Spark cluster.
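To make the driver-versus-cluster decision concrete, here is a minimal sketch of a memory-based planner. It assumes dense 64-bit matrices and a single fixed driver memory budget. `MatrixMeta`, `estimate_bytes`, and `choose_backend` are hypothetical names for illustration, not SystemML’s API, and the real optimizer weighs far more factors than this.

```python
from dataclasses import dataclass

BYTES_PER_DOUBLE = 8  # assumption: cells stored as 64-bit floats


@dataclass
class MatrixMeta:
    """Hypothetical metadata the planner knows about a matrix before running."""
    rows: int
    cols: int
    sparsity: float = 1.0  # fraction of non-zero cells; 1.0 means dense


def estimate_bytes(m: MatrixMeta) -> int:
    """Rough in-memory size; ignores the index overhead of sparse formats."""
    return int(m.rows * m.cols * m.sparsity * BYTES_PER_DOUBLE)


def choose_backend(inputs, output, driver_budget_bytes: int) -> str:
    """Run on the driver if inputs and output fit in its budget, else on Spark."""
    total = sum(estimate_bytes(m) for m in inputs) + estimate_bytes(output)
    return "driver" if total <= driver_budget_bytes else "spark"


if __name__ == "__main__":
    budget = 1 << 30  # hypothetical 1 GiB driver budget
    a = MatrixMeta(1000, 1000)
    print(choose_backend([a, a], a, budget))  # driver (about 24 MB in total)
    big = MatrixMeta(1_000_000, 10_000)
    print(choose_backend([big, MatrixMeta(10_000, 100)], MatrixMeta(1_000_000, 100), budget))  # spark
```

The point of the design is that the user writes the same script either way; only the plan changes as data sizes grow.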

Besides Apache Spark, SystemML also supports Apache Hadoop, Jupyter, Apache Zeppelin, and other platforms. SystemML has been applied in many fields, with notable use cases in the automotive industry, airport traffic, and social banking.

Link: http://systemml.apache.org/

Caffe

Caffe is a modular, expressive deep learning framework built with speed in mind, released under the BSD 2-Clause license. It already supports community projects spanning research, startup prototypes, and industrial applications in vision, speech, and multimedia.

Primary features of Caffe:

- Fast

- Easy to customize

- Highly extensible

- Rich community support

Caffe is primarily designed for neural network modeling and image processing tasks.

Link: http://caffe.berkeleyvision.org/

Deeplearning4j

This open-source tool provides a distributed deep learning library for the Java and Scala programming languages and runs on the Java Virtual Machine (JVM). It integrates with Hadoop and Spark on top of distributed CPUs and GPUs, and was designed primarily for business applications.

Deeplearning4j exposes the interfaces and parameters of its algorithms and documents them in detail, which lets developers customize them freely. The tool also supports matrix operations.

DL4J is released under the Apache 2.0 license and provides GPU support for scaling on AWS. It has also been adapted for microservice architectures.

Link: http://deeplearning4j.org/

H2O

H2O is an open-source, fast, scalable, and distributed machine learning framework that provides a large number of algorithms, including deep learning, gradient boosting, random forests, generalized linear modeling, and more.

This business-oriented AI tool enables users to draw insights from their data through faster, better predictive modeling. The framework’s core code is written in Java.

The tool targets large data volumes, helping enterprise users build analysis models that deliver fast, accurate predictions and extract decision-making information from massive datasets.

Link: http://www.h2o.ai/

MLlib

MLlib is an open-source, easy-to-use, high-performance machine learning library developed as part of Apache Spark. It is easy to deploy and can run on existing Hadoop clusters and data. It also includes relevant test procedures and data generators.

Salient features of MLlib:

- Easy to use

- High performance

- Easy to deploy

The library currently supports a variety of machine learning algorithms for classification, regression, recommendation, clustering, and survival analysis. It can be used from Python, Java, Scala, and R.

Link: https://spark.apache.org/mllib/

NuPIC Machine Intelligence

NuPIC is an open-source machine learning framework based on Hierarchical Temporal Memory (HTM), a theory of the neocortex. HTM is designed to analyze real-time streaming data: it learns time-based patterns in the data, predicts imminent values, and reveals irregularities.

Notable features include:

- Prediction and modeling

- Real-time streaming data

- Hierarchical temporal memory

- Continuous online learning

- Temporal and spatial patterns

- Powerful anomaly detection
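To show what continuous online learning and streaming anomaly detection mean in practice, here is a minimal sketch. It deliberately swaps in a much simpler technique than HTM: a running z-score detector that updates its mean and variance with Welford’s online algorithm. The class name, the 3.0 threshold, and the warm-up length are assumptions for illustration, not NuPIC’s API.

```python
import math


class StreamingAnomalyDetector:
    """Flags values far from the running mean and keeps learning from every value.

    A simple statistical stand-in, NOT Hierarchical Temporal Memory.
    """

    def __init__(self, threshold: float = 3.0, warmup: int = 10):
        self.threshold = threshold  # z-score above which a value counts as anomalous
        self.warmup = warmup        # observations to learn from before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Score x against what has been learned so far, then learn from x."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford's online update: no need to store past values
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


if __name__ == "__main__":
    detector = StreamingAnomalyDetector()
    stream = [10, 11, 9, 10, 12, 10, 11, 9, 10, 11, 10, 50, 10]
    flags = [detector.update(v) for v in stream]
    print([i for i, flagged in enumerate(flags) if flagged])  # [11]
```

Unlike HTM, this detector has no notion of temporal sequences; it only learns the distribution of individual values, which is why it serves purely as an illustration of the streaming, learn-as-you-go setting.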

Link: http://numenta.org/

OpenCyc

Cyc is billed as the world’s largest and most complete general knowledge base and commonsense reasoning engine, and OpenCyc is the open-source version based on Cyc. It contains a selection of carefully organized Cyc entries.

It finds application in areas such as:

- Rich domain modeling

- Domain-specific expert systems

- Text understanding

- Semantic data integration

- AI games

Link: http://www.cyc.com/platform/opencyc/

OpenNN

OpenNN is an open-source class library written in C++ for deep learning, used to develop neural networks. It focuses on implementing neural networks efficiently. However, it is best suited to experienced C++ programmers with strong machine learning skills.

Characterized by deep architectures and high performance, OpenNN can be used to implement any nonlinear model in supervised learning settings. It also supports the design of neural networks with universal approximation properties.

Link: http://www.opennn.net/

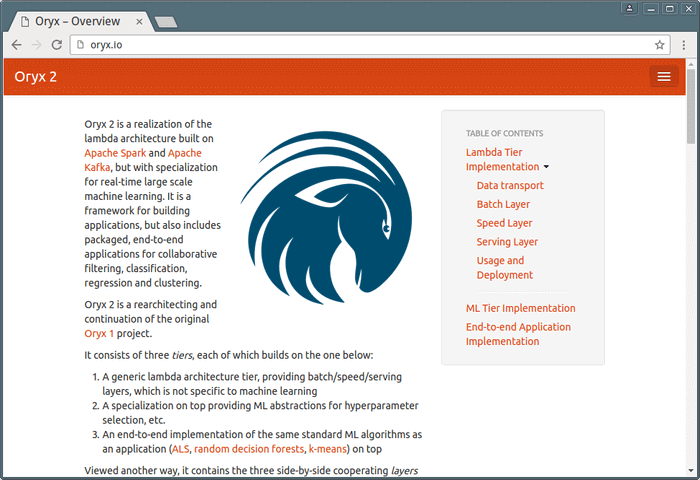

Oryx 2

A continuation of the original Oryx project, Oryx 2 is built on Apache Spark and Apache Kafka. The project began as Myrrix, which was acquired by big data company Cloudera and renamed Oryx. Oryx 2 was re-architected around the lambda architecture and is dedicated to real-time machine learning.

It serves as a framework for large-scale machine learning, real-time prediction, and analytics, and is used extensively for application development.
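The lambda architecture Oryx 2 is built around is easiest to grasp with a toy example. In the minimal sketch below, a batch layer periodically recomputes a view from the full, append-only event history, a speed layer updates a small view in real time for events that arrived since the last batch run, and a serving layer merges the two. The "model" here is just a per-item event count, and `LambdaCounter` with its methods is a hypothetical illustration, not Oryx’s API.

```python
from collections import Counter


class LambdaCounter:
    """Toy lambda architecture: batch view over all history plus a speed view
    over events that arrived since the last batch run."""

    def __init__(self):
        self.master_log = []         # immutable, append-only record of every event
        self.batch_view = Counter()  # rebuilt from scratch by the batch layer
        self.speed_view = Counter()  # updated incrementally by the speed layer

    def ingest(self, item: str) -> None:
        """New event: persist it and update the speed layer immediately."""
        self.master_log.append(item)
        self.speed_view[item] += 1

    def run_batch(self) -> None:
        """Batch layer: recompute the view from the full log, then reset the speed view.

        Safe here only because this toy is single-threaded; a real system must not
        drop events that arrive while a batch job is running.
        """
        self.batch_view = Counter(self.master_log)
        self.speed_view = Counter()

    def query(self, item: str) -> int:
        """Serving layer: merge batch and speed views to answer a query."""
        return self.batch_view[item] + self.speed_view[item]


if __name__ == "__main__":
    lc = LambdaCounter()
    for event in ["a", "a", "b"]:
        lc.ingest(event)
    print(lc.query("a"))  # 2, answered entirely by the speed layer
    lc.run_batch()
    lc.ingest("a")
    print(lc.query("a"))  # 3 = 2 from the batch view + 1 from the speed view
```

The trade-off the pattern captures: the batch layer is slow but recomputes from complete data, while the speed layer is fast but only covers recent events, so queries stay both fresh and eventually accurate.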

Link: http://oryx.io/